Peer-to-Peer Networks

Introduction

This article is a compilation of research regarding peer-to-peer networks that was later used to create an interactive educational museum exhibit. You can find out more about the exhibit in this article and view the exhibit itself here: https://www.jbm.fyi/roughguide/

What are Peer-to-peer networks?

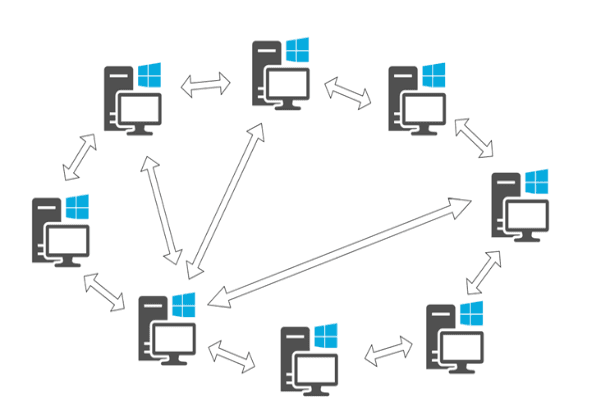

Peer-to-peer, Peer2Peer or P2P networks are distributed networks of clients who are all equally privileged and equipotent participants in the network. Clients are called 'peers' or 'nodes', and each makes a portion of its resources, such as processing power, disk storage or network bandwidth, directly available to other participants of the network without the need for any intermediaries or centralised entities. These shared resources are required in order to provide the services and content offered by the network. In 'pure' peer-to-peer networks, any single arbitrary node can be removed from the network without the network experiencing a loss in service. 'Hybrid' peer-to-peer networks are peer-to-peer networks where a centralised entity is required to offer some parts of the network service. This contrasts with a traditional client-server network, where the 'server' is a centralised, typically higher-performance system offering content or a service to one or more typically lower-performance 'clients', who only consume the services and do not contribute any of their own resources. [1]

The image above shows the peer-to-peer architecture on the left and the traditional client-server architecture on the right [2].

History

The internet, or ARPANET as it was then known, was originally designed with a peer-to-peer architecture. The ARPANET, or Advanced Research Projects Agency Network, was a project started in 1966 that laid the foundations for what would eventually become the internet. The goal of the ARPANET was to share computing resources around the United States, originally with the aim of providing redundancy for military computers in the event of a military base being destroyed. These goals lend themselves organically to the peer-to-peer architecture, and the first few hosts on the ARPANET (UCLA, SRI, UCSB and the University of Utah) were connected together as equal peers.

This early internet did not have much in the way of security as most of the early users of the internet were researchers who knew each other. Some of the first popular internet applications were Telnet (1969) [4] and FTP (1971) [5]. Both utilised the client-server architecture, but due to the lack of security and partitioning of the early internet, most of the usage for these tools was still symmetric with every host able to Telnet or FTP to other hosts, and most servers also acted as clients and vice versa.

Usenet was first deployed in 1980 [6], and was one of the first applications to utilise a peer-to-peer architecture as we would understand it today. Originally, there were two hosts, one at the University of North Carolina and the other at Duke University. Users were able to read and post messages to a specific topic (or 'newsgroup') by connecting to their local host. Periodically, the hosts would use the Unix-to-Unix Copy Protocol (UUCP) to automatically synchronise the Usenet content directory. As the network grew, so did the number of topics and messages, and a dedicated TCP/IP protocol called the Network News Transfer Protocol (NNTP) was eventually developed to efficiently discover and exchange messages and topics. A new Usenet server joins the network by setting up a news exchange with at least one other news server. Today this is typically done by setting up an exchange with a large news server provided by an ISP. Periodically, the news from the ISP server is downloaded onto the new server and any new posts on the new server are sent to the ISP server. The content then propagates through the network via other servers contacting the ISP server. The massive volume of traffic on the Usenet network has made some control necessary, and today administrators can select a restricted set of newsgroups to support and store the content for a limited period of time. Usenet is still in use today, but its trusting philosophy has limited its usefulness by making it vulnerable to spam and off-topic discussion.

The Domain Name System (DNS) was an early example of a hybrid peer-to-peer network. In the early days of the internet, human-readable domain names were mapped to IP addresses through a text file called hosts.txt that contained a series of domain name and IP address pairings, e.g. jbm.fyi would be paired with 3.250.195.229. Users would have to try to keep their hosts file up to date by frequently downloading the file off the internet. As the number of hosts on the internet grew, this quickly became infeasible. DNS, released in 1983 [7], established a hierarchical structure for domain resolution. Each domain has an authority, which holds the name server for every host on that domain. When a host wants to know the address for a particular domain, it queries the closest name server. If that name server does not know the answer, it queries a higher authority, and so on until the root name servers for the whole internet are queried. The root servers hold information about the Top Level Domains (TLDs). TLDs are the last part of a domain name, such as .com. For example, for the domain example.jbm.fyi: the .fyi TLD would hold the address for the name server(s) responsible for jbm.fyi, and this name server would hold the records associated with any subdomains at jbm.fyi such as example.jbm.fyi. Each level of the hierarchy caches the records it receives from more authoritative name servers to speed up the process for future queries. Every name server acts as both a client and a server, and the network has been able to scale from the few thousand domains that existed in 1983 to the millions that exist today.
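The lookup-and-cache behaviour described above can be sketched in a few lines of Python. This is a toy model rather than real DNS: the `NameServer` class, its `resolve` method, the query counter and the address 192.0.2.10 (taken from a range reserved for documentation) are all assumptions made for illustration.

```python
class NameServer:
    """A toy name server: it answers from its own records or cache,
    hands the query to a delegated server, or asks its parent."""

    def __init__(self, zone, records=None, delegations=None, parent=None):
        self.zone = zone
        self.records = records or {}          # names this server is authoritative for
        self.delegations = delegations or {}  # domain suffix -> more specific server
        self.parent = parent                  # higher authority to fall back on
        self.cache = {}                       # answers learned from other servers
        self.queries = 0                      # how many queries reached this server

    def resolve(self, name):
        self.queries += 1
        if name in self.records:
            return self.records[name]
        if name in self.cache:
            return self.cache[name]
        # Prefer a delegated server for the matching suffix, else ask the parent.
        upstream = self.parent
        for suffix, server in self.delegations.items():
            if name == suffix or name.endswith("." + suffix):
                upstream = server
                break
        if upstream is None:
            raise KeyError(f"cannot resolve {name}")
        address = upstream.resolve(name)
        self.cache[name] = address  # remember the answer for future queries
        return address


# Hypothetical hierarchy: root -> .fyi -> jbm.fyi, plus a local server.
jbm = NameServer("jbm.fyi", records={"example.jbm.fyi": "192.0.2.10"})
fyi = NameServer("fyi", delegations={"jbm.fyi": jbm})
root = NameServer(".", delegations={"fyi": fyi})
local = NameServer("local", parent=root)

first = local.resolve("example.jbm.fyi")   # walks local -> root -> fyi -> jbm
second = local.resolve("example.jbm.fyi")  # answered from the local cache
```

After the second call, `root.queries` and `jbm.queries` are still 1, which is the effect of caching: repeated lookups never leave the local server.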

In the late 1990s, the number of internet users grew explosively. Whereas previously the primary users of the internet were computer researchers and enthusiasts, the 90s saw millions of ordinary people adopting the internet. Many of these new users were not interested in publishing or creating content and wanted to consume content on the net similar to how they would read a newspaper or watch television. Typically, users had only a slow connection and home computers were not very powerful. Combined with the difficulty of setting up two-way communications, these factors led to asymmetric network links and the adoption of the client-server model as the primary model for internet architecture. Service providers were able to provision powerful servers that could meet the needs of the less powerful clients, who did not need to have a permanent IP address and could be sitting behind firewalls or NATs.

The internet was originally designed on principles of co-operation and efficiency among researchers and enthusiasts. The mass appeal of the internet meant that many of the participants no longer believed in these original principles and this led to a breakdown of co-operation. In 1994, the first major spam attack occurred. A small law firm were able to post an advert on nearly every Usenet site. This became known as the Canter and Siegel "green card spam" and attracted disgust from the internet community, but such spam quickly became commonplace. The advert was not paid for by the law firm and instead the users effectively funded it in the form of computing power.

The rise of this uncooperative behaviour led to security concerns and serious changes in how the internet operated. By default, a machine that could access the internet could also be accessed by any other machine on the internet. Network administrators quickly realised that normal users couldn't be trusted to take the security precautions necessary for this scenario and began to deploy firewalls allowing users to contact external servers, but not allowing external servers to contact the internal network. At the same time, the limited number of IP addresses meant it became impractical for every host to have one permanent address and dynamic IP addressing became the norm. Under dynamic IP addressing, clients would be assigned an available IP address by the ISP, which would change every time they connected. Network Address Translation (NAT) was also proposed as a solution to this problem. NAT effectively allows many users to share a single IP address by mapping a single public IP address onto multiple private internal IP addresses. NATs also act similarly to firewalls, by not allowing any communication with the external network without going through the NAT. This is how most home networks work: each client on the network is assigned an internal IP address such as 192.168.0.2 and is able to communicate with the other clients using this address. When a device wishes to contact an external address, it contacts the NAT (which is now often referred to as the 'router'), which makes the client's request on its behalf using the external IP address. When a response is received, the NAT maps it back to the internal IP address and forwards it to the client. Most ISPs now use a combination of NAT and dynamic IP addresses and many users are sitting behind many layers of NAT. These factors made the use of peer-to-peer networks significantly more challenging and led to the decline of peer-to-peer networks in favour of the client-server model.
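A minimal Python sketch of the address mapping a NAT performs is shown below. It assumes port-based translation, which is how home routers commonly share one public address; the `Nat` class, its method names, the starting port and the public address 203.0.113.5 (from a documentation range) are hypothetical.

```python
class Nat:
    """Toy NAT: many private (ip, port) pairs share one public IP address."""

    def __init__(self, public_ip):
        self.public_ip = public_ip
        self.next_port = 40000  # arbitrary first public port for this sketch
        self.table = {}         # public port -> (private ip, private port)

    def outbound(self, private_ip, private_port):
        """Return the public (ip, port) used for an internal client's request."""
        for public_port, internal in self.table.items():
            if internal == (private_ip, private_port):
                return self.public_ip, public_port  # reuse the existing mapping
        public_port = self.next_port
        self.next_port += 1
        self.table[public_port] = (private_ip, private_port)
        return self.public_ip, public_port

    def inbound(self, public_port):
        """Map a response back to the internal client, or None to drop it."""
        return self.table.get(public_port)


nat = Nat("203.0.113.5")
laptop = nat.outbound("192.168.0.2", 5000)
phone = nat.outbound("192.168.0.3", 5000)
reply_target = nat.inbound(laptop[1])  # response to the laptop's request
unsolicited = nat.inbound(12345)       # no mapping exists, so it is dropped
```

The last line is the part that hurts peer-to-peer applications: a peer outside the network cannot start a conversation with a peer inside it, because no mapping exists until the inside peer sends something first.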

The era of modern peer-to-peer file sharing began in 1999 when Napster was introduced. Napster was a peer-to-peer file sharing program that enabled users to download files that were on other users' computers. Napster was primarily used for sharing MP3 files and at its peak had over 80 million users. The popularity of the software eventually led to legal difficulties, with music artists suing for copyright infringement. Napster was a hybrid peer-to-peer network as it relied on a central indexing server (which eventually led to its downfall), but it paved the way for future peer-to-peer networking technologies.

[3]

Applications of Peer-to-Peer Technology

Today, peer-to-peer networks are employed in a wide variety of applications.

BitTorrent

BitTorrent, often referred to simply as torrent, is the most popular file sharing platform in use today. In 2019, the protocol was responsible for generating 2.24% of downstream and 27.58% of upstream internet traffic [8]. BitTorrent was first released in 2001 by Bram Cohen and was designed to share large files efficiently over lower bandwidth networks. Rather than downloading a file from a single server, BitTorrent allows users to join a "swarm" of peers with whom they can exchange data. A complete copy of the full file is called a "seed" and a host distributing the seed is called a "seeder". The file being distributed is split into "pieces" and once a peer has received a piece, it becomes a source of that piece for other peers. Peers usually download multiple pieces non-sequentially and in parallel, and reassemble all the pieces into one file once the download is complete. Once a seeder has distributed all of the pieces of the original file to the swarm, the seeder can disconnect from the network, even if no single member of the swarm has a complete copy, and the peers will still be able to construct the full file by interacting with other members of the swarm. Once a peer has obtained a complete copy of the file, it can act as a seeder. In this way, the task of distributing the file is shared only by those who want to obtain a copy of it.

Each piece is protected by a cryptographic hash stored in the torrent file, which guards against accidental and malicious modifications. Torrent files are small files that contain a description and metadata of the file they pertain to and information about the associated tracker. Trackers are servers that help to facilitate the efficient transfer of files by keeping track of which peers hold copies of the file. When a user wishes to download a file via torrent, they must first obtain a copy of the torrent file, which instructs their torrent client which tracker to interrogate to discover peers. The protocol does not include any mechanism to index torrent files, and this is left to "indexes", which are websites that provide a searchable repository of torrent files.
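The piece-and-hash mechanism can be illustrated with a short Python sketch. It is not a BitTorrent client: the function names and the tiny piece size are assumptions chosen for readability, although SHA-1 is the hash used by the original version of the protocol.

```python
import hashlib

PIECE_SIZE = 4  # bytes; unrealistically small so the example stays readable


def split_into_pieces(data, piece_size=PIECE_SIZE):
    """Cut the file contents into fixed-size pieces (the last may be shorter)."""
    return [data[i:i + piece_size] for i in range(0, len(data), piece_size)]


def piece_hashes(pieces):
    """The list of per-piece hashes that a torrent file would carry."""
    return [hashlib.sha1(piece).digest() for piece in pieces]


def verify_piece(index, piece, expected_hashes):
    """Accept a downloaded piece only if its hash matches the expected one."""
    return hashlib.sha1(piece).digest() == expected_hashes[index]


original = b"peer-to-peer networks"
pieces = split_into_pieces(original)
expected = piece_hashes(pieces)

# Pieces may arrive in any order; here they arrive in reverse.
received = {}
for index in reversed(range(len(pieces))):
    if verify_piece(index, pieces[index], expected):
        received[index] = pieces[index]

rebuilt = b"".join(received[i] for i in range(len(pieces)))
tampered_ok = verify_piece(0, b"XXXX", expected)  # a modified piece is rejected
```

Because each piece is checked independently, a peer can safely accept pieces from strangers and discard only the ones that fail verification.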

The BitTorrent protocol, as described above, is a hybrid peer-to-peer network due to the reliance on trackers, and this introduces a centralised point of failure. However, this has been alleviated with the introduction of "multitrackers", which enable a single torrent file to support multiple trackers, and eliminated entirely with the introduction of "peer exchange" and "distributed trackers". Peer exchange or PEX allows peers to update each other directly about the state of the swarm without contacting a tracker, but cannot be used to introduce a new peer to the swarm. Distributed trackers are used to introduce peers to a swarm and take the form of a distributed database built on top of a Distributed Hash Table (DHT). The only remaining aspect of BitTorrent that is centralised today is the use of torrent indexes to obtain torrent files. DHT databases can also be used to build an index of torrent files and are slowly replacing torrent indexes as the primary method for obtaining torrent files.
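To give a feel for how a DHT decides which peers are responsible for a given key, here is a minimal Python sketch. It assumes the XOR distance metric from Kademlia, the design that BitTorrent's DHT is based on; the helper names and peer names are hypothetical and the sketch leaves out routing tables and networking entirely.

```python
import hashlib


def make_id(text):
    """Derive a 160-bit identifier from arbitrary text using SHA-1."""
    return int.from_bytes(hashlib.sha1(text.encode()).digest(), "big")


def closest_nodes(key, node_ids, k=2):
    """Return the k node IDs nearest to the key by XOR distance."""
    return sorted(node_ids, key=lambda node: node ^ key)[:k]


# Hypothetical peers and a hypothetical torrent identifier.
peers = {name: make_id(name) for name in ["peer-a", "peer-b", "peer-c", "peer-d"]}
torrent_key = make_id("some torrent")

# These are the peers that would store the list of swarm members for the key.
responsible = closest_nodes(torrent_key, peers.values())
```

Every peer can run the same calculation locally, so any peer can work out who to ask about a torrent without consulting a central tracker.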

The BitTorrent protocol has been criticised for facilitating the transfer of illegal content such as copyrighted or illicit material. The fact that the BitTorrent protocol is entirely legal, and it is only the content that can fall foul of the law, has not stopped many ISPs from blocking or throttling torrent traffic. As a result, many users take precautions such as the use of VPNs to prevent ISPs from detecting their torrent activity. The authorities frequently block torrent index and tracker server addresses and attempt to take legal action against their operators. As a result of this, operators try to stay anonymous. Torrent indexes are usually revived with a slightly different name after they have been blocked, or moved to the dark web where they can only be accessed with special clients such as Tor. These factors motivated many of the efforts to further decentralise the protocol.

[9, 10, 11]

Blockchain

A blockchain is a peer-to-peer distributed database where every peer can guarantee that it has identical data to every other peer. Every transaction that occurs on the blockchain is executed and shared by every peer. Once a record has been accepted by the network, it is permanently, verifiably and immutably recorded on the blockchain. Blockchains were invented in 2008 by an unknown individual or group using the name Satoshi Nakamoto [2] as part of their digital currency project culminating in Bitcoin.

The fundamental principles of blockchain technology are:

- Decentralised - The blockchain should not rely on any single centralised authority.

- Transparent - All data and operations that occur on the blockchain should be visible and auditable to all users.

- Trustless - Users should not need to trust any authority or individual.

- Immutable - Once data is stored on the blockchain it should not be possible to erase it and all modifications are recorded forming an audit trail.

- Anonymous - Users should not need to reveal their real-world identities in order to participate.

- Open-source - Users should be able to verify the code running the blockchain. Anyone should be able to modify or build an application on top of the blockchain.

* Not all applications built on top of blockchain respect these principles.

[12]

New records are added to the blockchain through the following process:

- Sender broadcasts new record to the network.

- Record is added to a pool of pending transactions.

- Verifiers select a number of records from the pool, validate them and group them into a block.

- Each verifier competes for their block to be accepted by the rest of the network. There are numerous mechanisms to settle the contest.

- Every node in the network uses a consensus algorithm to decide which block to confirm.

- Every node in the network adds the confirmed block to their chain.

[13]

Blockchains have 3 fundamental elements: blocks, nodes and verifiers.

Blocks

[14]

The blockchain is made up of an ever-growing number of sequential blocks, where each block is linked to the preceding block, forming a chain. Each block contains a batch of data and a header. The data varies based on the application for the blockchain, but the header consists of 2 hashes and a timestamp. The first hash is the hash of the previous block and the second is a hash of the current block.

[15]

Hashes are one-way cryptographic functions that take an input of an arbitrary size and produce an output of a fixed size:

- Given any input it should be easy to compute the output.

- Given any output it should be very difficult to compute the input.

- It should be very difficult to find two inputs that have the same output.

The chain can be verified by hashing the data inside the current block and ensuring this matches the hash of the current block in the block header. Next, the data from the previous block is hashed and matched against the hash of the previous block in the block header. This is repeated moving back along the chain until the root block is encountered, at which point the chain can be considered verified. Since it is infeasible to find two inputs to a hash function that produce the same output, if any of the hashes do not match their expected values, the data within the block has been tampered with or corrupted.

[16]
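The verification walk described above can be sketched in Python as follows. The block layout and function names are assumptions made for illustration, and as a simplification the block hash here covers the data together with the previous block's hash, so that changing either one is detectable.

```python
import hashlib
import json
import time


def block_hash(data, previous_hash):
    """Hash a block's data together with the hash of the block before it."""
    payload = json.dumps({"data": data, "previous_hash": previous_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


def make_block(data, previous_block=None):
    """Build a block whose header holds two hashes and a timestamp."""
    previous_hash = previous_block["header"]["hash"] if previous_block else None
    return {
        "data": data,
        "header": {
            "previous_hash": previous_hash,
            "hash": block_hash(data, previous_hash),
            "timestamp": time.time(),
        },
    }


def verify_chain(chain):
    """Walk from the newest block back to the root block, checking every hash."""
    for index in range(len(chain) - 1, -1, -1):
        header = chain[index]["header"]
        # The stored hash must match a fresh hash of the block's contents.
        if block_hash(chain[index]["data"], header["previous_hash"]) != header["hash"]:
            return False
        # The stored link must match the previous block's hash.
        if index > 0 and header["previous_hash"] != chain[index - 1]["header"]["hash"]:
            return False
    return True


root_block = make_block(["root block"])
second_block = make_block(["alice pays bob 5"], root_block)
third_block = make_block(["bob pays carol 2"], second_block)
chain = [root_block, second_block, third_block]
```

Calling `verify_chain(chain)` returns `True` for the untouched chain. Editing the data of any earlier block afterwards, without recomputing every later hash, makes it return `False`.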

Nodes

No single device should be able to control the whole blockchain and instead there are numerous machines or nodes who all maintain their own copy of the blockchain. There must be consensus among a majority of the nodes in order to update the blockchain. Once consensus is reached, a new block can be added to the chain.

It is possible to be a user of the blockchain without being a "full node". In this case, a user would obtain the most recent block from the longest available chain and verify it with a number of other nodes in the network. This is less secure, as the user is not performing the verification themselves.

[16]

Verifiers

Verifiers have the job of selecting transactions from the transaction pool, grouping them into a block and proposing this block as a new block to the network. Before including a record in a block, the verifier must check that it is valid. Validity will depend on the exact application but could involve, for example, checking that a sender has sufficient balance to make a transfer. Once the verifiers have assembled their blocks, they compete with each other for their block to be accepted by the network. The two most popular mechanisms to settle the contest are Proof of Work (PoW) and Proof of Stake (PoS). Verifiers usually receive a financial reward if their block is accepted. For many blockchain systems, the same machines act as both nodes and verifiers.

[16]

Proof of Work (PoW)

Proof of Work was the original consensus contest proposed by Nakamoto. The process of computing PoW is called "mining" and participants are called "miners". The headers in PoW blockchains store an additional value called the "nonce". The job of miners is to adjust the value of the nonce so that the hash of the block header is less than a target value called the "difficulty target". The network adjusts the value of the difficulty target to maintain a constant rate of block production. As hash functions are one-way, the only way to find a suitable nonce value is brute force, which is time-consuming. If any of the data in a block changes, the hashes in the block header would change as well and hence a new nonce value would need to be calculated. This principle secures the blockchain, as for a past block to be modified, an attacker would have to redo the PoW algorithm for all blocks after the modified block, and then have the final block accepted by the network. The probability of this happening continues to approach zero as the chain grows longer. Nakamoto described PoW as "One-CPU-One-Vote". The longest chain in the network represents the majority decision as it has the most CPU time invested into it.

[12, 17]
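The nonce search can be sketched in Python as below. This is a simplified illustration, not Bitcoin's actual header format: the header is just a string of the two hashes and the nonce, a single SHA-256 is used, and the target is deliberately easy so that the loop finishes after a few thousand attempts on average. All names are hypothetical.

```python
import hashlib

# An easy difficulty target: roughly 1 in 4096 hashes falls below it.
TARGET = 2 ** 256 >> 12


def header_hash(previous_hash, data_hash, nonce):
    """Hash the header fields and interpret the digest as a large integer."""
    payload = f"{previous_hash}{data_hash}{nonce}".encode()
    return int.from_bytes(hashlib.sha256(payload).digest(), "big")


def mine(previous_hash, data_hash, target=TARGET):
    """Brute-force the nonce until the header hash is below the target."""
    nonce = 0
    while header_hash(previous_hash, data_hash, nonce) >= target:
        nonce += 1
    return nonce


previous_hash = "00ab"  # placeholder values for illustration
data_hash = "c0ffee"
nonce = mine(previous_hash, data_hash)
proof = header_hash(previous_hash, data_hash, nonce)
```

Checking the result takes a single hash (`proof < TARGET`), while finding it took many. Lowering the target makes the search proportionally longer, which is how the network tunes the rate of block production.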

Proof of Stake (PoS)

PoW algorithms have been criticised for the massive quantities of CPU time (and electricity) wasted brute forcing the nonce, and the relatively small number of blocks that can be added to the chain per minute. PoS is an attempt to address these criticisms. In PoS, the probability of a verifier's block being accepted by the network is based on how large a stake in the network they have. Under PoS, two concepts protect the network:

- An attacker would need to obtain a large stake in the network in order to carry out an attack. This would be very expensive.

- In order to have enough of a stake to manipulate the network, the attacker would be damaging something they have a large (and expensive) stake in. This would devalue their stake and act as a disincentive.

[12]
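Stake-weighted selection can be sketched as a weighted random draw. The Python below is a minimal illustration under the assumption that the chance of being chosen is directly proportional to stake; real PoS systems add many further rules, and the names and stake values here are made up.

```python
import random
from collections import Counter


def choose_verifier(stakes, rng):
    """Pick one verifier with probability proportional to its stake."""
    names = list(stakes)
    weights = [stakes[name] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]


stakes = {"alice": 70, "bob": 20, "carol": 10}  # made-up stakes
rng = random.Random(42)                         # fixed seed so runs are repeatable
wins = Counter(choose_verifier(stakes, rng) for _ in range(10_000))
```

Over many rounds the share of blocks each verifier wins tracks its share of the total stake, so an attacker wanting to win most rounds would first need to acquire most of the stake.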

Digital Currencies

Digital currencies, or cryptocurrencies, were the original application for blockchain technology. In the years since, blockchain has found a wide variety of applications, but cryptocurrencies remain the most popular and valuable application. At the time of writing, the total market capitalization for the cryptocurrency market was over $2.5 trillion [18].

The first and most popular blockchain-based digital currency is Bitcoin. At the time of writing, Bitcoin's market capitalization was over $1 trillion [18]. Bitcoin is a global currency and payment system that enables users to transfer value without the need for intermediaries such as banks and outside the control of central banks, currency authorities and governments. Each user generates a public key which is used as an address and maintains a private key which is used to secure access to their "wallet". Bitcoin is a PoW currency and adjusts the difficulty target such that one block is produced approximately every 10 minutes. The miner who finds a valid nonce is rewarded with a number of bitcoins.

Smart Contracts

Smart contracts are another revolutionary application for blockchain technology. Smart contracts are computer programs that are able to automatically execute, control or document legal events according to the terms of a contract [19]. The earliest examples of smart contracts were vending machines. In 2013, Vitalik Buterin proposed the use of the blockchain as a decentralised global computer that could run a variety of applications, services or contracts [20].

Buterin created the Ethereum project, which specified the Ethereum Virtual Machine (EVM) and a cryptocurrency called Ether which is used to pay for "gas". Gas is a unit that is used to describe the amount of computational effort required to execute a given operation on the EVM. Users specify how much Ether they want to offer per unit of gas and this is called the "gas price". At the time of writing, Ethereum uses PoW, but the verifiers must also perform any necessary computation and update the state of the virtual machine, which is recorded on-chain. Verifiers receive the gas price for each unit of gas they compute as a reward and hence are motivated to include transactions that have higher gas prices in their blocks. In practice, this means that users offering higher gas prices have their transactions processed first.
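The fee arithmetic and the resulting ordering can be sketched in Python. This follows the simple model described above, in which the fee is the gas used multiplied by the offered gas price; the function names and the pending transactions are hypothetical and the figures are purely illustrative.

```python
GWEI = 10 ** 9  # 1 gwei = 10^9 wei, where wei is the smallest unit of Ether


def transaction_fee(gas_used, gas_price):
    """Fee paid to the verifier: units of gas multiplied by the price per unit."""
    return gas_used * gas_price


def order_by_gas_price(pending):
    """A fee-maximising verifier considers the highest gas prices first."""
    return sorted(pending, key=lambda tx: tx["gas_price"], reverse=True)


# Hypothetical pending transactions with illustrative values.
pending = [
    {"sender": "alice", "gas_used": 21_000, "gas_price": 30 * GWEI},
    {"sender": "bob", "gas_used": 21_000, "gas_price": 80 * GWEI},
    {"sender": "carol", "gas_used": 50_000, "gas_price": 50 * GWEI},
]

ordered = order_by_gas_price(pending)
fees = {tx["sender"]: transaction_fee(tx["gas_used"], tx["gas_price"]) for tx in ordered}
```

In this example bob's transaction is placed first because he offers the highest price per unit of gas, even though carol's transaction pays the largest total fee.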

Smart contracts are programs written in EVM instructions and recorded on the blockchain. There are compilers available for many popular programming languages that can compile down to EVM instructions. Once a contract has been "deployed" to the network, it is immutable and the EVM code can be inspected by anyone. Contracts are able to interact with other contracts and can hold a balance. There are now thousands of Decentralised Applications running on the Ethereum network, ranging from simple savings contracts that allow a user to deposit funds to be returned at a later date, to more complex applications such as online casinos. In fact, digital currencies can be implemented as smart contracts and this has enabled the Ethereum blockchain to become home to thousands of other digital currencies.

Whilst Ethereum remains the most popular smart contract platform, many rival chains are now available. The majority of these chains also implement the EVM specification and hence smart contracts designed for the Ethereum chain can be deployed with minimal modification. These rival chains aim to offer additional features, alternative consensus mechanisms such as PoS, or faster transaction times.

Applications in Smart Tourism

One definition of smart tourism reads: ‘Smart tourism is the act of tourism agents utilising innovative technologies and practices to enhance resource management and sustainability, whilst increasing the businesses overall competitiveness’ [22]. Peer-to-peer networks can bring a number of benefits to the smart tourism sector.

Peer-to-peer networks offer the ability to form ad hoc networks for information exchange even in areas that lack network infrastructure. This could be used by tourists in remote or underdeveloped destinations to communicate or locate attractions and services.

When abroad, many tourists need to obtain a supply of the local currency in order to participate in transactions. However, digital currencies could eliminate the need for a local currency supply. Service providers could accept digital currencies directly, or exchanges could provide rapid conversion to a local currency (or digital representation). Many services such as ordering taxis or food are already provided via smartphone applications and adapting them to accept digital currency payments would be straightforward.

Blockchains could be used to help tourism providers such as travel agents, airlines, hotels, attractions and restaurants share data regarding tourists in a more intelligent, secure and privacy-respecting way. This data could be used to enhance the tourist experience, for example by managing queue times, to improve marketing and to make travelling more convenient by ensuring that destinations like hotels already have the information they need.

Blockchains could also be used to eliminate travel documentation such as passports, visas and tickets entirely. These documents could instead be represented by Non-Fungible Tokens (NFTs) on the blockchain. As opposed to digital currency tokens, which are fungible, NFTs are tokens that are unique and not equivalent to any other token. They can be used to represent ownership of a unique asset on the blockchain, such as a piece of art or a plane ticket.
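The difference between fungible and non-fungible tokens comes down to what the ledger records. The Python sketch below is a plain in-memory illustration, not a smart contract: the class names, method names and the ticket identifier are all hypothetical.

```python
class FungibleLedger:
    """Fungible tokens: only the amount each owner holds is recorded."""

    def __init__(self):
        self.balances = {}  # owner -> number of interchangeable tokens

    def mint(self, owner, amount):
        self.balances[owner] = self.balances.get(owner, 0) + amount

    def transfer(self, sender, recipient, amount):
        if self.balances.get(sender, 0) < amount:
            raise ValueError("insufficient balance")
        self.balances[sender] -= amount
        self.balances[recipient] = self.balances.get(recipient, 0) + amount


class TicketRegistry:
    """Non-fungible tokens: each unique token ID has exactly one owner."""

    def __init__(self):
        self.owners = {}  # token ID -> current owner

    def mint(self, token_id, owner):
        if token_id in self.owners:
            raise ValueError("token already exists")
        self.owners[token_id] = owner

    def transfer(self, token_id, sender, recipient):
        if self.owners.get(token_id) != sender:
            raise PermissionError("sender does not own this token")
        self.owners[token_id] = recipient


coins = FungibleLedger()
coins.mint("alice", 10)
coins.transfer("alice", "bob", 4)  # any 4 coins will do

tickets = TicketRegistry()
tickets.mint("flight-123-seat-14A", "alice")
tickets.transfer("flight-123-seat-14A", "alice", "bob")  # this exact ticket moves
```

A plane ticket fits the second model because seat 14A on one flight cannot be swapped for an arbitrary other ticket, whereas one coin is as good as any other.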

Future

Today's society as a whole largely reflects the client-server model, with large corporations such as Google and Amazon providing services to people. As a result of this, the internet is largely controlled by a small number of powerful corporations who provide the physical infrastructure, routers and servers. The cloud server market is particularly worrying, as just three companies (Amazon, Google and Microsoft) control 60% of it [21]. This gives them a lot of control over what content appears on the internet and who gets the fastest connections, as well as the ability to shut down servers as they please. The internet has been constructed in a top-down approach. Peer-to-peer networks offer the opportunity to rebuild the internet with a bottom-up approach where users are more in control of the content and data they share.

Similarly, in the financial industry, a relatively small number of banks control access to most of the population's assets. Many financial opportunities and markets are not available to smaller players. Central banks and governments are able to manipulate markets and the economy through regulation and monetary policy and can inflate their currencies at will. Digital currencies offer the ability to hold an asset that cannot be manipulated through governmental pressures and that does not require intermediaries such as banks. Smart contracts are already beginning to offer many of the services traditionally offered by the financial industry which, in the realm of digital currencies, are often referred to as "decentralised finance" or "DeFi". If these areas see mass adoption, they could bring an end to government-controlled fiat currencies and open up access to more opportunities for individual players.

The applications of smart contracts and indeed blockchain are not limited to the financial industry. There are already decentralised applications on the Ethereum platform with a variety of non-financial offerings such as games, casinos and cloud storage providers. Blockchain applications in a wide selection of industries are already being proposed. In the medical industry, they can help hospitals and researchers share data whilst respecting privacy. For the energy industry, they can help improve market efficiency and provide better data and predictions. Privacy advocates propose a decentralised identity provider based on blockchain technology where the chain can vouch for who you are without releasing sensitive data to service providers. Blockchain is a young technology and these ideas are just the start; many of blockchain's finest applications may be beyond imagining for now.

Advances in modern wireless communication technology could give peers the ability to establish mesh peer-to-peer networks across wider areas without relying on traditional web infrastructure. This could be particularly useful for mobile devices such as smartphones or connected cars. Mass adoption of this type of technology could lead to a future in which there is a parallel global internet. This would be most useful if a standardised network stack similar to TCP/IP could be established for peer-to-peer networks. A common network standard would enable multiple peer-to-peer protocols and applications to use a single general purpose peer-to-peer network rather than a new network developing around a protocol as is common today.

Evaluation

Strengths

- Resilience - No single point of failure. The failure of a single peer (or a fraction of the peers) in a P2P network will not bring the whole network down.

- Inexpensive - No need for dedicated servers and existing devices can connect to the network.

- Cost Reduction - As most peers will be maintained by their users and less infrastructure is required, the number of technical support staff can be reduced.

- Self-scaling - As more peers join the network and consume services, more peers are available to provide services.

- Decentralised - No central power or authority can control the network which makes censorship and regulation challenging or impossible. No single component to bottleneck the network.

- Ad-hoc - Does not rely on pre-existing infrastructure.

- Self-organising - P2P networks can form and grow without planning or co-ordination.

- Adaptability - New clients of any type can easily join the network.

- Transparency - P2P networks are more transparent than traditional networks and peers are aware of the local network topology.

- DDoS-proof - "Pure" P2P networks are almost entirely immune to Distributed Denial of Service (DDoS) attacks.

Weaknesses

- Security - P2P networks often enable other peers to utilise their hardware in some way, bringing new security challenges.

- Web is built for Client-Server - Asymmetric connections and the topology of the internet are challenges for P2P networks. Most peers are at the internet edge but cannot communicate with each other without routing through some centralised entity.

- Interoperability - Most P2P networks only support a single specialised protocol. As a result, new applications often need to build entirely new networks rather than being built on top of an existing network.

- Finding resources - It can be very difficult to locate resources on a P2P network without resorting to a centralised index.

- Lack of Control - The lack of a central authority means that it can be difficult to stop peers from engaging in undesirable behaviour such as using up all the bandwidth or sharing illegal content.

- Lack of Planning - The formation of P2P networks is usually unplanned and ad-hoc, meaning the topology, size, connections, stability, throughput and location of the network cannot be planned or predicted.

- Uncooperative behaviour - Users can undermine the cooperative nature of P2P networks by consuming a service without providing one, which can create a resource imbalance and bottlenecks. Users who download files but do not remain connected long enough to seed are called "leechers" in the BitTorrent protocol.
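
The uncooperative behaviour described above can be made concrete with a share ratio, i.e. bytes uploaded divided by bytes downloaded. The sketch below is only an illustration: the `PeerStats` class, the `find_free_riders` helper and the `min_ratio` threshold of 0.5 are assumptions made for this example and are not part of the BitTorrent protocol.

```python
from dataclasses import dataclass


@dataclass
class PeerStats:
    """Hypothetical per-peer transfer counters."""
    name: str
    uploaded_bytes: int
    downloaded_bytes: int

    @property
    def share_ratio(self) -> float:
        # A peer that has downloaded nothing cannot be free-riding.
        if self.downloaded_bytes == 0:
            return float("inf")
        return self.uploaded_bytes / self.downloaded_bytes


def find_free_riders(peers, min_ratio=0.5):
    """Return the names of peers whose share ratio is below `min_ratio`."""
    return [peer.name for peer in peers if peer.share_ratio < min_ratio]


peers = [
    PeerStats("alice", uploaded_bytes=800, downloaded_bytes=1000),
    PeerStats("bob", uploaded_bytes=0, downloaded_bytes=1000),
    PeerStats("carol", uploaded_bytes=100, downloaded_bytes=0),
]

print(find_free_riders(peers))
```

Here only "bob" falls below the threshold, having downloaded without uploading anything. A real network would have to decide what to do with such peers, and self-reported counters like these could be falsified, so this is a way of stating the problem rather than a solution to it.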

Opportunities

- Coverage - P2P networks offer the ability to extend network coverage to areas that lack infrastructure.

- New Networks - The creation of a wide area P2P internet could bring great opportunities for technologies and applications.

- New Markets - P2P networks and particularly cryptocurrencies have created a variety of new markets.

- Censorship-resistant - P2P networks can be used to share censored information in restrictive regimes.

- Accessibility - P2P networks could provide the ability for users to connect without paying ISPs, and to do so using lower-cost devices.

- Cost Cutting - P2P technologies such as digital currencies provide the opportunity to cut costs by cutting out intermediaries, brokers and middlemen.

- Individual Control - P2P networks are an opportunity to take some of the power that has been consolidated by large technology companies and governments back into the hands of the individual.

- Data - P2P technologies such as blockchain offer the ability to manage data more efficiently and transparently.

Threats

- Security - P2P network users may be outside the protection of security measures such as firewalls and may be more exposed to malicious actors, which could discourage user adoption.

- Legal - Illegal activity on P2P networks could bring reputational damage and perhaps legal action against the protocol.

- Regulation - Regulatory action against some of the more revolutionary P2P ideas could reduce profitability and productivity and discourage user adoption.

- Uncooperative Traffic - A large influx of peers consuming resources without providing any could quickly overwhelm the network, making it unproductive.

- Infrastructure - Today's web infrastructure is optimised towards the client-server model and may be unable to deal with the volume of bi-directional traffic that mass adoption could bring.

- Centralisation - Reliance on centralised services or components can reduce the benefits of P2P networks.

- Resistance - Much of today's internet infrastructure is consolidated under a small number of private corporations that may be unwilling to allow P2P protocols to operate.

- Access - ISPs often block BitTorrent traffic and may be willing to block traffic from other P2P protocols in the future.

Take Advantage of Peer-to-Peer Networks

- Use P2P technology to remove brokers and intermediaries.

- Blockchain should be used when working with transparent, authoritative, permanent, traceable and verifiable records that many people need read and write access to.

- Organisations that want complete control or identifiable participants can still take advantage of blockchain by using private or permissioned blockchains.

- Critical distributed systems can avoid a single point of failure and take advantage of the resilience and decentralisation offered by P2P networks.

- P2P networks are perfect when you can't trust central authorities such as governments or cloud providers.
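
To show why a blockchain suits permanent and verifiable records, the following is a minimal sketch of a hash chain, where each record stores the hash of the one before it. All names (`block_hash`, `append_block`, `is_valid`, `ledger`) are invented for this example. It deliberately leaves out consensus, signatures and networking, so it demonstrates tamper-evidence only and is not a working blockchain.

```python
import hashlib
import json

GENESIS_PREVIOUS_HASH = "0" * 64  # placeholder used by the first block


def block_hash(index, data, previous_hash):
    """Hash the block contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "previous_hash": previous_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain, data):
    """Append a new block that links back to the current last block."""
    index = len(chain)
    previous_hash = chain[-1]["hash"] if chain else GENESIS_PREVIOUS_HASH
    chain.append({
        "index": index,
        "data": data,
        "previous_hash": previous_hash,
        "hash": block_hash(index, data, previous_hash),
    })


def is_valid(chain):
    """Recompute every hash and check each link to the previous block."""
    expected_previous = GENESIS_PREVIOUS_HASH
    for index, block in enumerate(chain):
        if block["index"] != index or block["previous_hash"] != expected_previous:
            return False
        recomputed = block_hash(block["index"], block["data"], block["previous_hash"])
        if block["hash"] != recomputed:
            return False
        expected_previous = block["hash"]
    return True


ledger = []
append_block(ledger, "alice pays bob 5")
append_block(ledger, "bob pays carol 2")
print(is_valid(ledger))

ledger[0]["data"] = "alice pays bob 500"  # tamper with an old record
print(is_valid(ledger))
```

Editing an earlier record changes its hash, which no longer matches what was stored, so the check fails. In a real blockchain many peers hold copies of the chain and agree on new blocks through a consensus mechanism, which this sketch does not model.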

References

| [1] | R. Schollmeier, "A definition of peer-to-peer networking for the classification of peer-to-peer architectures and applications," Proceedings First International Conference on Peer-to-Peer Computing, 2001, pp. 101-102. |

| [2] | Wikipedia, "Peer-to-Peer", accessed on 15/11/2021, https://en.wikipedia.org/wiki/Peer-to-peer. |

| [3] | Nelson Minar & Marc Hedlund, "Chapter 1. A Network of Peers: Peer-to-Peer Models Through the History of the Internet", Peer-to-Peer by Andy Oram, Chapter 1, O'Reilly Media, Inc., 2001. |

| [4] | Wikipedia, "Telnet", accessed on 15/11/2021, https://en.wikipedia.org/wiki/Telnet. |

| [5] | Wikipedia, "File Transfer Protocol", accessed on 15/11/2021, https://en.wikipedia.org/wiki/File_Transfer_Protocol. |

| [6] | Wikipedia, "Usenet", accessed on 15/11/2021, https://en.wikipedia.org/wiki/Usenet. |

| [7] | Wikipedia, "DNS", accessed on 15/11/2021, https://en.wikipedia.org/wiki/Domain_Name_System. |

| [8] | F. Marozzo, D. Talia, P. Trunfio, "A sleep-and-wake technique for reducing energy consumption in BitTorrent networks", Concurrency and Computation: Practice and Experience Volume 32, Issue 14, 2020. |

| [9] | Wikipedia, "BitTorrent", accessed on 16/11/2021, https://en.wikipedia.org/wiki/BitTorrent. |

| [10] | Wikipedia, "BitTorrent Tracker", accessed on 16/11/2021, https://en.wikipedia.org/wiki/BitTorrent_tracker. |

| [11] | Wikipedia, "Peer Exchange", accessed on 16/11/2021, https://en.wikipedia.org/wiki/Peer_exchange. |

| [12] | I. Lin & T. Liao, "A Survey of Blockchain Security Issues and Challenges", 2017. |

| [13] | IP Specialist, "How Blockchain Technology Works", accessed on 16/11/2021, https://medium.com/@ipspecialist/how-blockchaintechnology-works-e6109c033034, 2019. |

| [14] | C. C. Agbo, Q. H. Mahmoud, J. M. Eklund, "Blockchain Technology in Healthcare: A Systematic Review", 2019. |

| [15] | K. Rosenbaum, "Grokking Bitcoin", 2019. |

| [16] | D. Yaga, P. Mell, N. Roby, K. Scarfone, "Blockchain Technology Overview", 2019. |

| [17] | Satoshi Nakamoto, "Bitcoin: A Peer-to-Peer Electronic Cash System", 2008. |

| [18] | CoinMarketCap, accessed on 16/11/2021, https://coinmarketcap.com/. |

| [19] | S. Wang, Y. Yuan, X. Wang, J. Li, R. Qin, F. Wang, "An Overview of Smart Contract: Architecture, Applications and Future Trends", 2018. |

| [20] | Vitalik Buterin, "Ethereum Whitepaper", 2013. |

| [21] | Statista, "Cloud infrastructure services vendor market share worldwide from 4th quarter 2017 to 3rd quarter 2021", accessed on 17/11/2021, https://www.statista.com/statistics/967365/worldwide-cloud-infrastructure-services-market-share-vendor/. |

| [22] | H. Stainton, "Smart tourism explained: What, why and where", accessed on 17/11/2021, https://tourismteacher.com/smart-tourism/, 2020. |