Extending Nand2Tetris

What follows is my 2022 St Andrews dissertation on applying a number of modern CPU optimization techniques to the simple 16-bit "Hack" platform described by Noam Nisan and Shimon Schocken in their book "The Elements of Computing Systems" (Nand2Tetris). The book takes students on a journey of discovery, first implementing fundamental logic gates from NAND gates, then constructing a simple CPU from fundamental logic gates, and finally covering the full software stack from assembler to compiler to operating system. I highly recommend the book to anyone who wants to enhance their computer science knowledge from first principles.

PDF version here.

Abstract

Modern CPUs are extremely complicated devices making use of a variety of clever optimisation techniques. This project aims to provide an understanding of some of these optimisation techniques by implementing them on a simple computer architecture. The hardware platform Hack, presented by Noam Nisan and Shimon Schocken in their 2005 textbook “The Elements of Computing Systems” (also known as Nand2Tetris), was selected. After providing an overview of Hack and of 5 optimisations (memory caching, pipelining, pipeline forwarding, branch target prediction and branch outcome prediction), a simulator for the platform is developed. The simulator provides an elegant mechanism for developing CPU optimisations and is used to collect data so that the performance impact of each of the optimisations can be explored and compared. Data from 20,000 runs of the simulator is analysed and presented graphically. The contribution of this project is to compare and contrast some of the most important modern CPU optimisations.

Dedication

For Spike, who spent many an hour watching me work on this project and others. You will be missed.

Acknowledgements

Many thanks to Noam Nisan and Shimon Schocken for their excellent book. I found it hugely educational and interesting, and it has changed my entire view of computers. Thanks to Shimon in particular for his email providing advice and encouragement regarding this project. I have tried to complete the book many times since I first acquired it over 10 years ago, eventually completing it after being inspired by Tom Spink’s Computer Architecture course at the University of St Andrews. Many thanks to Tom for his outstanding course and all of his support, advice and expertise freely given during this project. As always, many thanks to my wonderful girlfriend and family for all their support and proofreading.

Introduction

Modern CPU designers rely on a range of clever optimisation techniques to improve the performance of their chips. This inclusion of so many optimisations on an already complex device makes understanding modern CPUs and CPU optimisations a challenge. This project aims to present a simple computer architecture and then implement some of the most important modern CPU optimisations on the simple platform. Once this has been done, the performance impact of each of the optimisations can be examined and compared.

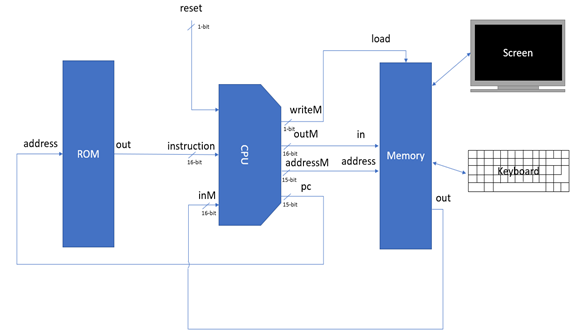

The platform used in the project is called Hack and is a simple 16-bit Harvard architecture. Hack is simple enough to be built and understood by students in a matter of weeks, but complex enough to demonstrate the principles of modern computing systems. The optimisations that will be implemented on the platform are: memory caching, pipelining, pipeline forwarding and branch prediction.

As part of the project, a simulator for the platform will be developed. This simulator will provide an elegant mechanism for optimising various areas of the CPU. The simulator will provide a means to enable and disable each optimisation and set any related parameters. The simulator will also gather a variety of performance statistics regarding each run.

The rest of this report begins with a context survey discussing background information related to the project such as a detailed overview of the Hack platform, discussions on a number of optimisation techniques, benchmarking and cycles per instruction. This is followed by a detailed description of the simulator platform. Next, 15 benchmarks are defined to test the platform. After this, a more detailed look at each of the optimisations explored by this project is taken. This is followed by a deep dive into the results gathered by the project, explored through the use of data visualisation. An insightful evaluation of this data is provided, before some ideas for future work are presented and the report is concluded. This document includes 5 appendices. Appendix A provides links to the source code referred to throughout this report, appendices B and C provide a more detailed exploration of the hardware and software implementations of the Hack platform respectively, appendix D takes a brief look at some possible software optimisations and finally appendix E deals with the ethics of this project.

Context Survey

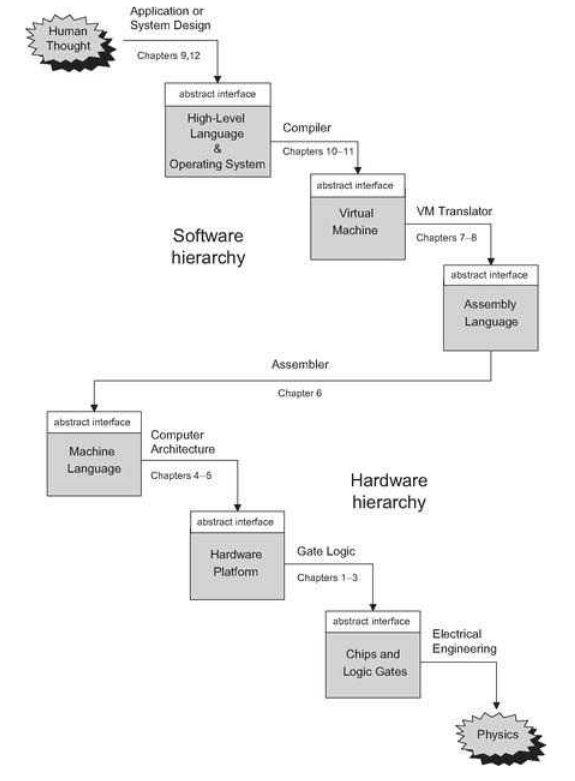

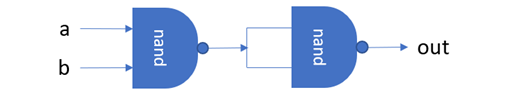

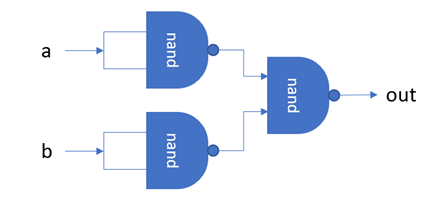

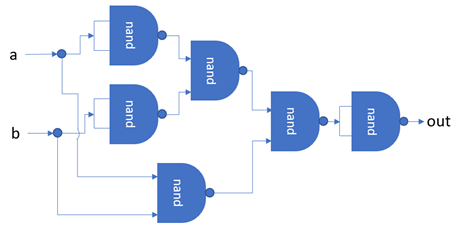

The Elements of Computing Systems

The Elements of Computing Systems [1] is a 2005 textbook written by Noam Nisan and Shimon Schocken. The authors assert that the complexity of modern computers and the segmented, specialised approach of a modern computer science education deprive the student of a bigger-picture understanding of how all of the hardware and software components of a computer system fit together. The authors aim to remedy this by giving students the opportunity to build a computing system from first principles, gradually constructing a hardware platform and software hierarchy, as illustrated by Figure 2.

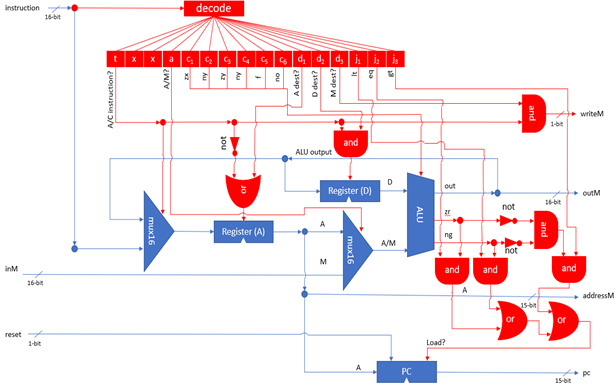

The platform constructed during the book is called Hack. Hack is a simple 16-bit Harvard architecture that can be built from elementary NAND gates and Data Flip-Flops. The instruction set is extremely simple with a focus on hardware simplicity. Programs are supplied to the computer in the form of replaceable ROM chips, similar to some cartridge-based games consoles. Despite this, the computer is powerful and building it is an extremely informative journey.

For the rest of this report, “The Elements of Computing Systems” will be referred to simply as “the book”.

Hack Architecture

Hack is a simple 16-bit Harvard architecture designed to be simple enough to be built and understood by students in a few weeks, but complex enough to demonstrate the inner workings of modern computer systems.

The platform is equipped with 3 physical registers and 1 "virtual" register. Memory is divided into program memory (ROM) and data memory (RAM). ROM can be read but not modified during runtime whilst the system has full access to RAM. ROM and RAM are each addressed via a separate 15-bit address bus, meaning there are 32,768 possible locations in each unit. All 32,768 are available for ROM, giving a maximum program size of 32,768 instructions (although the simulator includes a mode enabling significantly more instructions). However, only 24,577 of these locations (addresses 0 – 24,576) are available in RAM. Every memory location in both ROM and RAM, as well as each of the registers, holds a 16-bit two's complement binary number.

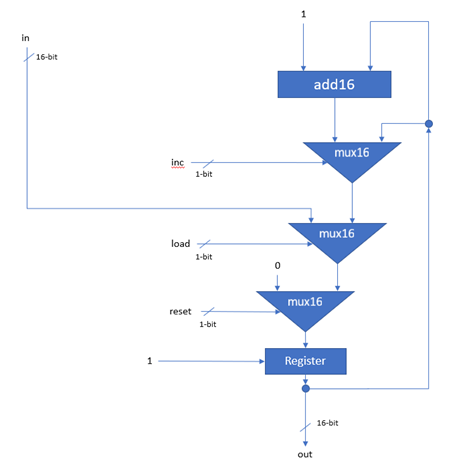

The first of the 3 physical registers is a special register called the Program Counter (PC). The PC stores the address of the next instruction to be executed by the system. When execution of a new instruction begins, the instruction at ROM[PC] is fetched and the program counter is incremented by one. Programmers cannot directly manipulate the PC register, but it can be reset to 0 by asserting the CPU's reset pin, or indirectly manipulated through the use of jump commands.

The remaining physical registers are called A and D. The D register is a general-purpose data register, whilst the A register can be used as either a general-purpose data register, or as an address register. The M register is a "virtual" register that always refers to the memory location RAM[A]. Programmers can manipulate arbitrary memory locations by first setting the A register to the desired address and then reading or writing to the M register.
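The relationship between A and M can be sketched in a few lines of Rust. This is only an illustrative model, not the simulator's actual code: the `Hack` struct and its method names are hypothetical, and the RAM is sized for the full 15-bit address space purely for simplicity.

```rust
/// Hypothetical model of the programmer-visible A, D and M registers.
struct Hack {
    a: i16,
    d: i16,
    ram: Vec<i16>,
}

impl Hack {
    fn new() -> Self {
        // Assumption: allocate the whole 15-bit address space for simplicity.
        Hack { a: 0, d: 0, ram: vec![0; 32_768] }
    }

    /// Only the low 15 bits of A are used as an address.
    fn address(&self) -> usize {
        (self.a as u16 & 0x7FFF) as usize
    }

    /// Reading the "virtual" M register reads RAM[A].
    fn m(&self) -> i16 {
        self.ram[self.address()]
    }

    /// Writing the "virtual" M register writes RAM[A].
    fn set_m(&mut self, value: i16) {
        let address = self.address();
        self.ram[address] = value;
    }
}
```

Setting `a` to 100 and then calling `set_m(42)` stores 42 in `ram[100]`, which mirrors the two-step pattern of loading an address into A and then using M.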

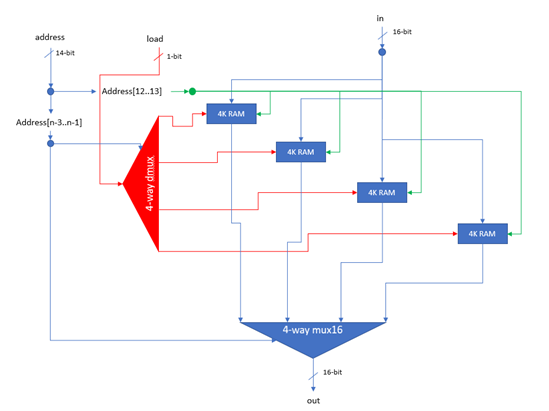

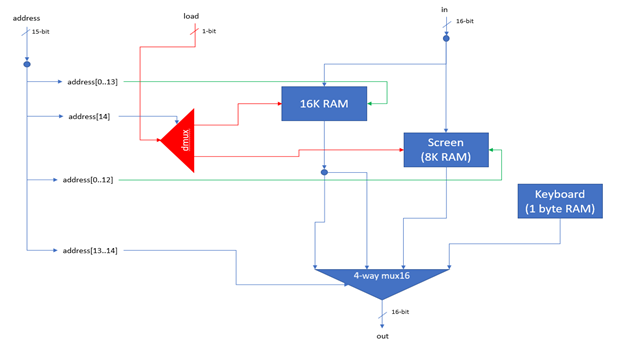

The RAM is divided up into two sections. The first section spans addresses 0 – 16,383, making it 16K words in size. This section is general purpose RAM and its exact usage is defined by the software implementation. The second section spans addresses 16,384 – 24,576 (8K words + 1 word) and consists of two memory maps. The Hack platform is equipped with a 256 (height) by 512 (width) monochrome display. This screen is represented by the first memory map, which is 8K words in size and spans addresses 16,384 – 24,575. Each of these addresses represents 16 consecutive pixels within a row, where a pixel can be illuminated by asserting the corresponding bit within the memory location. The pixel at row r (from the top) and column c (from the left) is represented by the c%16 bit of the word found at Screen[r*32+c/16]. The final address, 24,576, is a memory map representing the platform's keyboard. When a key is pressed, the corresponding key code is written to this memory location.
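The pixel addressing rule can be written out as a small Rust sketch. The function and constant names are hypothetical, and the code assumes that bit 0 is the least significant bit of the word.

```rust
const SCREEN_BASE: usize = 16_384; // first address of the screen memory map
const WORDS_PER_ROW: usize = 32; // 512 pixels per row / 16 pixels per word

/// Returns the (RAM address, bit index) holding the pixel at row `r`, column `c`.
fn pixel_location(r: usize, c: usize) -> (usize, u32) {
    assert!(r < 256 && c < 512, "pixel outside the 256 x 512 display");
    (SCREEN_BASE + r * WORDS_PER_ROW + c / 16, (c % 16) as u32)
}

/// Illuminates a pixel by asserting its bit in the screen memory map.
/// Assumption: bit 0 is the least significant bit of the word.
fn set_pixel(ram: &mut [u16], r: usize, c: usize) {
    let (address, bit) = pixel_location(r, c);
    ram[address] |= 1 << bit;
}
```

The bottom-right pixel (row 255, column 511) maps to bit 15 of address 24,575, the last word of the screen memory map.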

The instructions for the Hack platform are divided into two types: A-instructions and C-instructions. When written in assembly notation, A-instructions take the form:

@constant

Where constant is a non-negative integer. A-instructions are used to load constants directly into the A register. C-instructions take the form:

dest=comp;jump

As can be seen from the above, each C-instruction contains the fields dest, comp and jump. The dest and jump fields are optional, whilst the comp field is mandatory. This means that a C-instruction could also take the form:

dest=comp

Or:

comp;jump

Or even:

comp

This last form would not achieve anything, however, and would be equivalent to a NOP (no operation).
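As an illustration of how the optional fields can be separated, the following Rust sketch splits a C-instruction into its three parts. It is not the project's assembler; the `CInstruction` type and `parse_c_instruction` function are hypothetical names, and no validation of the mnemonics is attempted.

```rust
/// The three textual fields of a C-instruction; `dest` and `jump` are optional.
#[derive(Debug, PartialEq)]
struct CInstruction<'a> {
    dest: Option<&'a str>,
    comp: &'a str,
    jump: Option<&'a str>,
}

fn parse_c_instruction(line: &str) -> CInstruction<'_> {
    let line = line.trim();
    // Everything after ';' (if present) is the jump field.
    let (rest, jump) = match line.split_once(';') {
        Some((rest, jump)) => (rest, Some(jump.trim())),
        None => (line, None),
    };
    // Everything before '=' (if present) is the dest field.
    let (dest, comp) = match rest.split_once('=') {
        Some((dest, comp)) => (Some(dest.trim()), comp.trim()),
        None => (None, rest.trim()),
    };
    CInstruction { dest, comp, jump }
}
```

For example, `parse_c_instruction("D=D+A;JGT")` yields a dest of `D`, a comp of `D+A` and a jump of `JGT`, whilst `parse_c_instruction("0;JMP")` has no dest field.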

The comp field instructs the ALU to compute a value which can be any of the mnemonics shown in Table 1, where !, | and & represent the logical NOT, OR and AND operators respectively.

Table 1 Assembly mnemonics for the comp field.

| | | | | | | |
| --- | --- | --- | --- | --- | --- | --- |
| 0 | 1 | -1 | D | A | !D | !A |
| -D | -A | D+1 | A+1 | D-1 | A-1 | D+A |
| D-A | A-D | D&A | D\|A | M | !M | -M |
| M+1 | M-1 | D+M | D-M | M-D | D&M | D\|M |

The dest field instructs the CPU where to store the output of the ALU. This field is optional and can be left blank, in which case the output will not be stored anywhere. If it is set, it can be any one or combination of the A, D and M registers.

The jump field instructs the CPU which instruction to execute next. This field is optional and can be left blank, in which case the CPU proceeds to the next instruction as usual. If the field is set, it can hold one of the 7 values shown in Table 2.

Table 2 Assembly jump mnemonic along with effect.

| Assembly mnemonic | Effect |
| --- | --- |
| JMP | Jump to ROM[A] unconditionally |
| JGT | Jump to ROM[A] if out > 0 |
| JEQ | Jump to ROM[A] if out == 0 |
| JGE | Jump to ROM[A] if out >= 0 |
| JLT | Jump to ROM[A] if out < 0 |
| JNE | Jump to ROM[A] if out != 0 |
| JLE | Jump to ROM[A] if out <= 0 |

*Where out refers to the output of the ALU.
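The jump conditions in Table 2 can be expressed directly as a match over the ALU output. This is a minimal sketch rather than the simulator's Jump Unit: the `Jump` enum, `should_jump` and `next_pc` are hypothetical names, and `NoJump` stands for a blank jump field.

```rust
/// The jump field of a C-instruction; `NoJump` models a blank field.
#[derive(Clone, Copy, Debug)]
enum Jump {
    NoJump,
    Jgt,
    Jeq,
    Jge,
    Jlt,
    Jne,
    Jle,
    Jmp,
}

/// Decides whether the jump is taken, given the ALU output `out`.
fn should_jump(jump: Jump, out: i16) -> bool {
    match jump {
        Jump::NoJump => false,
        Jump::Jgt => out > 0,
        Jump::Jeq => out == 0,
        Jump::Jge => out >= 0,
        Jump::Jlt => out < 0,
        Jump::Jne => out != 0,
        Jump::Jle => out <= 0,
        Jump::Jmp => true,
    }
}

/// The next PC is the address in A when the jump is taken, otherwise PC + 1.
fn next_pc(pc: u16, a: u16, jump: Jump, out: i16) -> u16 {
    if should_jump(jump, out) {
        a
    } else {
        pc.wrapping_add(1)
    }
}
```

With `pc = 10` and `a = 200`, an unconditional `JMP` gives a next PC of 200, whereas a blank jump field gives 11.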

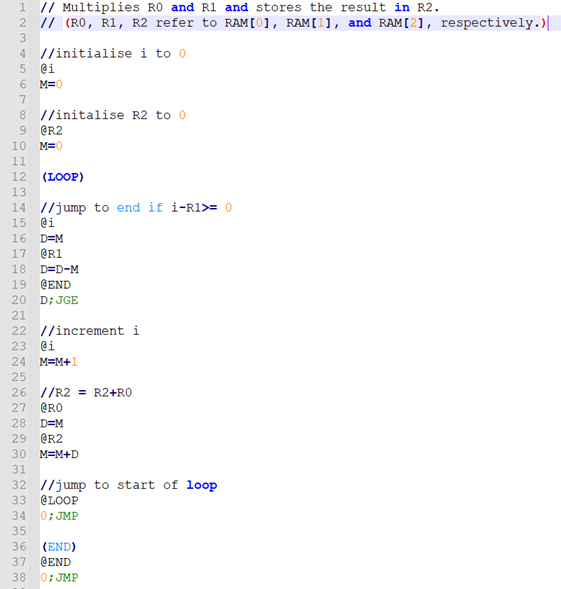

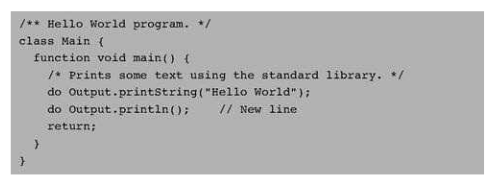

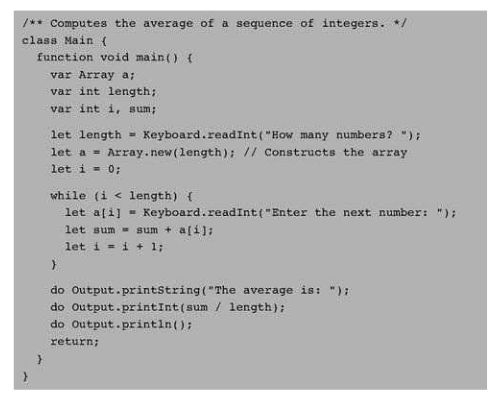

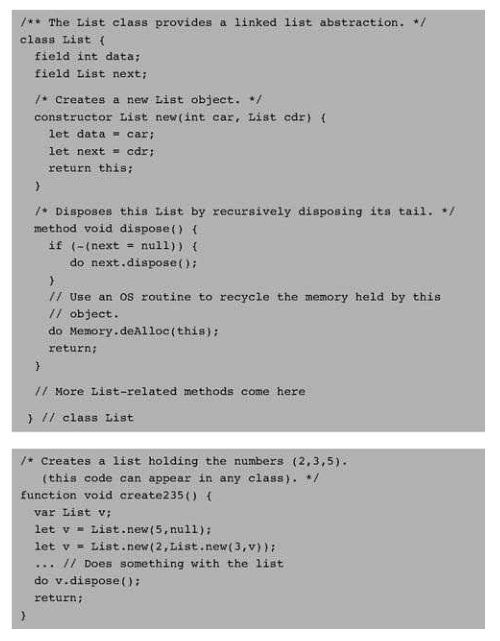

The software hierarchy of the Hack platform consists of five components: an assembler, a Virtual Machine (VM) translator, a high-level language compiler, an operating system and finally a program. The assembler, VM translator and compiler are all written in the Rust programming language, whilst the operating system and programs are written in the Jack programming language.

The assembler performs the job of translating the assembly language described above into binary machine code that the Hack platform can run directly. This is a trivial task, as each of the fields in a C-instruction has a one-to-one mapping to binary, whilst A-instructions simply involve expressing the constant to be loaded as a 15-bit binary number. The task of the assembler is made slightly less trivial due to its support for symbols. The Hack assembly language supports two types of symbols: labels and variables. Labels are used to refer to another part of the program and are translated to program memory (ROM) addresses. Variables are translated to data memory (RAM) addresses; by supporting variable symbols, the assembler relieves the programmer of the burden of manually assigning and keeping track of which variables refer to which memory locations.
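A rough Rust sketch of these two assembler duties is given below. It is not the project's assembler and all names are hypothetical. Two details are assumptions taken from the book's assembler specification rather than from the text above: an A-instruction is encoded as a leading 0 bit followed by the 15-bit constant, and variables are allocated from RAM[16] upwards.

```rust
use std::collections::HashMap;

/// Encodes `@constant` as 16 binary digits, or `None` if the constant does not fit in 15 bits.
/// Assumption: the leading bit of an A-instruction is 0.
fn encode_a_instruction(constant: u16) -> Option<String> {
    if constant > 0x7FFF {
        return None;
    }
    Some(format!("{:016b}", constant))
}

/// Maps labels to ROM addresses and variables to RAM addresses.
struct SymbolTable {
    symbols: HashMap<String, u16>,
    next_variable: u16,
}

impl SymbolTable {
    fn new() -> Self {
        // Assumption: the first free RAM address for variables is 16.
        SymbolTable { symbols: HashMap::new(), next_variable: 16 }
    }

    /// Records a label together with the ROM address it refers to.
    fn add_label(&mut self, name: &str, rom_address: u16) {
        self.symbols.insert(name.to_string(), rom_address);
    }

    /// Looks up a symbol, allocating a new variable the first time an unknown name is seen.
    fn resolve(&mut self, name: &str) -> u16 {
        if let Some(&address) = self.symbols.get(name) {
            return address;
        }
        let address = self.next_variable;
        self.symbols.insert(name.to_string(), address);
        self.next_variable += 1;
        address
    }
}
```

Labels would be registered in a first pass over the program so that every remaining unknown symbol met in the second pass can safely be treated as a variable.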

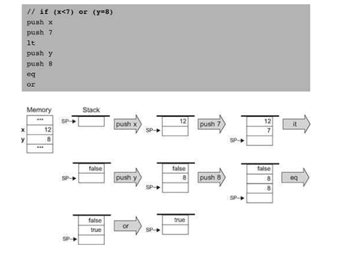

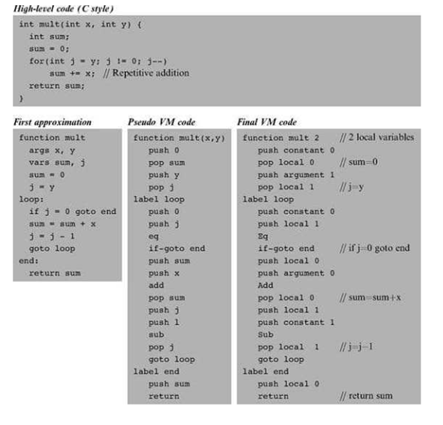

Whilst it is possible to compile directly from a high-level language (such as Jack) to assembly, today it is more popular to first translate the high-level language into an intermediate language, then later translate the intermediate language to assembly. This approach enables abstraction, reducing the amount of work the compiler must do, and also improves portability. The Hack platform specifies a stack-based Virtual Machine (VM) that runs an intermediate language referred to as VM language/code. The stack-based nature of the VM implementation provides an intuitive mechanism for function calling and elegantly handles most of the memory management needs of the system. Some VM implementations, such as the Java VM, act as an interpreter/runtime for the VM code. This differs from the approach taken by this project, in which the VM translator program translates VM code into assembly code that exhibits the same behaviour, so the VM code simply acts as an intermediate representation.

The compiler used in this project translates programs written in Jack into programs that can run on the VM implementation described above. The Jack programming language is a simplistic, weakly-typed, object-oriented, C-like language. Both the operating system and the programs that run on the Hack platform are typically written in Jack, although there’s no reason a compiler for another programming language couldn’t be implemented for the Hack platform. All of the benchmarks used as part of this project have been written in Jack.

Modern Operating Systems (OS) are typically extremely complicated programs that must offer a wide variety of services to programs running on top of them, whilst also managing the system’s resources and enabling multiple programs (and possibly users) to run simultaneously and without interfering with each other. Luckily the OS utilised in this project does not need to be as complex, due in no small part to the fact that the Hack platform is single-user and single-tasking. Our OS does not even need to be capable of loading programs, as all programs are supplied in the form of replaceable ROM cartridges. In fact, the OS utilised by this project is more of a standard library for the Jack programming language than it is an OS. This is the only part of the project that has not been implemented (yet!) by the author. The OS used in this project is called Jack OS and is supplied with the book.

For more information on the hardware or software platform, please see Appendix B: Hardware Implementation or see Appendix C: Software Implementation respectively.

Optimisations

Hardware designers and CPU architects are in a constant race to build the fastest possible processors. Whilst performance improvements can come from increasing clock speeds and reducing the size of the underlying transistors, most performance improvements come from efficient design and clever optimisations. Hardware manufacturers must compete to build the highest performance chips at the lowest possible price and are therefore constantly looking for new ways to optimise their designs. General purpose CPUs are exposed to a significant and varied volume of code, some of which is badly written, which makes optimisations at a hardware level even more important. This is partly because the hardware designers do not know ahead of time exactly what the CPU will be used for and must be reasonably confident that the CPU will be able to handle its workload efficiently. Even when the code being executed is well written, there is only so much that can be done through software optimisations. Table 3 below summarises a number of optimisation techniques employed in modern CPUs.

Table 3 CPU optimisations summary.

| Optimisation | Description | Resource(s) |
| --- | --- | --- |
| Memory Caches | Computers spend a lot of time waiting on memory, and faster memory is more expensive than slower memory. One possible solution is to arrange memory into hierarchies, with the entire memory space available in the largest, slowest memory at the bottom and incrementally faster and smaller memory stacked on top of it, each level containing a subset of the memory below it. | [2], [3], [11] |
| Pipelining | Pipelining is an optimisation technique where instructions are split into a number of smaller/simpler stages. Most modern CPUs implement some variant of a pipeline. The “classic RISC pipeline” splits each instruction into 5 stages: Instruction Fetch, Instruction Decode, Execution, Memory Access, Register Write Back. The use of this pipeline enables Instruction Level Parallelism (ILP) by performing each of these stages simultaneously on different instructions; i.e. whilst instruction i is in the WB stage, instruction i+1 is in the MEM stage, instruction i+2 is in the EX stage and so on. This can offer performance improvements and provides a foundation for further optimisations such as branch prediction and pipeline forwarding. | [5], [12] |
| Branch Prediction | Whenever a conditional branch statement is encountered, the CPU must determine the outcome of the branch before execution can continue. This can waste valuable cycles. Similarly, it may waste time determining the target of the branch. Both of these challenges can be alleviated through the use of prediction. These are actually two different tasks: branch outcome prediction and branch target prediction. | [4], [13], [14], [15] |
| Out-of-order execution | Dynamic scheduling allows the CPU to rearrange the order of instructions to reduce the amount of time the CPU spends stalled, whilst maintaining the original data flow ordering. Tomasulo’s Algorithm introduces the concept of register renaming and tracks when operands become available. Reservation Stations (RS) are used to facilitate the register renaming. RSs store the instruction, operands as they become available and the ID of other RSs which will provide the operand values (if applicable). Register specifiers are renamed within the RS to reduce data hazards. Instructions that store to registers are executed in program order to maintain data flow. Tomasulo’s Algorithm splits an instruction into three stages: issue, execute and write result. | [6], [16] |
| Speculative Execution | Speculative execution is a technique where the CPU executes instructions that may not be needed. This situation typically arises when a CPU does not yet know the outcome of a branch and begins speculatively executing a predicted execution path. The results of the execution are only committed if the prediction was correct. Committing in this case means allowing an instruction to update the register file; this should only happen when the instruction is no longer speculative. This requires an additional piece of hardware called the reorder buffer, which holds the results of instructions between execution completion and commit. If Tomasulo’s Algorithm is also in use, then the source of operands is now the reorder buffer instead of functional units. The reorder buffer enables instructions to be completed out-of-order, but makes the results visible in-order. | [7] |
| SIMD | Single Instruction Multiple Data (SIMD) is an example of data-level parallelism. SIMD makes use of vector registers and vector functional units to make it possible to execute the same instruction on multiple data items simultaneously. Vector functional units have multiple lanes, which means they can perform many scalar operations simultaneously, one per lane. The number of lanes available dictates the degree of parallelism available. | [8] |
| Multiple Cores | Multicore processors offer the ability to execute multiple threads of execution simultaneously by physically duplicating the CPU. This enables the CPU to run more than one program simultaneously; however, performance improvements within the same program are only possible if the program is aware of and takes advantage of the additional available core(s). | [9], [10] |

Benchmarks

Benchmarks are used to enable comparison between CPUs by scoring their performance on a standardized series of tasks [20]. Benchmarks are essential to evaluating the performance improvement derived by implementing an optimisation. Benchmarks can be broadly divided into two categories: synthetic and real-world. Synthetic benchmarks require the CPU to complete a series of tasks that simulate a high-stress workload. Examples of synthetic benchmarks include:

- PassMark: runs heavy mathematical tasks that simulate workloads such as compression, encryption and physics simulations

- 3DMark: measures the system's ability to handle 3D graphics workloads

- PCMark: scores the system on how well it can deal with business workflows

Real-world benchmarks are carried out by giving real programs a heavy workload and then measuring the amount of time taken to complete the task. Popular programs to run real-world benchmarks on include:

- 7-Zip: measures the CPU’s compression and decompression performance

- Blender: measures the CPU’s 3D rendering speeds

- Handbrake: measures the CPU’s video encoding speed

Synthetic benchmarks typically nest a code snippet within a loop. Timing libraries are used to measure the amount of time elapsed per iteration of the loop. The loop is run many times and elapsed time averaged in order to control for outliers [19].
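The loop-and-average pattern can be sketched in Rust using the standard library's `Instant` timer. This is a generic illustration rather than the harness used in this project; `mean_iteration_time` is a hypothetical helper name.

```rust
use std::time::{Duration, Instant};

/// Runs `snippet` `iterations` times and returns the mean elapsed time per iteration.
fn mean_iteration_time<F: FnMut()>(iterations: u32, mut snippet: F) -> Duration {
    assert!(iterations > 0, "at least one iteration is required");
    let start = Instant::now();
    for _ in 0..iterations {
        snippet();
    }
    // Averaging over many iterations smooths out individual outliers.
    start.elapsed() / iterations
}
```

A caller might pass a closure wrapping the code under test, for example `mean_iteration_time(1_000, || { /* snippet */ })`. In practice the compiler may optimise away a snippet whose result is unused, so the result usually needs to be consumed in some way.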

In addition to dividing benchmarks into synthetic and real-world categories, benchmarks can also be divided into micro and macro benchmarks. All of the benchmarking techniques discussed so far have been macro benchmarks. Macro benchmarks aim to test the performance of the entire CPU. In contrast, micro benchmarks aim to test only a single component of the CPU. This can be useful when evaluating the effect of parameters or strategies within a single component of the CPU.

SPEC CPU 2017

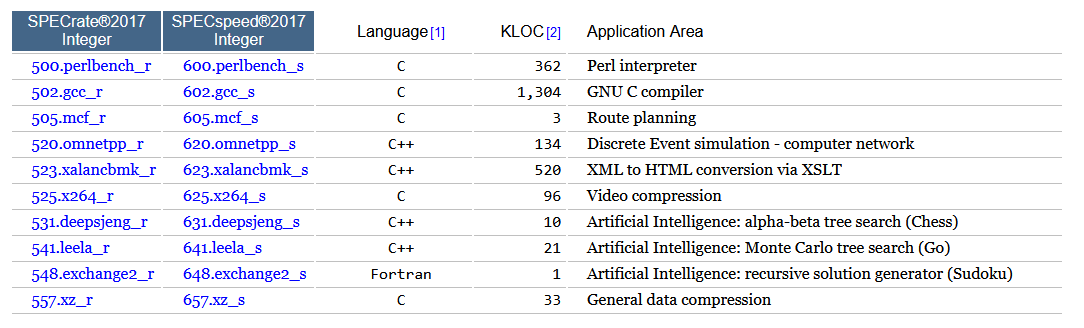

The Standard Performance Evaluation Corporation (SPEC) produce a number of benchmarking tools targeting a number of different computing domains [21]. Their CPU package includes 43 benchmarks based on real-world applications (such as the GCC compiler) and organised into 4 suites. The suites are SPECspeed 2017 Integer, SPECspeed 2017 Floating Point, SPECrate 2017 Integer and SPECrate 2017 Floating Point. The SPECspeed suites compare the time taken for a computer to complete single tasks, whilst the SPECrate suites measure throughput per unit of time. The Hack platform is not equipped with floating point units and any support for fractional values would have to be implemented in software, therefore only the integer benchmarks are of interest for this project. The programs that make up the SPEC integer suites are shown in Figure 3. More information can be found on the SPEC website [21] and the source code for each benchmark can be obtained by purchasing a SPEC licence. Note that KLOC refers to the number of lines of source code divided by 1000.

SimBench

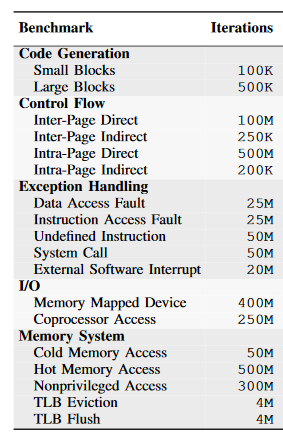

SimBench is a benchmark suite specifically designed to test the performance of CPU simulators [17]. SimBench was proposed in 2017 by Wagstaff et al., after noting that more traditional benchmarking suites such as SPEC are not able to identify specific bottlenecks in a computer architecture simulator. 18 benchmarks are included in the suite and these benchmarks are organised into five categories: Code Generation, Control Flow, Exception Handling, I/O and Memory System. Figure 4 shows the benchmarks making up SimBench. Wagstaff et al. include a detailed description of each of the benchmarks and the source code is available from https://github.com/gensim-project/simbench.

Cycles per Instruction

Every instruction within a CPU’s instruction set will require a certain number of cycles to complete. Different instructions may take a different number of cycles to complete. For example, an add instruction may take 2 cycles whilst a divide instruction could take 20 cycles to complete. Memory accesses typically take the most cycles to complete. The number of cycles it takes to complete a given instruction is called Cycles per Instruction (CPI). Average CPI (across all instructions) is often used as a performance metric as it describes the average number of cycles required to complete an instruction. Since a cycle is effectively the CPU's base time unit, average CPI gives a good indication of what latency can be expected for an instruction.
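Average CPI is simply total cycles divided by total instructions executed. The Rust sketch below computes it from a per-class instruction mix; the `InstructionClass` type and `average_cpi` function are hypothetical names, and any instruction mix fed to it here is invented for illustration.

```rust
/// How many times a class of instruction was executed and how many cycles each execution took.
struct InstructionClass {
    count: u64,
    cycles: u64,
}

/// Average CPI = total cycles / total instructions, or `None` if nothing was executed.
fn average_cpi(classes: &[InstructionClass]) -> Option<f64> {
    let instructions: u64 = classes.iter().map(|c| c.count).sum();
    let cycles: u64 = classes.iter().map(|c| c.count * c.cycles).sum();
    if instructions == 0 {
        None
    } else {
        Some(cycles as f64 / instructions as f64)
    }
}
```

Using the figures from the example above, a made-up run of 90 adds at 2 cycles and 10 divides at 20 cycles takes 380 cycles for 100 instructions, an average CPI of 3.8.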

Table 4 shows the CPI for a number of common operations for the Intel 8086, Intel 10th Generation Core and AMD Ryzen 3700. The timings for the Intel 8086 are taken from Liu et al. [22] whilst the timings for Intel 10th Generation Core and AMD Ryzen 3700 are taken from A. Fog [23]. The operations most relevant to the Hack platform have been selected. For purposes of simplicity the following assumptions have been made:

- Operations can only be performed on register operands.

- Memory can only be accessed by explicitly loading a value into a register from memory and explicitly saving a value from a register into memory (using MOV).

- If an instruction has multiple variants for different bit lengths, the native bit length will be used. For example, the DEC operation on the Intel 8086 has 8-bit and 16-bit variants; as the 8086 is a 16-bit processor, the 16-bit variant will be used.

- Where indirect and direct addressing modes are available, direct addressing has been used.

Some of the values in the table are ranges. This is because the number of cycles required to complete an instruction may depend on the architectural state of the system or the operands supplied to the instruction. Factors may include whether a prediction was correct, the state of the memory cache or the size of the operands.

Table 4 Comparison of CPI for a number of instructions across 3 different CPUs.

| Instruction | Intel 8086 | Intel 10th Gen. Core | AMD Ryzen 3700 |
| --- | --- | --- | --- |
| NEG | 3 | 1 | 1 |
| DEC, INC | 2 | 1 | 1 |
| ADD, SUB | 3 | 1 | 1 |
| CMP | 3 | 1 | 1 |
| MUL (unsigned) | 118-133 | 3 | 3 |
| IMUL (signed) | 128-154 | 3 | 3 |
| DIV (unsigned) | 144-162 | 15 | 13-44 |
| IDIV (signed) | 165-184 | 15 | 13-44 |
| NOT | 3 | 1 | 1 |
| AND, OR, XOR | 3 | 1 | 1 |
| JMP | 15 | n/a | n/a |
| Conditional Jump (Taken) | 16 | n/a | n/a |
| Conditional Jump (Not Taken) | 4 | n/a | n/a |
| MOV (register -> register) | 2 | 0-1 | 0 |
| MOV (register -> memory) | 15 | 2 | 0-3 |
| MOV (memory -> register) | 14 | 3 | 0-4 |

Simulator

The simulator is the centrepiece of this project as it enables a variety of optimisations to be experimented with without requiring the physical construction of a new computing system. The simulator developed in this project was programmed in the Rust programming language and development involved iterating through 3 generations.

First-Generation Simulator

The first attempt at a simulator operated on unassembled Hack assembly programs instead of directly on binaries. This meant that it had to also carry out some of the functions of an assembler, such as maintaining a symbol table. This also meant that the simulator was carrying out a large number of string comparisons.

The first-generation simulator emulated exactly 1 instruction per cycle. The screen and keyboard peripherals were simulated using the Piston [24] Rust library and this was done in the same thread as the rest of the simulator. This resulted in a trade-off between screen/keyboard updates per second and the speed of the rest of the simulator. At 15 updates per second, the simulator was able to achieve a speed of 10,000 instructions per second.

Second-Generation Simulator

The second-generation simulator looked to address a number of shortcomings with the first-generation simulator. Firstly, it operated directly on assembled binaries, which eliminated the requirement for assembler functions such as the symbol table and also removed the need for any string comparisons, significantly reducing overhead. The use of Piston for screen and keyboard simulation was replaced by the Rust bindings for the SDL2 library [25]. More significantly, the screen and keyboard peripheral simulation was moved into a separate thread, meaning that the updates per second for these devices was independent of the instruction rate for the rest of the simulation. These improvements enabled the simulator to achieve a significantly faster simulated clock speed of 5 million cycles per second at 144 peripheral updates per second.

During the development of the first-generation simulator, it was noticed that many of the programs provided by the book, once built using the toolchain (described in Appendix C: Software Implementation), were too large to fit into the 32K ROM chip. In order to combat this problem, a 32-bit mode was added to the second-generation simulator. Whilst attempts will be made later in the project to reduce the size of compiled binaries (Appendix D: Software optimisations), it would be a fairly simple undertaking to convert the Hack platform to 32 bits, for the most part simply involving replacing 16-bit registers with 32-bit registers and increasing the bus widths to 32 bits. A 32-bit Hack platform also has the benefit of having more unused bits in its C-instruction, which could enable the addition of new instructions later in the project.

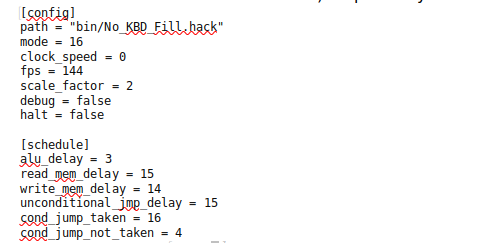

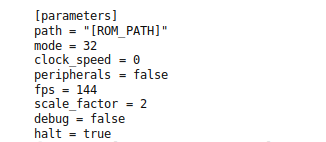

Another important improvement in the second-generation simulator was the addition of simulated cycle delays for various operations, such as imposing a 3-cycle delay for the ALU to compute an output. These delays, along with a number of other parameters, could be configured in a file such as the one shown in Figure 5. The parameters in the config section of the file enable the user, respectively, to specify the path to the program ROM they wish to run, specify 16- or 32-bit mode, specify a target clock speed (0 for unlimited), specify the number of frames/updates per second, specify a scale factor for the screen, turn debug mode on or off (which results in debug messages being output to the display) and turn halt detection on or off (which detects when the OS function halt is called and terminates the simulation).

Third-Generation Simulator

The third-generation simulator is very similar to the second-generation simulator in terms of performance and peripheral simulation. The key improvement for the third-generation simulator is splitting the simulated CPU up into 8 modules:

- Control Unit: Responsible for scheduling instructions and conducting the remaining modules

- A-Instruction Unit: Responsible for loading the constant from an A-Instruction

- Memory Unit: Responsible for reads and writes to main memory

- Operand Unit: Responsible for retrieving the x and y operands for the comp field of a C-instruction

- Arithmetic Logic Unit: Responsible for executing the comp field of a C-instruction

- Jump Unit: Responsible for executing the jump field of a C-instruction

- Write Back Unit: Responsible for saving values into the A, D and M registers and carrying out the dest field of a C-instruction.

- Decode Unit: Responsible for decoding instructions prior to executing them.

Each module is represented by a trait (similar to an interface in other programming languages) which must be replaced by a concrete implementation at runtime. This enables new optimisations to be implemented simply by writing a trait implementation for the relevant module. For example, when implementing a memory cache, only the Memory Unit would need to be rewritten, rather than producing a completely new simulator as previous generations of the simulator would require.
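The trait-per-module idea can be illustrated with a stripped-down Memory Unit. The sketch below is not the simulator's actual interface: the `MemoryUnit` trait, both implementations, the latency values and the `build_memory` factory are all hypothetical, and the one-entry cache is deliberately simplistic.

```rust
/// Hypothetical Memory Unit interface; each call reports the cycles it took.
trait MemoryUnit {
    fn read(&mut self, address: usize) -> (i16, u32);
    fn write(&mut self, address: usize, value: i16) -> u32;
}

/// Baseline implementation: every access pays the full main-memory latency.
struct BasicMemory {
    ram: Vec<i16>,
    latency: u32,
}

impl MemoryUnit for BasicMemory {
    fn read(&mut self, address: usize) -> (i16, u32) {
        (self.ram[address], self.latency)
    }
    fn write(&mut self, address: usize, value: i16) -> u32 {
        self.ram[address] = value;
        self.latency
    }
}

/// Illustrative write-through cache holding only the most recently read word.
struct SingleEntryCache<M: MemoryUnit> {
    inner: M,
    cached: Option<(usize, i16)>,
    hit_latency: u32,
}

impl<M: MemoryUnit> MemoryUnit for SingleEntryCache<M> {
    fn read(&mut self, address: usize) -> (i16, u32) {
        if let Some((cached_address, value)) = self.cached {
            if cached_address == address {
                return (value, self.hit_latency); // cache hit
            }
        }
        let (value, cycles) = self.inner.read(address); // cache miss
        self.cached = Some((address, value));
        (value, cycles)
    }
    fn write(&mut self, address: usize, value: i16) -> u32 {
        // Keep the cached copy coherent, then write through to the backing memory.
        if matches!(self.cached, Some((cached_address, _)) if cached_address == address) {
            self.cached = Some((address, value));
        }
        self.inner.write(address, value)
    }
}

/// Selects a concrete implementation at runtime, e.g. from a configuration value.
fn build_memory(use_cache: bool) -> Box<dyn MemoryUnit> {
    let basic = BasicMemory { ram: vec![0; 32_768], latency: 3 };
    if use_cache {
        Box::new(SingleEntryCache { inner: basic, cached: None, hit_latency: 1 })
    } else {
        Box::new(basic)
    }
}
```

Because the rest of the CPU would only hold a `Box<dyn MemoryUnit>`, swapping the baseline for the cached variant requires no changes to any other module.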

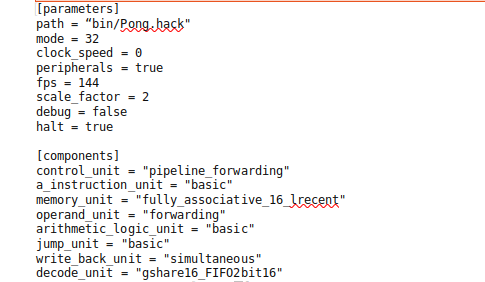

The third-generation simulator also takes a configuration file to specify the various parameters of simulation. The first section of the configuration file is similar to the second-generation configuration file, only the section has been renamed parameters and there is an additional parameter, peripherals, which can be used to turn the peripherals on or off. Instead of specifying a schedule of delays as in the second-generation simulator, the third-generation simulator allows users to specify which implementation for each of the 8 modules to use. An example of a third-generation configuration file is shown in Figure 6.

Benchmarks

The performance of the base system and various configurations of optimisations will be evaluated across a suite of benchmarks. The benchmark suite includes two “real-world” macro benchmarks taken from the book and described in detail below. In addition to this, 13 micro “synthetic” benchmarks were developed as part of this project and are described in Table 5.

Seven

Seven is one of the test programs supplied by the book. It is written in Jack and evaluates a simple expression and then displays the answer on the screen. The program makes use of the full software stack including some of the operating system routines. The assembled binary contains 41809 instructions. Along with testing the operating system boot procedure and a few of the standard library functions, the Seven program tests some of the basic features of the Jack programming language.

Pong

During initial testing of the simulators and to gain an idea of the improvement achieved by implementing various optimisations, the Pong program supplied by the book was used. Although this is an interactive game, the game will always end after the same number of cycles as long as the user does not supply any input. Under these conditions, the program is deterministic. The Pong program is the most advanced program supplied by the book and uses most of the features of the Jack programming language and OS. The assembled binary for the Pong program contains 54065 static instructions. Pong provides the closest to a real-world application that a user of a simple computer like the Hack platform would be likely to encounter.

Table 5: 13 micro synthetic benchmarks

| Name | Tests | Instructions* | Description |
|---|---|---|---|
| Array Fill | Array handling | 41958 | Iterates over a 10,000-element array and sets each element to 1. |
| Boot | Operating System | 41678 | Simply boots the system, does nothing and exits. |
| Function Calls | Function handling | 42763 | Calls 7 different functions 10,000 times each. |
| Head Recursion | Head recursion | 42148 | Computes the 23rd term of the Fibonacci sequence following a head-recursive procedure. |
| Long If | If statement | 43395 | Evaluates a long (20 clause) if-else chain 10,000 times. |
| Loop | While statement | 41809 | Iterates over a 10,000 iteration while loop. |
| Mathematics | Maths operations | 42374 | Performs a long series of mathematical operations during the course of a 10,000 iteration loop. |
| Memory Access | Memory operations | 42158 | Reads the first 2048 memory addresses (virtual registers, static variables and the stack), then writes to every memory address in the range 2048 – 24574 (heap and video memory) before finally reading memory address 24575 (keyboard memory map). |
| Memory Read | Memory reads | 41881 | Reads every memory address in the RAM. |
| Memory Write | Memory writes | 41893 | Writes to every memory address in the range 2048 – 24574, which includes the heap and video memory. |
| Objects | Object handling | 42683 | Instantiates a class, calls a member method and then disposes of it, 100 times. |
| Output | Output performance | 41970 | Prints 100 ‘Z’ characters to the screen. |
| Tail Recursion | Tail recursion | 42100 | Computes the 23rd term of the Fibonacci sequence following a tail-recursive procedure. |

*Static instruction count

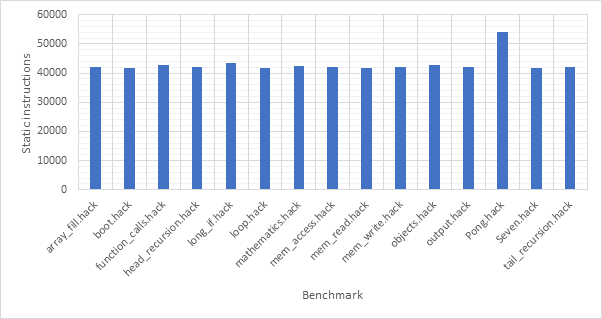

Staged Instructions (Base Model)

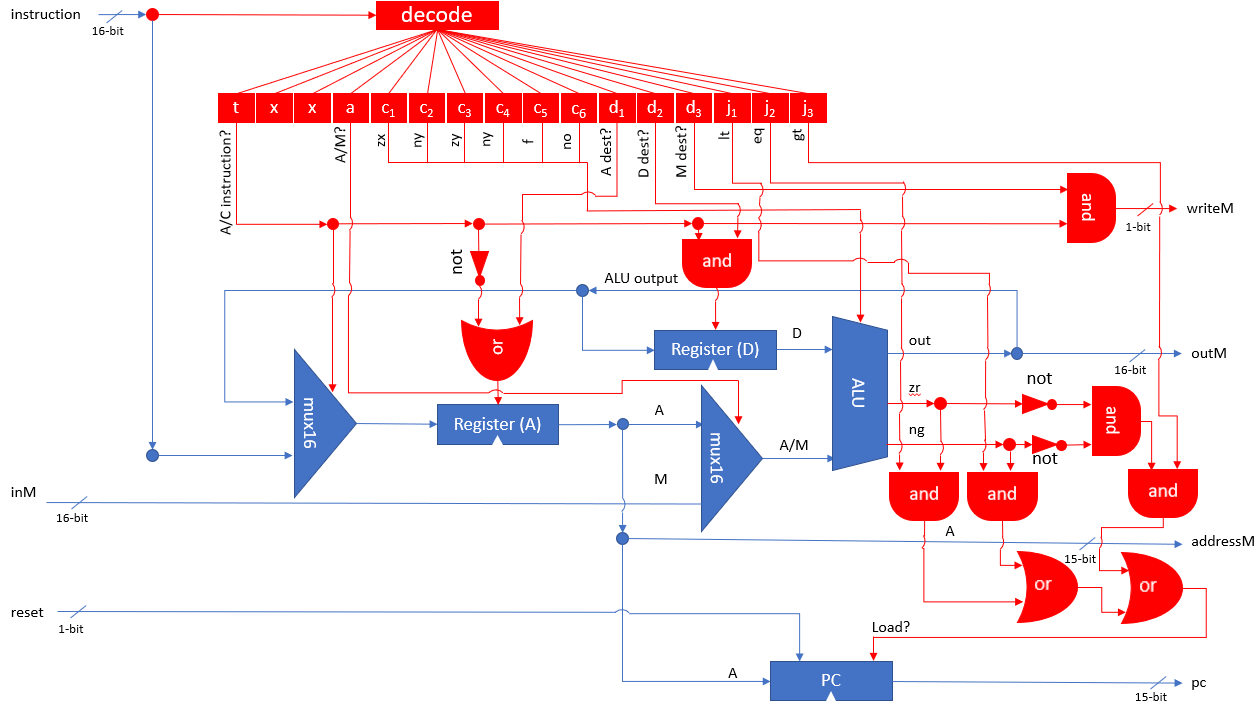

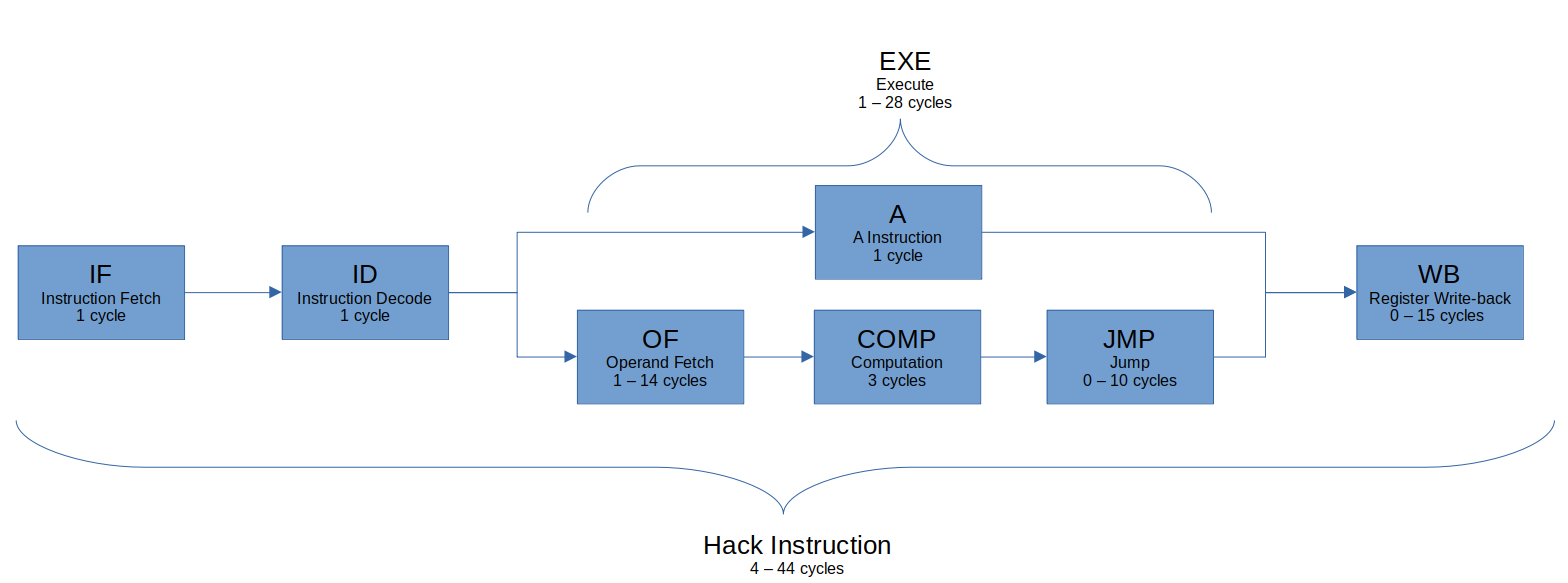

This section attempts to define a realistic schedule for how many cycles each instruction should take to complete based on the timings of the Intel 8086 shown in Table 4. This is done by splitting each single instruction into 4 stages and defining how many cycles each of these stages should take to complete in various circumstances. Taken together, this forms the base model which the optimisations in the remainder of this project attempt to improve upon.

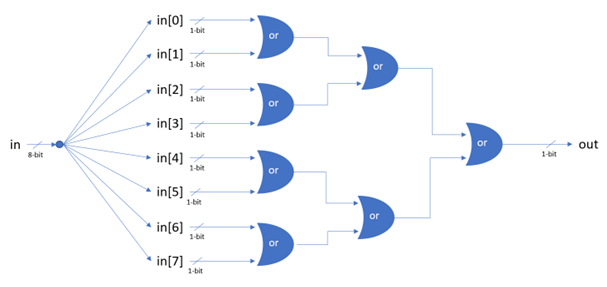

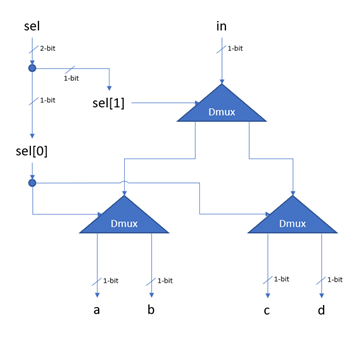

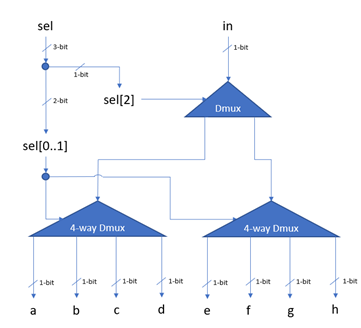

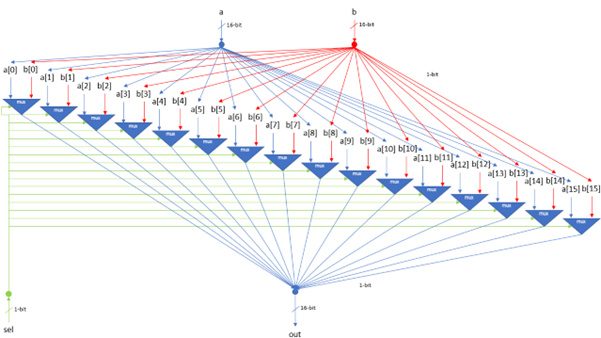

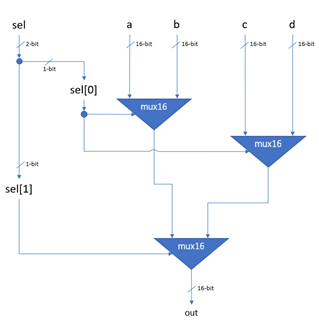

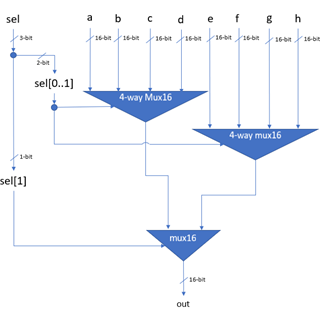

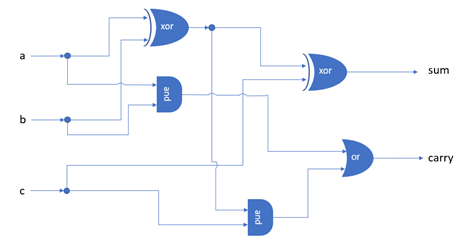

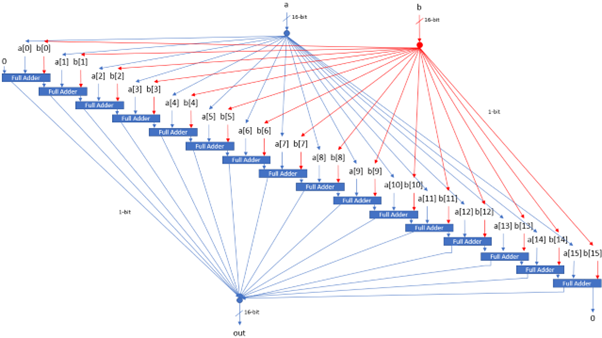

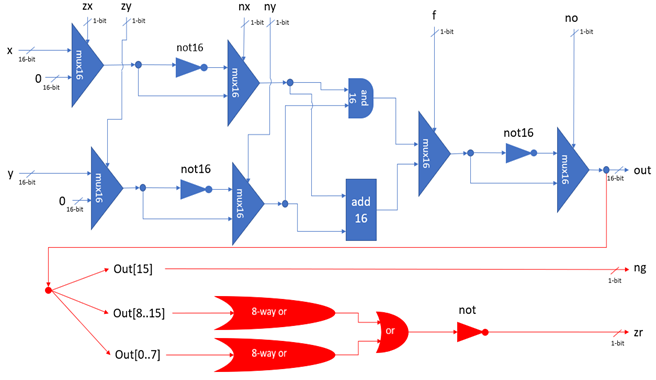

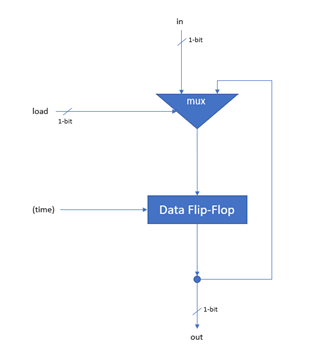

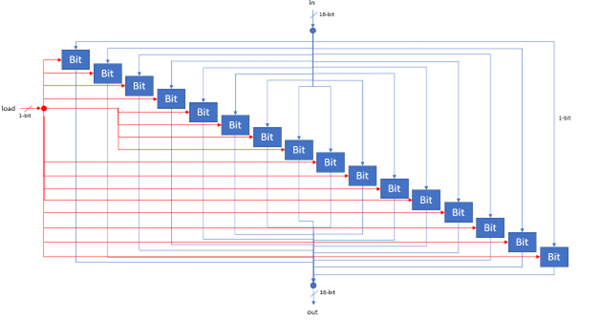

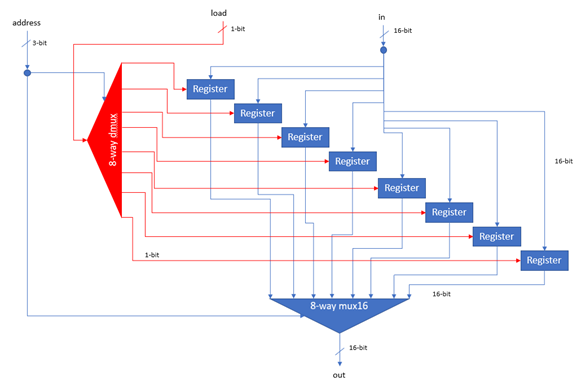

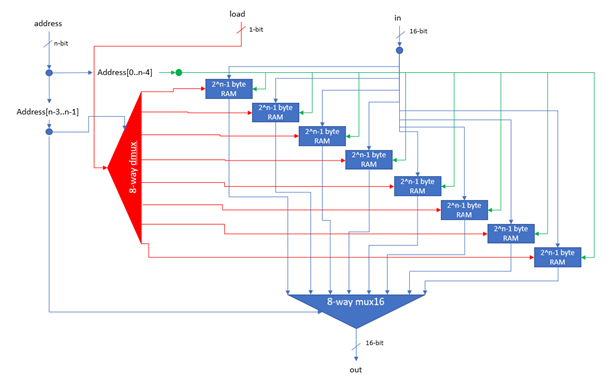

The four stages are Instruction Fetch, Instruction Decode, Execute and Write Back. The execute stage consists of 1 sub-stage in the case of an A-instruction, or 3 sub-stages in the case of a C-instruction (Operand Fetch, Computation and Jump). These stages are loosely based on the classic RISC 5-stage pipeline [26, 27]; however, they cannot be considered a true “pipeline” yet, as only one instruction can occupy the pipeline at any one time. This one instruction can only be in one of the 4 stages at any given time and the remaining stages will be empty. When an instruction is executed, the control unit orchestrates the execution of each of the stages sequentially by instructing the various modules making up the CPU. Figure 8 summarises the various stages. Note that A-instructions follow the top route along the Execute stage, whilst C-instructions follow the bottom route. Each stage is described in more detail below. The system described in this section can be simulated by setting the control_unit parameter to “staged”.

IF – Instruction Fetch

- Completes in 1 cycle

- Retrieves the next instruction from ROM

- Increments the PC by 1

ID – Instruction Decode

- Completes in 1 cycle

- Determine if A or C instruction

EXE – Execute

- Completes in 1 – 28 cycles

- A-instruction: 1 stage (A) completes in 1 cycle

- C-instruction: 3 stages (OF, COMP, JMP) completes in 4 – 28 cycles

A – A Instruction

- Completes in 1 cycle

- Loads constant from instruction

OF – Operand Fetch

- Completes in 1 – 14 cycles

- Available operands: A, D, M

- If comp field refers to M, load value from RAM[A] (14 cycles)

- If comp field refers to A and/or D, load value from A and/or D (1 cycle)

COMP – Compute

- Completes in 3 cycles

- ALU computes output based on operands and control inputs (instruction)

JMP – JUMP

- Completes in 0 – 10 cycles

- Optionally transfers control to another part of the program

- If jump field unset, completes in 0 cycles

- If jump field set:

  - Unconditional Jump: Set PC to value in A register (4 cycles)

  - Conditional Jump: Determine if jump should be taken based on ALU flags (6 cycles):

    - Taken: Set PC to value in A register (4 cycles)

    - Not taken: No further action (0 cycles)

WB – Write Back

- Completes in 0 – 15 cycles

- Optionally write ALU output to (any combination of) A, D, M

- If A-instruction, write to A

- If C-instruction, proceed based on dest field

  - If dest field unset, completes in 0 cycles

  - If dest field contains A and/or D, completes in 1 cycle

  - If dest field contains M, writes to RAM[A] and completes in 15 cycles
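The schedule above can be turned into a small cycle calculator. This is a sketch under stated assumptions, not the simulator's code: the function name and arguments are hypothetical, and it assumes the operand fetch costs 14 cycles whenever the comp field refers to M and 1 cycle otherwise.

```python
def staged_cycles(kind, reads_m=False, dest="", jump=None, taken=False):
    """Cycles for one instruction under the staged (non-pipelined) base model.

    kind:    "A" or "C"
    reads_m: comp field refers to M (C-instructions only)
    dest:    destination registers, e.g. "", "D", "AM"
    jump:    None, "unconditional" or "conditional"
    taken:   whether a conditional jump is actually taken
    """
    fetch, decode = 1, 1
    if kind == "A":
        # A sub-stage (1 cycle) followed by a write to the A register (1 cycle)
        return fetch + decode + 1 + 1

    operand_fetch = 14 if reads_m else 1  # assumption: M dominates the cost
    compute = 3

    if jump is None:
        jmp = 0
    elif jump == "unconditional":
        jmp = 4
    else:
        jmp = 6 + (4 if taken else 0)  # evaluate the condition, then maybe set PC

    if "M" in dest:
        write_back = 15
    elif dest:
        write_back = 1
    else:
        write_back = 0

    return fetch + decode + operand_fetch + compute + jmp + write_back
```

Under these assumptions an A-instruction such as `@5` costs 4 cycles, `D=M` costs 20, and `0;JMP` costs 10.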

Pipeline

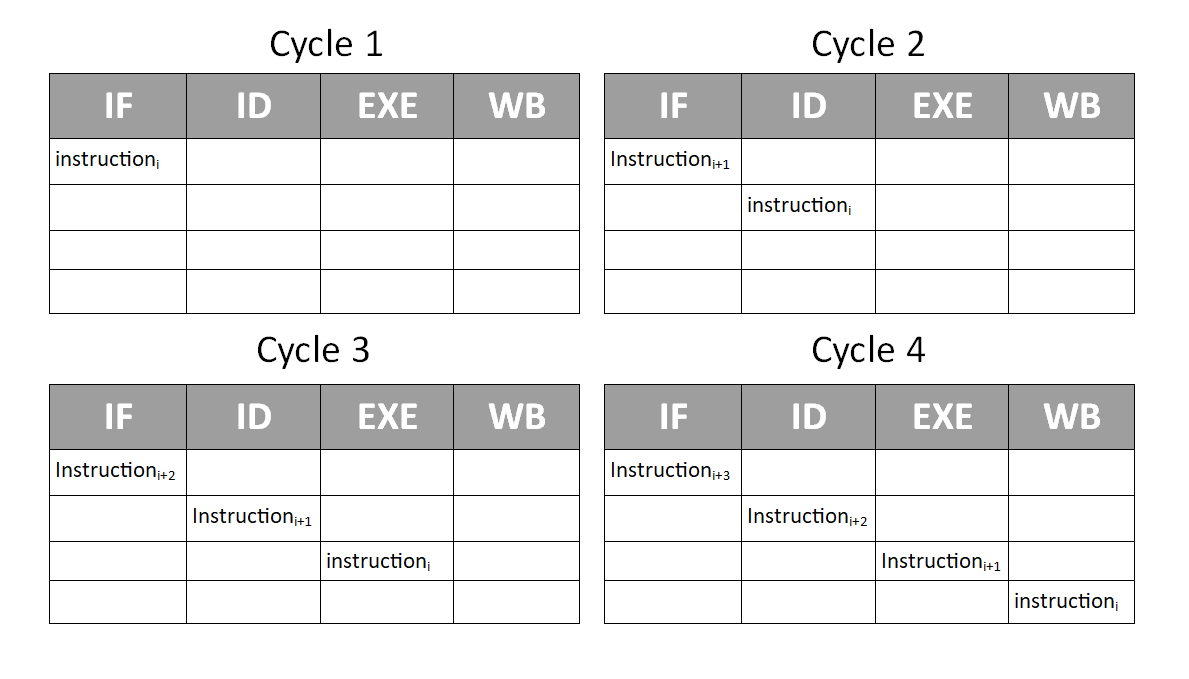

As the reference to the RISC 5-stage pipeline may have suggested, the staged instruction approach laid out in the previous section is positioned perfectly for a pipelined approach. Rather than waiting for an instruction to pass through all 4 stages before repeating the process for the next instruction, with only slight modifications, the system is able to process 4 instructions simultaneously, with one in each stage. This will be the first hardware level optimisation proposed by this project and will form the foundation for many of the other optimisations explored later in the project.

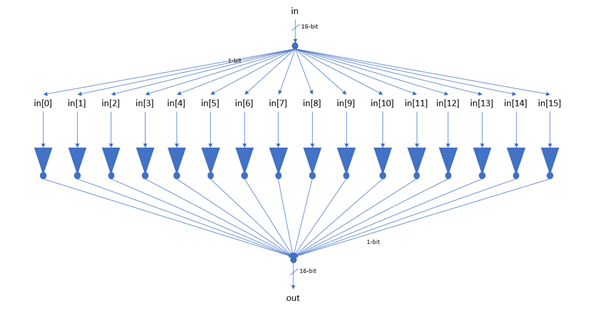

Under the pipeline approach, when instruction i is in the IF stage, instruction i-1 is in the ID stage, instruction i-2 is in the EXE stage and instruction i-3 is in the WB stage. For a simplified pipeline, where each stage takes exactly 1 cycle, this is shown in Figure 9.
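The simplified one-cycle-per-stage case can be reproduced with a short sketch. The `occupancy` function below is a hypothetical helper written for illustration; it ignores stalls, flushes and multi-cycle stages entirely.

```python
STAGES = ["IF", "ID", "EXE", "WB"]


def occupancy(num_instructions):
    """Return, for each cycle, which instruction index occupies each stage.

    Idealised model: every stage takes exactly one cycle and nothing stalls.
    A value of None means the stage is empty in that cycle.
    """
    rows = []
    for cycle in range(num_instructions + len(STAGES) - 1):
        row = {}
        for depth, stage in enumerate(STAGES):
            index = cycle - depth  # instruction i entered IF at cycle i
            row[stage] = index if 0 <= index < num_instructions else None
        rows.append(row)
    return rows


if __name__ == "__main__":
    for cycle, row in enumerate(occupancy(5)):
        print(cycle, row)
```

In this idealised model n instructions finish in n + 3 cycles, compared with 4n cycles when each instruction must pass through all four stages before the next one starts.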

All of the changes required to implement this pipelined approach can be implemented in the control unit module. The pipelined control unit must orchestrate the various processing unit modules making up the stages such that all four are (nearly) always busy. As well as tracking the contents of each of the stages, the control unit must take action to avoid two major hazards.

The first hazard occurs when a jump is taken. When an instruction causes a jump to happen, the control unit is not aware of this until after the JMP sub-stage of the EXE stage, by which point it will have already decoded and fetched the next two instructions directly following the instruction causing the jump. Since a jump instruction transfers control to another part of the program, these two instructions should not be executed. In this case, a pipeline flush or simply a flush occurs. During a flush, the contents of the IF and ID stages are discarded and no further instruction is fetched until the contents of the EXE and WB stage have completed. A flush should be triggered whenever an unconditional or conditional jump that is taken occurs.

The second hazard occurs when an instruction reads from a register that an instruction in the EXE or WB stage is simultaneously writing to. In this case, the decode stage must stall the pipeline until the relevant instruction has completed the WB stage. When the pipeline is stalled, no further instructions can enter the IF, ID or EXE stages, but instructions already in the EXE stage may continue, whilst instructions are free to enter and execute the WB stage as usual. The decode stage stalls the pipeline if it is decoding a C-instruction and the following conditions are met:

- If any instruction in pipeline is an A-instruction or a C-instruction writing to the A register AND:

  - Current instruction operates on A register OR

  - Current instruction operates on M register OR

  - Current instruction writes to M register

- If any instruction in pipeline is a C-instruction writing to the D register AND:

  - Current instruction reads from D register

- If any instruction in the pipeline is a C-instruction writing to the M register AND:

  - Current instruction reads from M register

The system described in this section can be simulated by setting the control_unit parameter to “pipeline”.
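The stall conditions listed above can be expressed as a single predicate. This is a hedged sketch: the `Instr` record and `must_stall` function are hypothetical names, and “operates on” a register is interpreted here as reading it.

```python
from dataclasses import dataclass


@dataclass
class Instr:
    """Minimal, hypothetical description of a decoded instruction."""
    is_a: bool = False             # True for an A-instruction
    reads: frozenset = frozenset()   # registers read by the comp field
    writes: frozenset = frozenset()  # registers named in the dest field


def must_stall(current, in_flight):
    """Decide whether decoding `current` must stall the pipeline.

    `in_flight` holds the instructions currently in the EXE and WB stages.
    """
    if current.is_a:
        return False  # only C-instructions can trigger a stall

    a_pending = any(i.is_a or "A" in i.writes for i in in_flight)
    d_pending = any(not i.is_a and "D" in i.writes for i in in_flight)
    m_pending = any(not i.is_a and "M" in i.writes for i in in_flight)

    # M is RAM[A], so any use of M also depends on the A register
    depends_on_a = (
        "A" in current.reads or "M" in current.reads or "M" in current.writes
    )

    return (
        (a_pending and depends_on_a)
        or (d_pending and "D" in current.reads)
        or (m_pending and "M" in current.reads)
    )
```

For example, `D=M` directly after `@5` must stall, because the address held in A is still being written when the operand fetch would need it.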

Pipeline Forwarding

Pipeline forwarding is an optimisation technique where earlier stages are able to take advantage of information produced by later stages in order to gain a cycle advantage. Two pipeline forwarding techniques are utilised in this project, which are discussed below. In order to take advantage of these techniques, a new forwarding-enabled control unit is required. To enable the forwarding-enabled control unit, set the control_unit parameter to “pipeline_forwarding”. Note that the pipeline forwarding control unit is also capable of branch predictions.

Simultaneous write back

In the original pipeline model, the write back stage occurs after the jump sub-stage of the execute stage has completed. The computation sub-stage of the execute stage determines the ALU output, out. The jump sub-stage sets the program counter based on out and the write back stage writes out to any combination (or none) of the 3 registers. Hopefully the reader can see that these two steps are mutually independent – both require out to be ready, but neither modifies it. For instructions that contain possible branches, the simultaneous write back optimisation takes advantage of this and performs both the jump sub-stage and the write back stage at the same time. Since both stages have the potential to take a significant number of cycles, performing them simultaneously has the potential to greatly increase instruction throughput. The write_back_unit parameter should be set to “simultaneous” to employ this technique.

Operand Forwarding

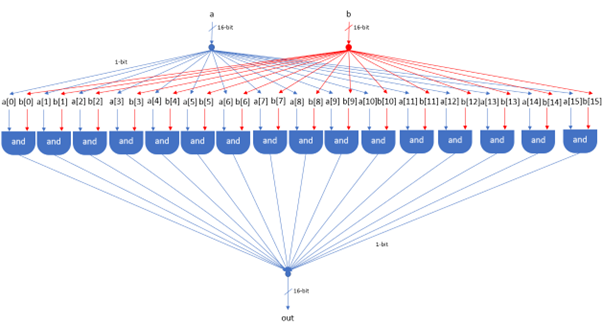

In the original pipeline model, if the decode stage encounters any instructions which rely on registers being modified by instructions in the execute or writeback stage, the pipeline is stalled until the later instructions have completed. This is because the operand fetch sub-stage of the execute stage cannot begin until all the registers it will access have been updated. The operand forwarding technique attempts to alleviate this problem by allowing the operand unit to retrieve the updated values for dependent registers directly from the write back unit, without waiting for it to finish writing this data back to the relevant register. This has the potential to reduce the amount of time the CPU spends stalled. Instructions are no longer stalled when dependent on an instruction in the write back stage and instructions dependent on an instruction in the execute stage are only stalled until that instruction enters the write back stage. However, this creates another problem – it is possible for memory reads (by the operand unit) to occur at the same time as memory writes (by the write back unit). This is alleviated by adding an additional memory unit, using one as a dedicated writer and the other as a dedicated reader. This solution has the side effect of creating two independent memory caches (if memory caching is enabled). The operand_unit parameter should be set to “forwarding” to employ this technique.

Memory Caches

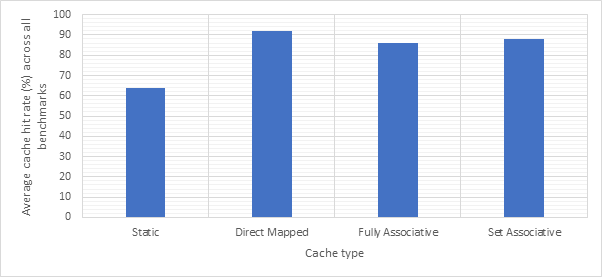

Based on the figures presented in Table 4, main memory is very slow, taking 15 cycles to write a value and 14 cycles to read a value. Therefore, the CPU would significantly benefit from having access to a bank of faster memory. All the memory caches simulated in this project are able to provide 1 cycle read/write access to any memory addresses that are “cached”.

Static Memory Cache

The static memory cache is the most basic memory cache explored in this project. The static memory cache caches the first x memory locations of the main memory, where x is the size of the cache. As the VM implementation frequently accesses data stored in the first 16 locations (e.g. location 0 is the Stack Pointer), substantial performance improvements are on offer. The static memory cache can be enabled by setting the memory_unit parameter to “static_x” where x is the desired size of the static cache.

Direct Mapped Cache

Direct mapped caches are the simplest class of caches that are able to cache any memory location within the main memory. Each memory location in the main memory is mapped to a single cache line in the memory cache. The exact cache line index is found by looking at the last x bits of the memory address, where x is log2 of the cache size. This index is determined by applying a bitwise AND mask to the memory address. When an address is accessed, if the address is currently cached, it can be accessed in a single cycle. If not, the access takes the standard number of cycles, but the address is brought into the cache ready for future accesses. If the relevant cache line is already occupied by a different address (a conflict miss), the original occupant is evicted and replaced with the new address. The direct mapped cache can be enabled by setting the memory_unit parameter to “direct_mapped_x” where x is the desired size of the direct mapped cache.
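The index calculation and hit/miss timing can be sketched as follows. This is an illustrative model only: the class and method names are hypothetical, only cycle counts are tracked (not the stored data), and the miss penalties are the 14-cycle read and 15-cycle write figures used for main memory in this project.

```python
class DirectMappedCache:
    """Timing-only model of a direct mapped cache (hypothetical sketch)."""

    def __init__(self, size, read_cycles=14, write_cycles=15):
        if size <= 0 or size & (size - 1):
            raise ValueError("size must be a power of two")
        self.mask = size - 1          # keeps the last log2(size) address bits
        self.lines = [None] * size    # each line remembers which address it holds
        self.read_cycles = read_cycles
        self.write_cycles = write_cycles

    def access_cycles(self, address, write=False):
        """Return the cycle cost of accessing `address`, updating the cache."""
        index = address & self.mask
        if self.lines[index] == address:
            return 1                  # hit: single-cycle access
        self.lines[index] = address   # miss: evict the occupant, cache the new address
        return self.write_cycles if write else self.read_cycles
```

With an 8-line cache, addresses 3 and 11 share line 3 (11 & 7 = 3), so alternating between them misses every time even though the rest of the cache is empty.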

N-Way Set Associative Cache

In a set associative cache, the cache is organised into sets, where each set contains a number of cache lines. The set associative cache has two parameters, size and ways. The ways parameter specifies the number of cache lines in each set. Therefore, the number of sets is determined by dividing size by ways. For example, a 2-way 16 line set associative cache would have 8 sets. Each memory location in main memory is mapped to a single set, but could occupy any of the lines within that set. The set index is found by looking at the last x bits of the memory address, where x is log2 of the number of sets; this is also determined using a bitwise AND mask. This organisation increases the complexity of the cache, but reduces conflict misses. Much like the direct mapped cache, when an address is accessed, if the address is currently cached, it can be accessed in a single cycle. If not, the access takes the standard number of cycles, but the address is brought into the cache ready for future accesses. If the relevant set has an unoccupied cache line, the address is brought into that empty line. If, however, the set is already fully occupied, one of the existing occupants is evicted and replaced by the new address. The cache line to be evicted is determined by the cache eviction policy. Note that a direct mapped cache is a 1-way set associative cache. The n-way set associative cache can be enabled by setting the memory_unit parameter to “n_way_set_associative_x_y” where x is the desired number of ways and y is the desired size of the n-way set associative cache.

Fully Associative Cache

In a fully associative cache, any memory location can be cached in any cache line. This scheme offers the most flexibility and therefore the fewest conflict misses; however, it is the most complex cache and hence the most difficult/expensive to implement in hardware. In the real world, this additional complexity usually comes at a cost – longer lookup time when compared with other caches. This additional lookup time has not been considered in these simulations. As with the previous two caches, when an address is accessed, if the address is currently cached, it can be accessed in a single cycle. If not, the access takes the standard number of cycles, but the address is brought into the cache ready for future accesses. If there is an unoccupied cache line anywhere within the cache, the address is brought into that cache line. If not, one of the cache lines is evicted and the new address is brought into that cache line. The cache line to be evicted is determined by the eviction policy. Note that a fully associative cache is a special case of the n-way set associative cache, where n is equal to the size of the cache. The fully associative cache can be enabled by setting the memory_unit parameter to “fully_associative_x” where x is the desired size of the fully associative cache.

Eviction Policies

Eviction policies are used to determine which cache line should be evicted to make way for a newly accessed memory location. These policies are employed by the N-way set associative cache to determine which cache line within a set should be evicted, whilst they are employed by the fully associative cache to decide which cache line within the entire cache should be evicted.

FIFO

When using the First In, First Out (FIFO) eviction policy, cache lines are organised into a queue in the order they were brought into the cache. When a new address is cached, it is placed at the back of the queue. When it is time to evict a cache line, the cache line that was occupied least recently and is therefore at the front of the queue, is evicted.

LIFO

The Last In, First Out (LIFO) eviction policy is similar to the FIFO eviction policy in that cache lines are still organised into a queue in the order they were brought into the cache. However, when it is time to evict a cache line, the cache line that was occupied most recently and is therefore at the back of the queue is selected for eviction.

Least Recent

The least recent eviction policy also organises cache lines into a queue, however unlike the previous two policies, the queue is ordered by when the cache line was last accessed rather than when it was brought into the cache. This means that when accessing an address that has already been cached, the cache line is moved to the back of the queue. When it is time to evict a cache line, the cache line at the front of the queue is selected. This means the cache line that was accessed least recently is evicted.

Most Recent

The most recent eviction policy is similar to the least recent eviction policy; however, it evicts the most recently accessed cache line instead of the least. The cache lines are kept in a queue following the same rules as the least recent policy, but evictions are made from the back of the queue instead of the front.
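The four eviction policies differ only in whether a hit reorders the queue and which end of the queue is evicted. The sketch below models an n-way set associative cache (hits and misses only, no data or timing); the class name is hypothetical and the policy names are borrowed from the configuration strings used for the target predictors. Setting ways equal to size gives a fully associative cache, and ways equal to 1 gives a direct mapped cache.

```python
class SetAssociativeCache:
    """Hit/miss model of an n-way set associative cache (hypothetical sketch)."""

    def __init__(self, size, ways, policy="fifo"):
        self.num_sets = size // ways  # assumed to be a power of two
        self.ways = ways
        self.policy = policy          # "fifo", "lifo", "leastrecent" or "mostrecent"
        # One queue per set: index 0 is the front, the last element is the back.
        self.sets = [[] for _ in range(self.num_sets)]

    def access(self, address):
        """Return True on a hit; on a miss, bring the address into its set."""
        queue = self.sets[address & (self.num_sets - 1)]
        if address in queue:
            if self.policy in ("leastrecent", "mostrecent"):
                # Recency-ordered policies move a hit line to the back.
                queue.remove(address)
                queue.append(address)
            return True
        if len(queue) == self.ways:
            if self.policy in ("fifo", "leastrecent"):
                queue.pop(0)          # evict from the front of the queue
            else:
                queue.pop()           # lifo / mostrecent: evict from the back
        queue.append(address)
        return False
```

For a two-line fully associative cache and the access sequence 0, 1, 0, 2, FIFO evicts address 0 (the oldest arrival) while the least recent policy evicts address 1 (the line that has gone longest without an access).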

Branch Prediction

Under the standard pipeline model, the pipeline must be flushed every time a branch is taken. This is because the fetch and decode stages will already contain instructions i+1 and i+2 when they should contain instructions t and t+1, where i is the (program counter) address of the instruction containing the jump and t is the target address of the branch. This situation arises because the fetch and decode stages are unaware that there will be a jump in instruction i until the execute stage has concluded for that instruction, by which time they will already have loaded the subsequent instructions i+1 and i+2.

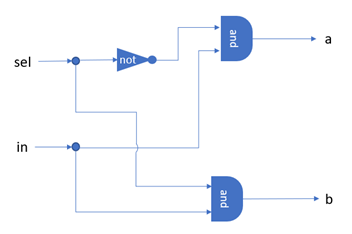

Branch prediction is an optimisation technique that attempts to alleviate this problem. During the decode stage, the decode unit determines if an instruction contains a branch. If it does contain a branch, the decode unit makes a prediction about the outcome of the branch (taken or not taken). Unconditional jumps are always predicted to be taken.

If the jump is predicted not taken, then no further action is taken and the program proceeds to the next instruction as normal.

If the jump is predicted to be taken, then the decode unit also makes a prediction about the target of the jump. The program counter is then updated to the predicted target of the jump and a “half-flush” is triggered. During a “half-flush” only the fetch unit is cleared. During the next cycle, the fetch unit will fetch the next instruction from the predicted target address.

Once the execute stage concludes, the actual branch outcome and branch target are compared to their predictions. If they are equal, then no further action is needed and a number of cycles are saved. If the predictions were wrong, then a pipeline flush is triggered so that the correct instructions can be retrieved. Therefore, the flush is avoided as long as both the outcome and target prediction are correct.

Note that most modern architectures support both direct and indirect branches. In a direct branch, the branch target is contained within the branch instruction. During an indirect branch, the branch target is contained elsewhere, typically within a register. This means that branch target prediction is only needed for indirect branches. However, the Hack architecture only supports indirect branching – the branch target is always the value of the A register at the time the jump is taken. As a result, for the Hack architecture, branch outcome prediction is only useful when employed in combination with branch target prediction.

Branch prediction requires changes to both the control and decode units. The prediction-enabled pipeline can be enabled by setting the control_unit parameter to “prediction_pipeline” in the simulator configuration. There are a number of different outcome and target prediction strategies, which are discussed below. These can be enabled by setting the decode_unit parameter to the relevant strategy. However, as discussed previously, it is not useful to have an outcome predictor without a target predictor, so both an outcome and target strategy should be specified. This can be done by including both strategies within the decode_unit parameter, separated by an underscore, e.g., “gshare16_fifo2bit16”.

Branch Outcome Prediction

A branch outcome predictor produces a prediction of either taken or not taken for a given instruction address (prediction). After the branch is fully resolved, the predictor is told the actual outcome of the branch so that it can make any relevant updates (confirmation).

Never Taken

This is the simplest prediction strategy and in fact is the behaviour already exhibited by the pipeline. Branches are always assumed to be not taken and the instruction after the branch instruction is loaded. The only benefit to this strategy over not using the prediction-enabled pipeline is that target predictions will be made and acted upon in the case that the branch is unconditional if a target predictor has been enabled. The never taken strategy ignores any confirmations it receives and is therefore a static strategy. The decode_unit parameter should be set to “nevertaken” to employ this strategy.

Always Taken

The always taken strategy predicts taken in all circumstances. Like the never taken strategy, it ignores all confirmations and is therefore a static strategy. This strategy should be correct approximately 50% of the time, however this will depend on the program being executed. If the compiler is aware that the always taken strategy is in use, code can be reorganised to take advantage of the fact that all branches will be assumed to be taken. The decode_unit parameter should be set to “alwaystaken” to employ this strategy.

Global 1-bit predictor

The global 1-bit predictor is the first dynamic branch prediction strategy explored by this project. This predictor simply predicts the same outcome as the most recently resolved branch (regardless of instruction address). This is done by storing the outcome of the last branch and returning it when a prediction is requested. This outcome is updated when receiving a confirmation. The decode_unit parameter should be set to “global1bit” to employ this strategy.

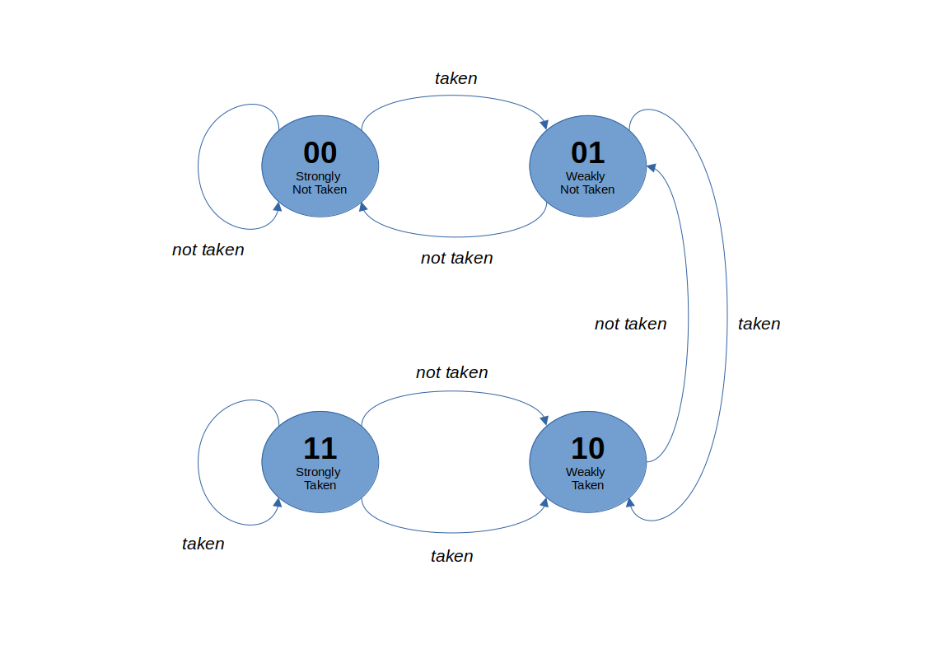

Global 2-bit predictor

The global 2-bit predictor is a dynamic strategy similar to the global 1-bit predictor. This predictor utilises a 2-bit saturating counter. This 2-bit counter is capable of storing the values 0-3 (inclusive). If the counter is 0 or 1, the predictor will predict not taken, whilst if the counter is 2 or 3, the prediction is taken. When a confirmation is received, the counter is incremented if the branch was in fact taken and decremented if it was not. This is illustrated in Figure 10. When compared to the 1-bit prediction strategy, this has the benefit of making the prediction more stable by requiring two mispredictions in a row in order to change the predicted outcome. The decode_unit parameter should be set to “global2bit” to employ this strategy.
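A 2-bit saturating counter takes only a few lines to model. The class below is a sketch with hypothetical names; the initial counter value is an assumption, since it is not specified above.

```python
class Global2BitPredictor:
    """Single 2-bit saturating counter shared by all branches (sketch)."""

    def __init__(self, counter=0):
        self.counter = counter  # assumed starting state; valid values are 0..3

    def predict(self):
        """Predict taken (True) when the counter is 2 or 3."""
        return self.counter >= 2

    def confirm(self, taken):
        """Move the counter towards the actual outcome, saturating at 0 and 3."""
        if taken:
            self.counter = min(3, self.counter + 1)
        else:
            self.counter = max(0, self.counter - 1)
```

Starting from a saturated counter of 3, a single not-taken outcome moves the counter to 2 and the prediction remains taken; only a second consecutive not-taken outcome flips the prediction.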

Local 1-bit predictor

The local 1-bit predictor is a dynamic strategy that holds a prediction table of a size specified by the size parameter. Each (program memory) address is mapped to one of the cells in the table. The specific table index is found by looking at the last x bits of the address where x is equal to log2 of the table size and is determined using a bitwise AND mask. Each cell contains a boolean prediction, equal to the outcome of the last branch instruction that had an address mapping to the same cell. This outcome is updated when a confirmation is received. The decode_unit parameter should be set to “local1bit[size]” to employ this strategy, where [size] should be replaced by the desired table size.

Local 2-bit predictor

The local 2-bit predictor is a dynamic strategy similar to the local 1-bit predictor strategy in that it also holds a prediction table and each address is mapped to one of the cells in the table. However, instead of storing a boolean outcome in the table, a 2-bit saturating counter is stored. This counter is updated in the same way as the 2-bit global strategy. The decode_unit parameter should be set to “local2bit[size]” to employ this strategy, where [size] should be replaced by the desired table size.

Gshare

Gshare, or Global Sharing, is a dynamic strategy that attempts to combine the benefits of the global and local predictors by employing a hybrid approach, enabling it to leverage both global and local information. Gshare also holds a prediction table of a size specified by the size parameter, where each cell contains a 2-bit saturating counter. In addition to this, the gshare predictor maintains a global history (shift) register (GHR). This register stores the outcomes of recent branches in the form of a binary number where each digit represents a taken (1) or not taken (0) outcome. When the predictor receives a confirmation, it updates the GHR by shifting it one place to the left and then setting the last (least significant) bit to true if the outcome was taken, leaving it as false if not. The relevant 2-bit counter in the prediction table is also updated. The index to the prediction table is found by XORing the last x bits of the (program memory) address and the last x bits of the GHR, where x is log2 of the size of the prediction table. The decode_unit parameter should be set to “gshare[size]” to employ this strategy, where [size] should be replaced by the desired table size.
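The table indexing and history update can be sketched as below. The names are hypothetical and several details are assumptions: counters start at 0, the history register is truncated to the same number of bits as the table index, and the common convention is used in which the history is shifted left so that its least significant bit holds the most recent outcome.

```python
class GsharePredictor:
    """Gshare sketch: 2-bit counters indexed by address XOR global history."""

    def __init__(self, size):
        self.mask = size - 1      # size is assumed to be a power of two
        self.table = [0] * size   # 2-bit saturating counters, assumed to start at 0
        self.ghr = 0              # global history register

    def _index(self, address):
        # XOR the low bits of the address with the low bits of the history.
        return (address ^ self.ghr) & self.mask

    def predict(self, address):
        return self.table[self._index(address)] >= 2

    def confirm(self, address, taken):
        # Update the counter selected by the history seen at prediction time...
        index = self._index(address)
        if taken:
            self.table[index] = min(3, self.table[index] + 1)
        else:
            self.table[index] = max(0, self.table[index] - 1)
        # ...then record the outcome in the history register.
        self.ghr = ((self.ghr << 1) | int(taken)) & self.mask
```

Because the index depends on the history, a branch at one address can use several different counters, one for each recent pattern of outcomes that precedes it.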

Branch Target Prediction

A branch target predictor produces a target address prediction for a given instruction address (prediction). After the branch is fully resolved, the predictor is told the actual target address of the branch so that it can make any relevant updates (confirmation).

1-bit target predictor

The 1-bit target predictor acts similarly to a memory cache. It maintains a target buffer of a size specified by the size parameter. Each entry in the buffer contains an instruction address and the target address of that instruction the last time it was executed. When a confirmation is received, this target address is updated. If a prediction is requested for an address that is not already in the buffer, a prediction of target address 0 is produced and the address is added to the buffer so that it is ready for next time. In this way, the target buffer stores the last size branch instructions and their targets. If a new address is added when the buffer is full, one of the existing entries is evicted according to the selected eviction policy. The policies available are the same as the ones used by the set associative and fully associative memory caches. The decode_unit parameter should be set to “[eviction_policy]1bit[size]” to employ this strategy, where [eviction_policy] should be replaced by the desired eviction policy (fifo, lifo, mostrecent or leastrecent) and [size] should be replaced by the desired table size.

2-bit target predictor

The 2-bit target predictor is similar to the 1-bit target predictor: it also maintains a target buffer of a size specified by the size parameter and uses the same eviction strategies to make room once the buffer is full. When a prediction is requested, the address is looked up in the buffer and the respective target address is produced, or 0 if the address does not appear in the target buffer. However, in addition to storing the instruction address and target address in the buffer, a 2-bit counter is also stored. When a confirmation is received, the counter is incremented by 1 if the predicted target was correct and decremented by 1 if it was wrong. The stored target is only updated once the counter reaches 0. When a target is updated, or a new entry added to the target buffer, the counter is (re)initialised to 1. The decode_unit parameter should be set to “[eviction_policy]2bit[size]” to employ this strategy, where [eviction_policy] should be replaced by the desired eviction policy and [size] should be replaced by the desired table size.
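The counter behaviour of the 2-bit target predictor can be sketched as follows. The class name is hypothetical, only FIFO eviction is modelled for brevity, and the counter is assumed to saturate at 3.

```python
from collections import OrderedDict


class TwoBitTargetPredictor:
    """Target buffer with a 2-bit confidence counter per entry (FIFO sketch)."""

    def __init__(self, size):
        self.size = size
        self.buffer = OrderedDict()  # address -> [target, counter], oldest first

    def predict(self, address):
        """Return the stored target, or 0 for an address not yet in the buffer."""
        if address not in self.buffer:
            if len(self.buffer) == self.size:
                self.buffer.popitem(last=False)  # FIFO: evict the oldest entry
            self.buffer[address] = [0, 1]        # new entries start with counter 1
        return self.buffer[address][0]

    def confirm(self, address, actual_target):
        """Update the entry once the real target of the branch is known."""
        entry = self.buffer.get(address)
        if entry is None:
            return
        if entry[0] == actual_target:
            entry[1] = min(3, entry[1] + 1)      # assumed to saturate at 3
        else:
            entry[1] -= 1
            if entry[1] <= 0:
                # Replace the target only once confidence is exhausted.
                entry[0], entry[1] = actual_target, 1
```

Once an entry has been confirmed correct at least once, a single jump to a different target does not overwrite the stored target; it takes repeated mispredictions to drain the counter first.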

Results

Methodology

This section aims to use graphs and explanatory text to showcase, explore and compare the effects of the optimisations discussed in this project. This has been done by statistically analysing performance data generated during simulated runs of various different configurations. Each of the configurations has a different combination of optimisations enabled.

Two Python scripts were developed for the purposes of gathering data. The source code for both of these scripts can be found in Appendix A: Links. The first script, generate_configs.py, is used to generate batches of simulator configurations where each configuration is one permutation of the possible CPU components paired with one of the benchmark programs. Each possible permutation has one simulator configuration for each of the 15 benchmarks. The components included in these permutations are specified as a parameter. The second script, benchmarker.py, is used to run an arbitrary number of configurations on the simulator and collate all the results into a CSV file. The script has the parameter batch size, which specifies how many simulations should be run simultaneously.
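The way the number of configurations grows can be illustrated with a short sketch. This is not the code of generate_configs.py: the function, the dictionary layout and the `rom` key are assumptions made for illustration, and only a handful of component choices are shown.

```python
from itertools import product


def generate_configs(components, benchmarks):
    """Yield one configuration per (component permutation, benchmark) pair.

    components: mapping of module parameter name -> list of implementation names
    benchmarks: list of benchmark ROM names (hypothetical identifiers)
    """
    names = list(components)
    for choice in product(*(components[name] for name in names)):
        for rom in benchmarks:
            yield {"rom": rom, **dict(zip(names, choice))}


if __name__ == "__main__":
    components = {
        "control_unit": ["staged", "pipeline"],
        "memory_unit": ["basic", "static_16", "direct_mapped_16"],
    }
    benchmarks = [f"benchmark_{i}" for i in range(15)]
    configs = list(generate_configs(components, benchmarks))
    print(len(configs))  # 2 control units x 3 memory units x 15 benchmarks
```

Because the total is the product of the number of choices for every module and the number of benchmarks, adding size and ways parameters to several modules quickly multiplies the count.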

Initially, it was intended to run simulations on every possible combination of CPU units. For components that have a size parameter, the values used were 8, 16, 32, 64, 128 and 256. For the ways parameter in n-way set associative memory caches, the values used were 2, 4, 8 and 16. This resulted in 7.5 million different configurations, too many to simulate in a reasonable time frame.

Instead of running all 7.5 million simulations, around 18,000 simulations, organised into 4 sets, were run. The first included every possible permutation of components, excluding the memory unit and decode unit which were set to basic. This generated only 75 configurations which could be simulated in a small amount of time. The second set included every possible permutation of memory unit with every other component set to basic. This generated around 1,800 configurations and took approximately 1 hour to simulate. The third set included every possible permutation of decode unit with every other component set to basic. This generated around 16,000 configurations and took approximately 10 hours to complete. The final set consisted of 8 manually selected configurations, resulting in 120 configurations which also completed in a short period of time. Every simulation used the same parameter settings with the obvious exception of the ROM path, as shown in Figure 11.

Base configuration

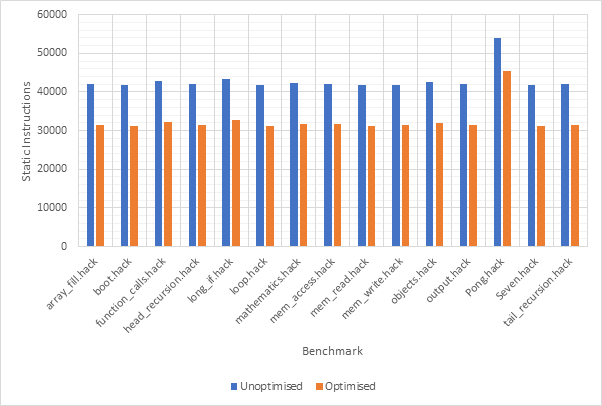

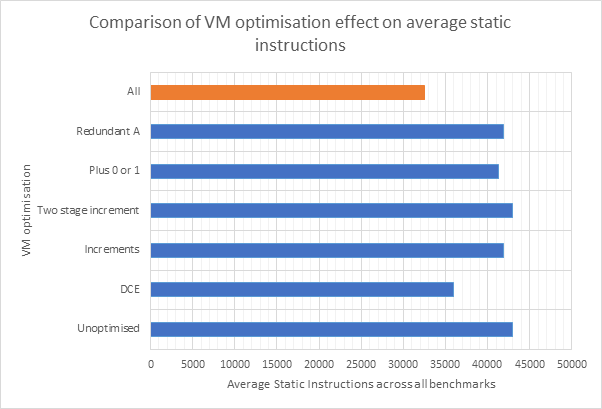

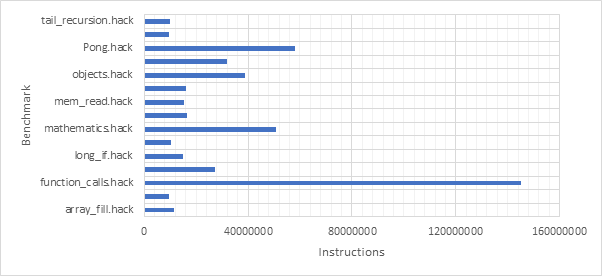

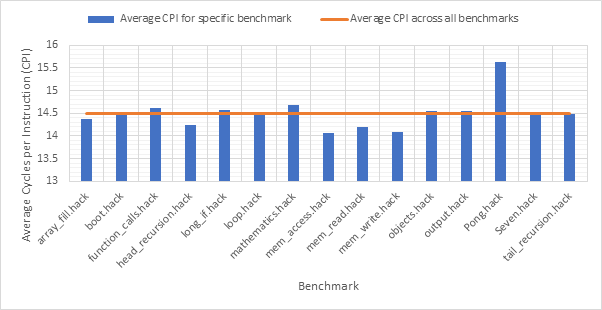

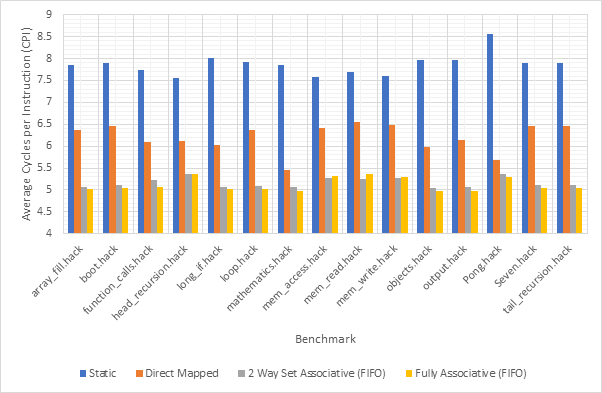

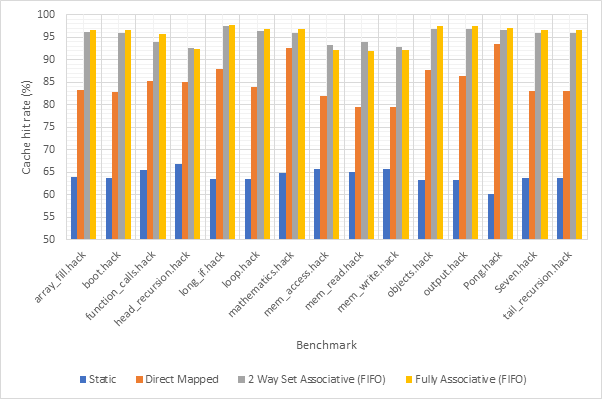

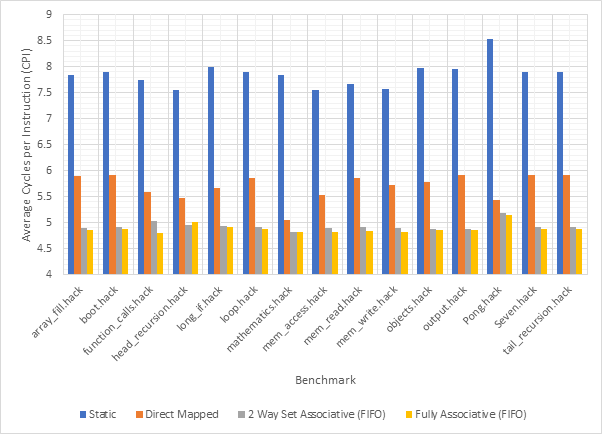

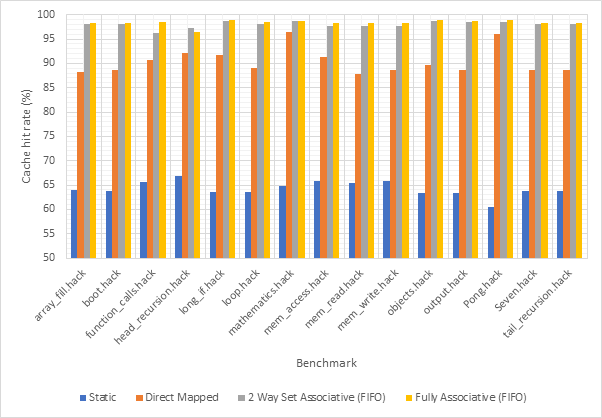

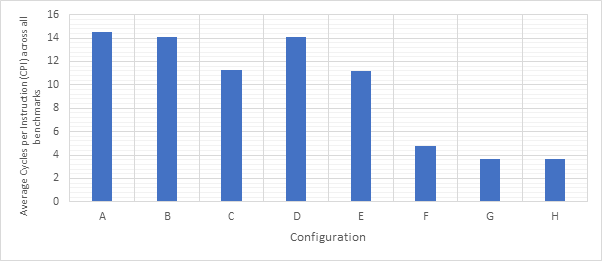

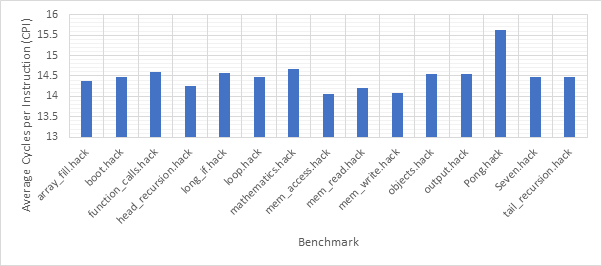

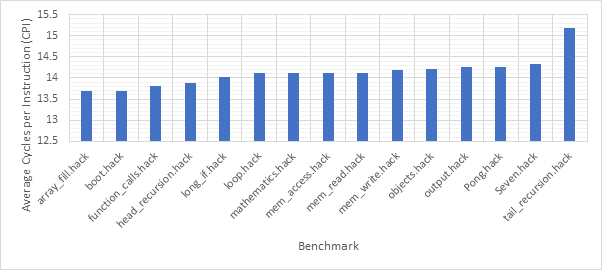

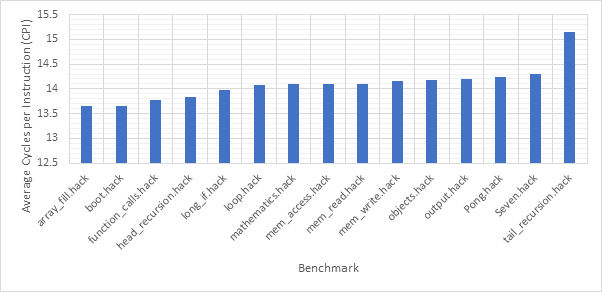

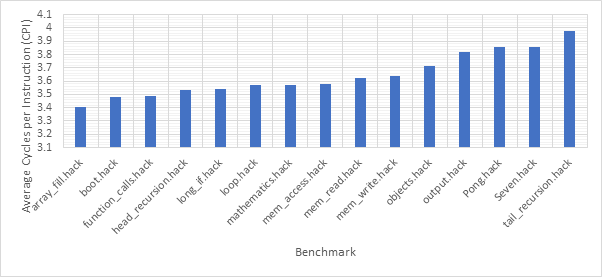

Before exploring any of the optimised configurations, it makes sense to take a look at the base configuration and the benchmark programs in order to gain a frame of reference. Figure 12 shows the number of dynamic instructions completed during the runtime of each of the benchmark programs. None of the optimisations explored in this project reduce the number of instructions completed by the CPU and so these numbers will hold for every optimised configuration. Figure 13 shows the CPI performance of the base configuration on each of the benchmarks. A lower CPI indicates a better performance. The red line shows the average CPI performance across all of the benchmarks. This then, approximately 14.5, is the number to beat.
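For reference, CPI (cycles per instruction) is simply the cycle count divided by the number of dynamic instructions completed. The sketch below assumes that the average is the unweighted mean of the per-benchmark CPI values; the function names are hypothetical and the numbers in the usage lines are made-up placeholders, not measurements from this project.

```python
def cpi(cycles, instructions):
    """Cycles per instruction for a single run (lower is better)."""
    return cycles / instructions


def mean_cpi(runs):
    """Unweighted mean CPI over (cycles, instructions) pairs.

    Assumption: each benchmark contributes equally, regardless of length.
    """
    values = [cpi(cycles, instructions) for cycles, instructions in runs]
    return sum(values) / len(values)


# Placeholder values for illustration only.
example_runs = [(1200, 100), (3200, 200)]
example_average = mean_cpi(example_runs)
```

An alternative would be to divide total cycles by total instructions, which would weight longer benchmarks more heavily.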

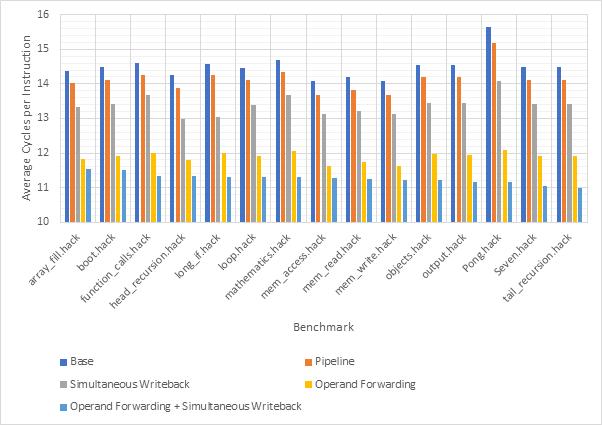

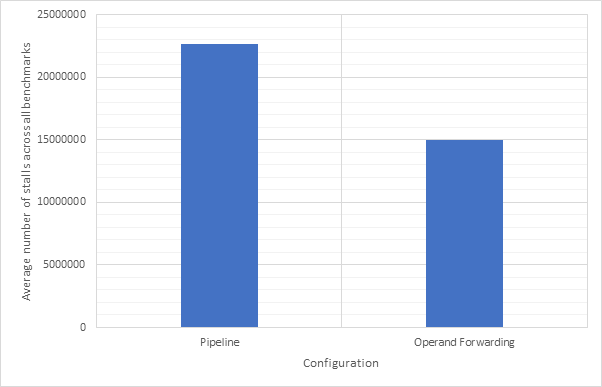

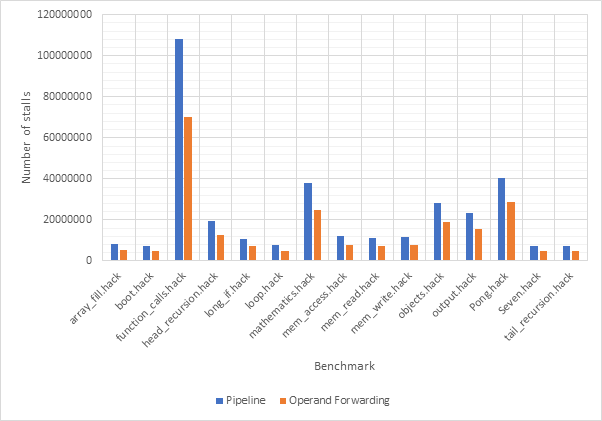

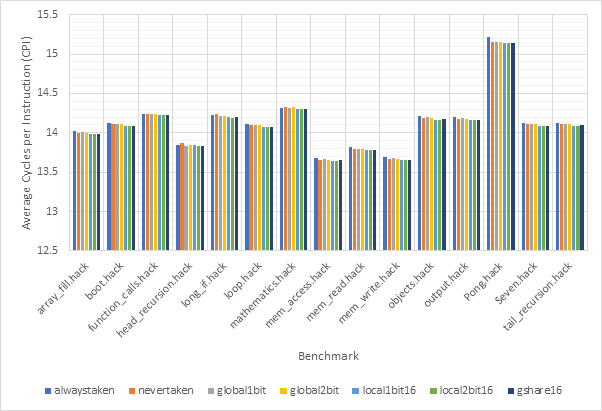

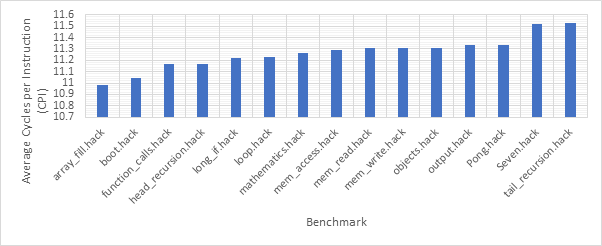

Pipelines

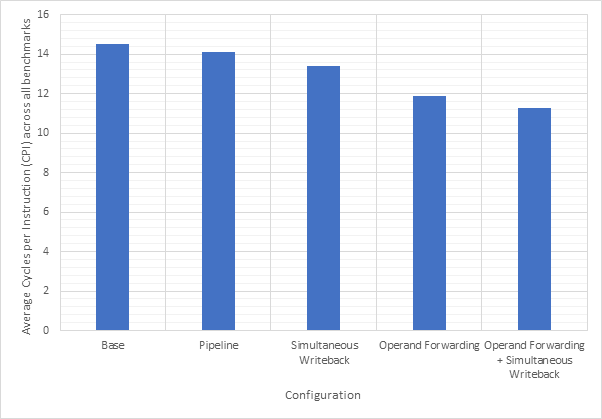

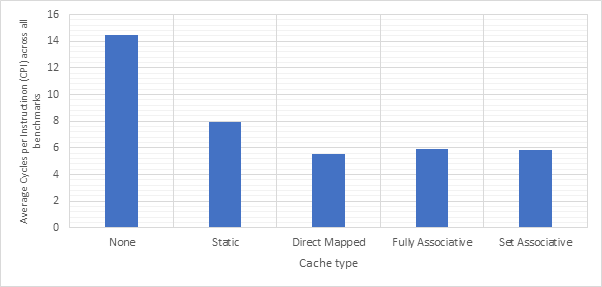

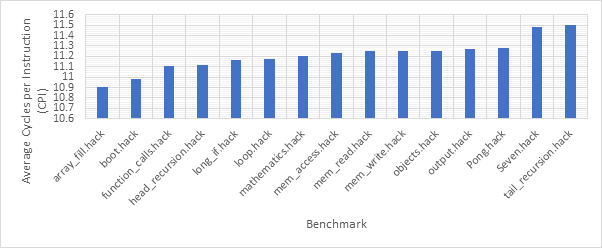

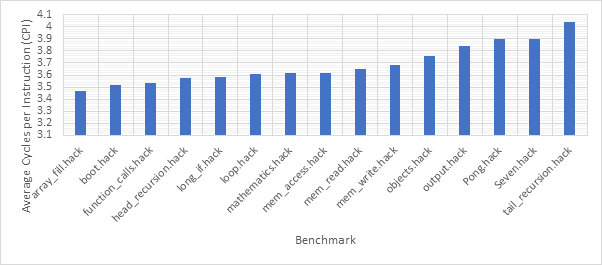

Figure 14 shows the average CPI across all 15 benchmarks for the base configuration, pipelined configuration, pipeline w/ simultaneous writeback, pipeline w/ operand forwarding and pipeline w/ simultaneous writeback and operand forwarding. Simultaneous writeback and operand forwarding are both pipeline forwarding techniques. We can see that enabling pipelining alone offers a fairly paltry 2.5% performance improvement. This is likely because the pipeline spends a significant number of cycles stalled waiting for the execute or writeback stage to conclude. Both pipeline forwarding techniques help to alleviate this.

The simultaneous writeback technique enables the jump sub-stage to occur at the same time as the writeback stage, effectively reducing the number of cycles required to complete the execute stage for instructions that include a jump. Whilst this only affects a relatively small number of instructions, this optimisation offers a further 5% performance improvement over the pipelined configuration and an 8% improvement over the base configuration. The operand forwarding technique directly reduces the number of stalls: it enables the operand fetch stage to retrieve operands directly from the writeback unit, eliminating the need to wait for writeback to complete. This affects a larger number of instructions than the simultaneous writeback optimisation and results in a much larger performance improvement of 16% over the pipelined configuration and 18% over the base configuration. Since these optimisations target different areas of the CPU, they synergise well and enabling them both offers a 20% improvement over the pipelined configuration and a 22% improvement over the base configuration. Figure 15 shows the same information, but individually for each benchmark, rather than as an average.

Figure 16 shows the improvement in the average number of stalls across all benchmarks after enabling operand forwarding, whilst Figure 17 shows this for each benchmark individually. These graphs show that the CPI performance improvement achieved when enabling operand forwarding is due to the substantial reduction in the number of stalls.
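The relationship between the "over the pipelined configuration" and "over the base configuration" percentages quoted for Figure 14 can be checked with a little arithmetic. The sketch below assumes that each improvement is a relative reduction in average CPI, so successive improvements compound multiplicatively; the function name is hypothetical.

```python
def improvement_over_base(step_improvements):
    """Combine successive relative improvements into one overall figure.

    Each step is a fraction (0.05 means 5%) measured against the result of
    the previous step, so the remaining CPI fractions multiply together.
    """
    remaining = 1.0
    for step in step_improvements:
        remaining *= 1.0 - step
    return 1.0 - remaining


# 2.5% from pipelining, then the quoted gain over the pipelined configuration.
writeback_only = improvement_over_base([0.025, 0.05])
forwarding_only = improvement_over_base([0.025, 0.16])
both_techniques = improvement_over_base([0.025, 0.20])
```

Under this assumption the three results come out at roughly 7.4%, 18.1% and 22.0%, in line with the quoted 8%, 18% and 22% given that the input percentages are themselves rounded.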

Branch Prediction

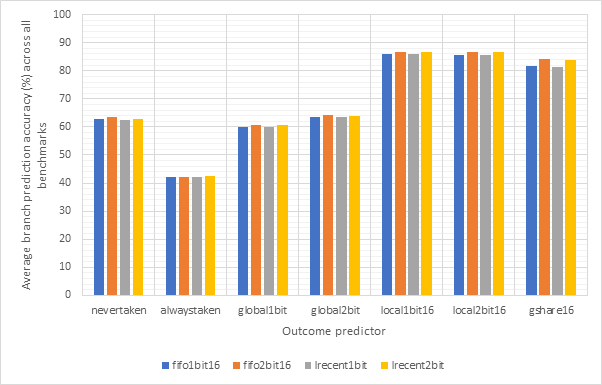

Outcome Prediction

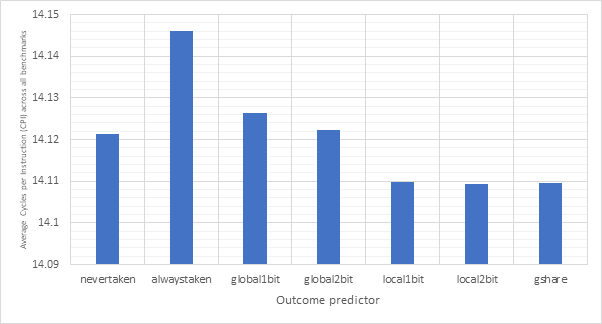

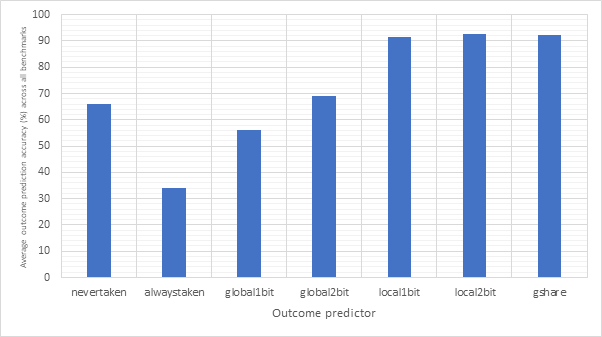

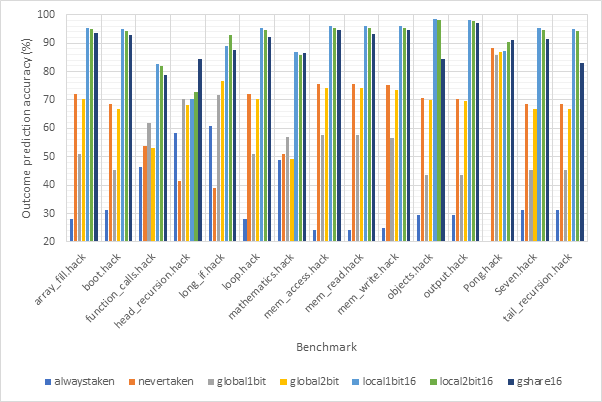

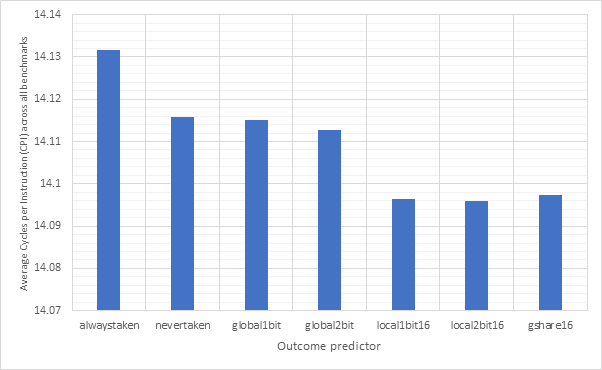

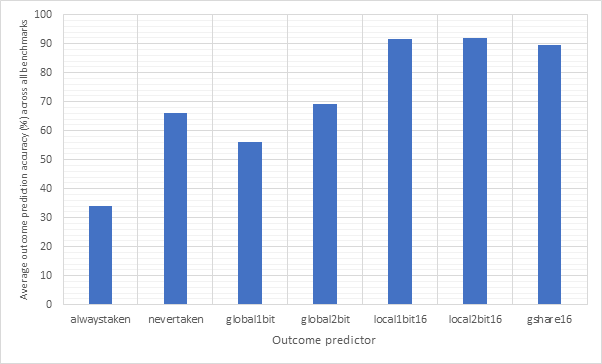

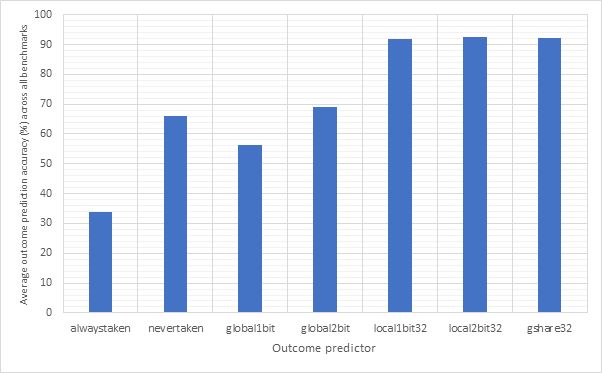

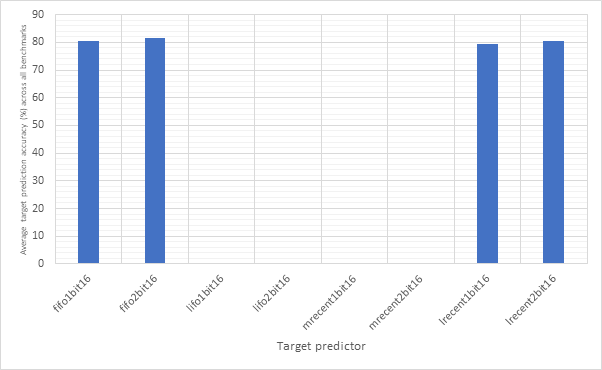

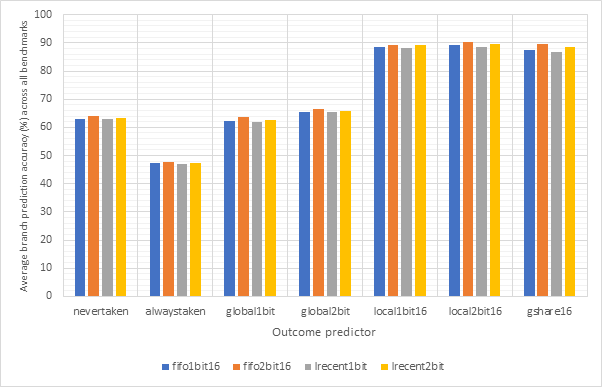

Figure 18 shows the average CPI performance of all configurations utilising each of the 7 outcome predictors. This data includes every target predictor and so may be somewhat skewed in cases where an outcome prediction is correct but the target prediction is wrong. For predictors that have a size parameter (local1bit, local2bit and gshare), this data also includes every size. Figure 19 shows the same configurations, but considers outcome prediction accuracy instead of CPI. These two graphs are highly and inversely correlated: a high prediction accuracy results in a lower CPI. Note the y-axis scale on the CPI graph: none of these strategies has a large influence on the overall performance of the system.

Never taken is effectively the strategy used by pipelined configurations without branch prediction, so even though never taken is correct in around 70% of cases, it only derives a performance advantage when dealing with unconditional jumps. Conversely, the always taken strategy would derive a performance improvement when both outcome and target are correctly predicted; however, due to the low accuracy of this predictor, it results in degraded performance. Neither the global1bit nor the global2bit strategy is able to exhibit a performance improvement over the (pipelined) nevertaken configuration, suggesting that jumps in the benchmark programs are not highly correlated globally. The local1bit, local2bit and gshare strategies all achieve similar performance, with the gshare strategy achieving a slightly lower performance and accuracy than the local2bit predictor. This suggests that jumps in the benchmark programs are more locally correlated than they are globally.
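To make the local versus global distinction concrete, the following sketch contrasts a local 2-bit predictor with gshare. It assumes the usual textbook formulations (a table of 2-bit saturating counters, indexed either by the branch address alone or by the address XORed with a global history register) and is not taken from the simulator. The class names, the initial counter value and the modulo indexing are assumptions, and the table size is assumed to be a power of two.

```python
class Local2Bit:
    """One 2-bit saturating counter per table slot, indexed by address."""

    def __init__(self, size):
        self.size = size  # assumed to be a power of two
        self.counters = [1] * size  # assumed start: weakly not taken

    def _index(self, address):
        return address % self.size

    def predict(self, address):
        """Predict taken when the counter is in its upper half (2 or 3)."""
        return self.counters[self._index(address)] >= 2

    def update(self, address, taken):
        i = self._index(address)
        if taken:
            self.counters[i] = min(self.counters[i] + 1, 3)
        else:
            self.counters[i] = max(self.counters[i] - 1, 0)


class GShare(Local2Bit):
    """Same counter table, but indexed by address XOR global history."""

    def __init__(self, size):
        super().__init__(size)
        self.history = 0  # recent outcomes of all branches, newest in bit 0

    def _index(self, address):
        return (address ^ self.history) % self.size

    def update(self, address, taken):
        super().update(address, taken)
        # Shift the new outcome in and keep only as many bits as the index.
        self.history = ((self.history << 1) | int(taken)) % self.size
```

In this formulation gshare can learn patterns that depend on recent history (for example, a branch that strictly alternates), whereas the local predictor only tracks the bias of each individual address.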

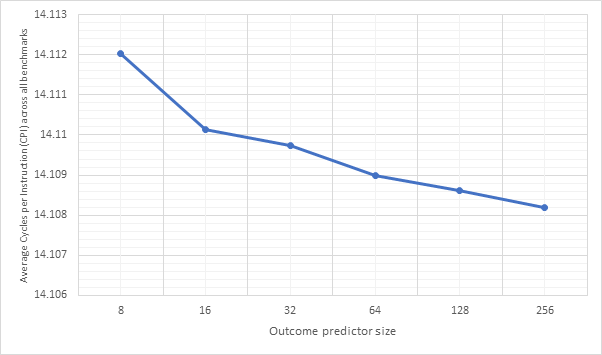

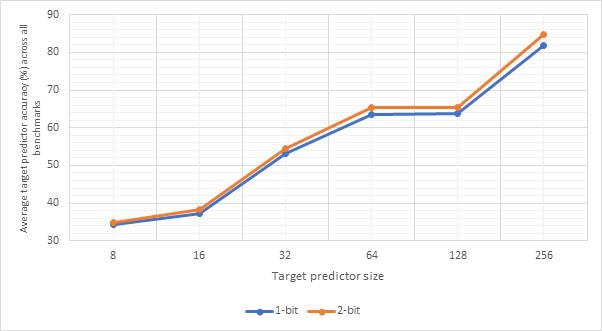

Figure 20 compares the performance of local1bit, local2bit and gshare, the highest performing strategies, by size. Figure 21 shows the same configurations but compares prediction accuracy. Here, we see a high correlation between outcome accuracy and predictor size. Unsurprisingly, larger predictors exhibit a higher accuracy and better performance, although larger predictors would also be more expensive. The graphs seem to show diminishing returns as size continues to increase, with the most pronounced improvement between sizes 8 and 16.

Figure 22 and Figure 23 show the CPI performance and outcome accuracy respectively for 16-line local1bit, local2bit and gshare strategies as well as the 4 unsized strategies for each benchmark individually. In this case the target predictor is set to fifo2bit256, the most accurate target predictor, to try to reduce the influence of the target predictor on these results. Here we can see once again that the 3 sized strategies outclass the other strategies and once again local2bit consistently outperforms the gshare strategy. The only exception to this is the Pong benchmark, perhaps showing that real-world benchmarks are more globally correlated than the synthetic benchmarks used in this project.

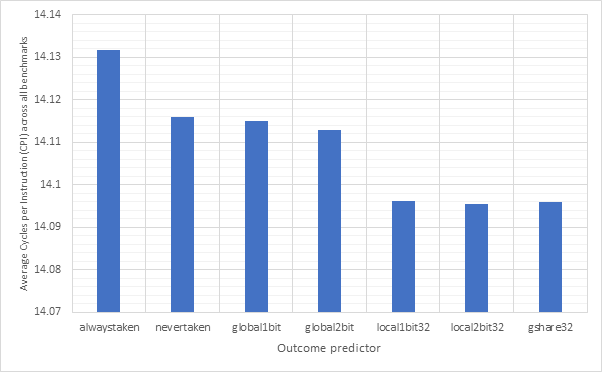

Figure 24 and Figure 25 are similar to the previous two graphs, but show averages across all of the benchmarks. Meanwhile, Figure 26 and Figure 27 show the same information, but for 32-line sized predictors. Here we can see that local1bit, local2bit and gshare remain the strongest predictors. However, the performance improvements from 16-line to 32-line are fairly negligible, with only a 0.003% CPI and 0.6% accuracy improvement between the 16-line and 32-line local2bit strategies, suggesting 16 may be a sweet spot.

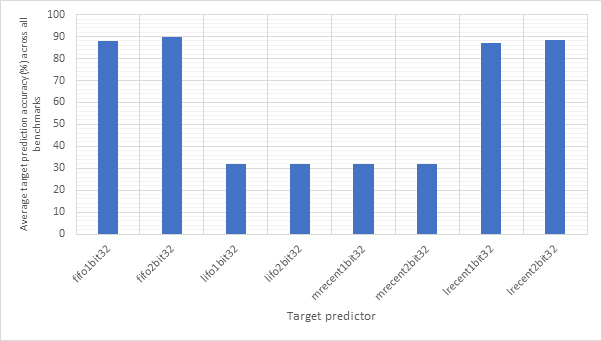

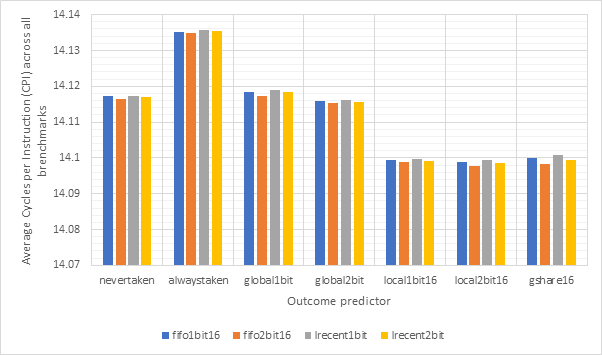

Target Prediction

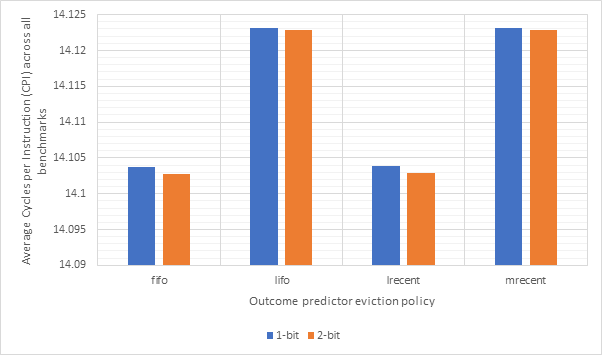

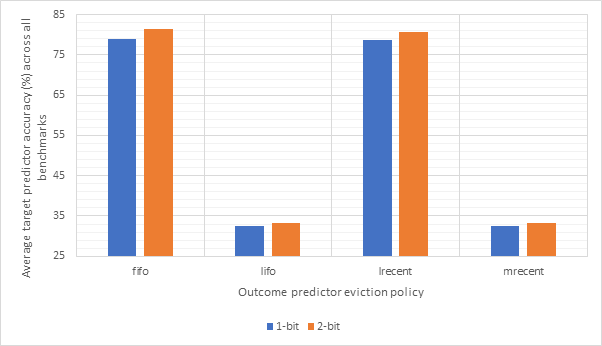

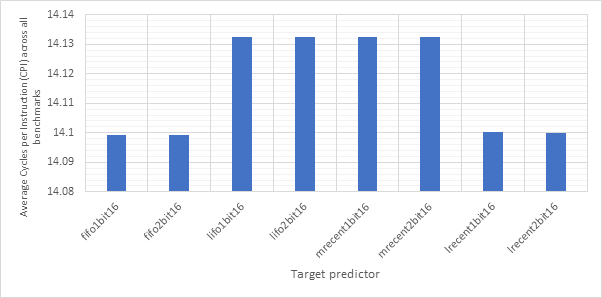

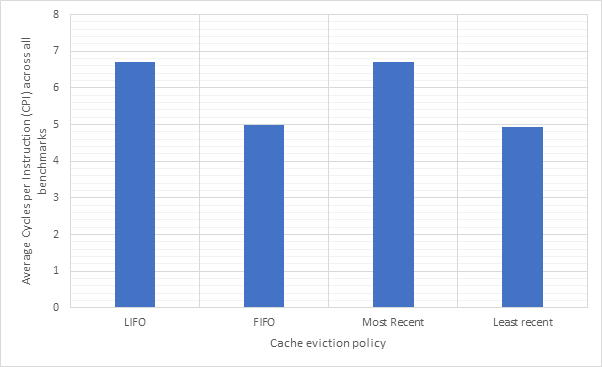

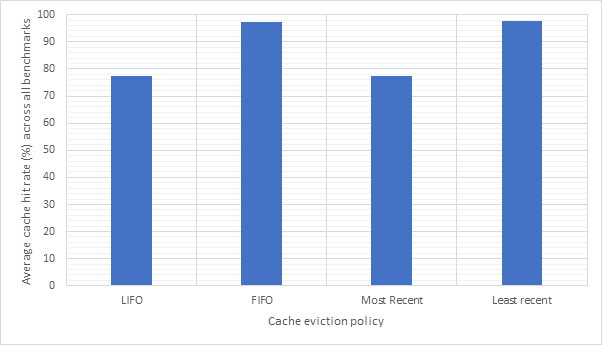

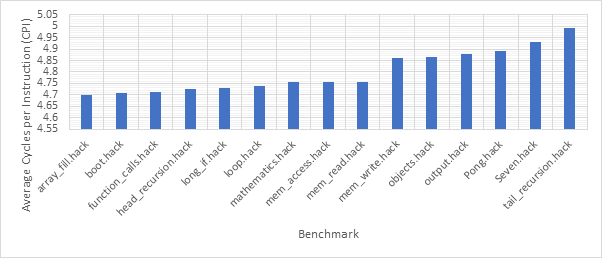

Figure 28 and Figure 29 compare the CPI performance and target prediction accuracy for each of the 2 types (1-bit and 2-bit) and the 4 eviction policies across all benchmarks. Here, we can see that CPI performance and target prediction accuracy are highly and inversely correlated. We can also see that the fifo and lrecent policies have similar performance to each other, as do the lifo and mrecent strategies. This is likely because both fifo and lrecent look to evict less recently used items, whilst lifo and mrecent evict more recently used items. Fifo and lrecent dramatically outperform lifo and mrecent in terms of accuracy, although all the CPI scores are fairly similar indicating that branch prediction does not have a major impact on CPI. The type of the predictor does not seem to have much of an impact, with 2-bit predictors consistently outperforming 1-bit predictors, but not by much. Therefore, the additional complexity of a 2-bit predictor may not be worthwhile.
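The four eviction policies can be summarised by which entry they pick as the victim when the buffer is full. The sketch below assumes that fifo and lifo order entries by insertion time whilst lrecent and mrecent order them by last use; the function name and the timestamp representation are hypothetical.

```python
def choose_victim(policy, entries):
    """Pick the address to evict from a full target buffer.

    entries maps address -> (inserted_at, last_used_at), where both values
    are monotonically increasing timestamps (hypothetical representation).
    """
    if policy == "fifo":      # evict the oldest insertion
        return min(entries, key=lambda a: entries[a][0])
    if policy == "lifo":      # evict the newest insertion
        return max(entries, key=lambda a: entries[a][0])
    if policy == "lrecent":   # evict the least recently used entry
        return min(entries, key=lambda a: entries[a][1])
    if policy == "mrecent":   # evict the most recently used entry
        return max(entries, key=lambda a: entries[a][1])
    raise ValueError(f"unknown eviction policy: {policy}")
```

Under these assumptions, lifo and mrecent tend to evict whichever entry was touched last, so a full buffer largely freezes on its earliest contents. This is one plausible explanation for their much lower accuracy.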

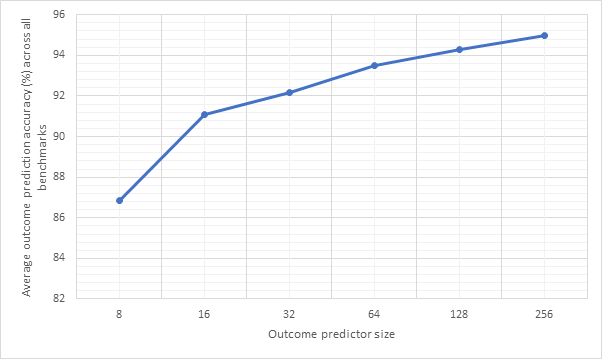

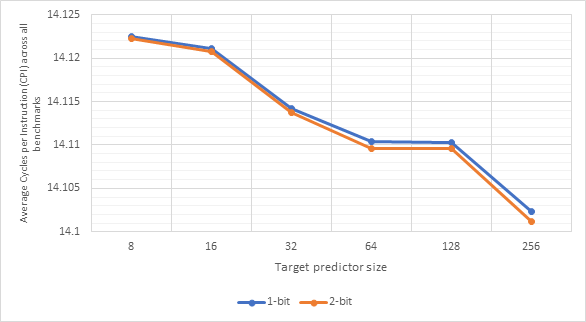

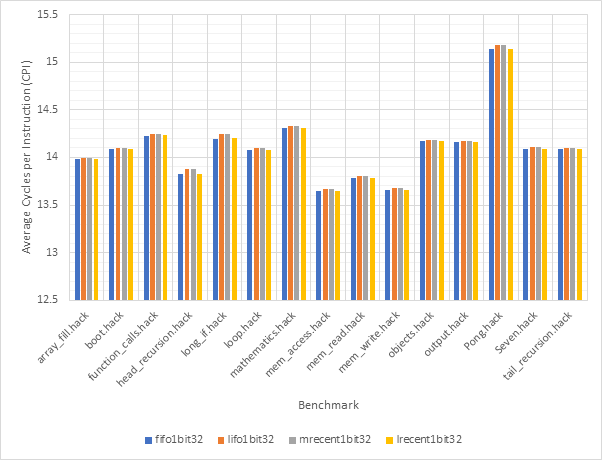

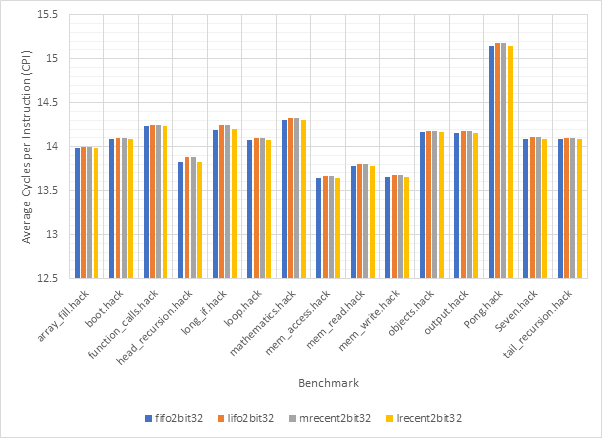

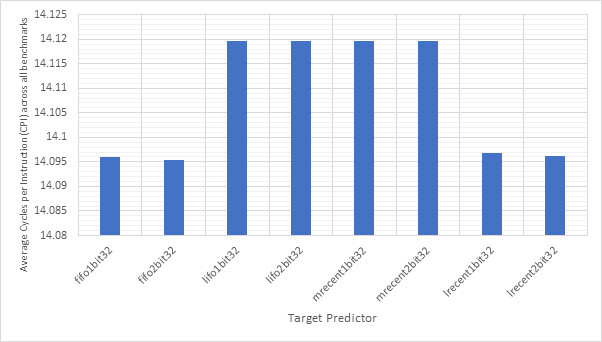

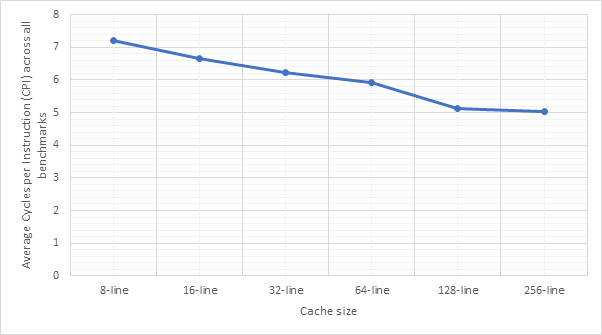

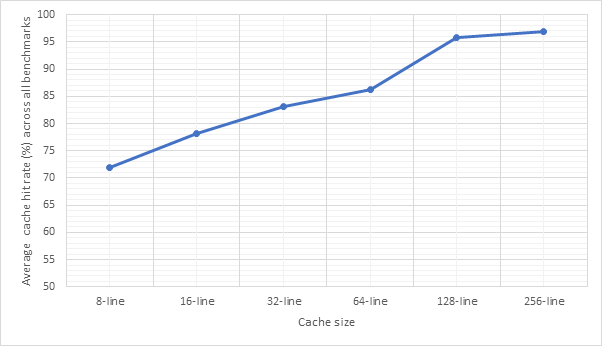

Figure 30 and Figure 31 show the relationship between target predictor size and CPI or predictor accuracy respectively. Unsurprisingly, larger target predictors offer better performance as they are able to store information about more addresses without needing to make an eviction. The sweet spot is not as clear this time, with the most notable performance improvements between 16 and 32 and between 128 and 256. The poor performance of the 8- and 16-line predictors suggests that jumps from the same address are far apart from one another, meaning that smaller predictors would evict the address before it came up again. The relative stability between 32, 64 and 128 before a much larger improvement at 256 suggests that 256 may be large enough to store most of the branch addresses encountered during the benchmarks. Once again, larger predictors are more expensive.

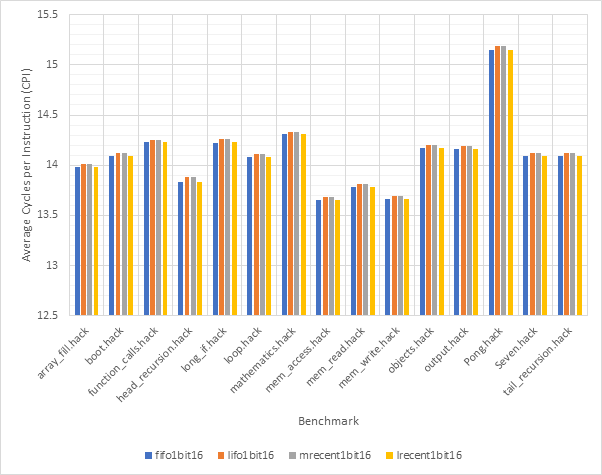

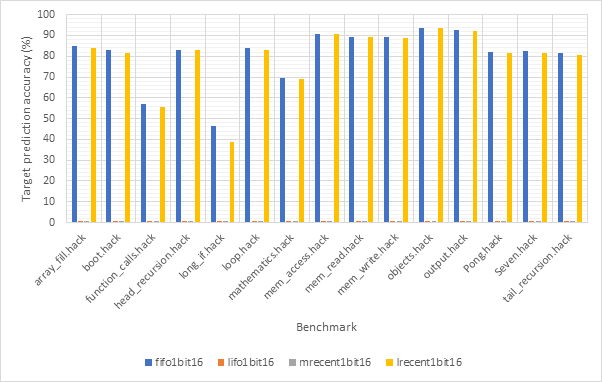

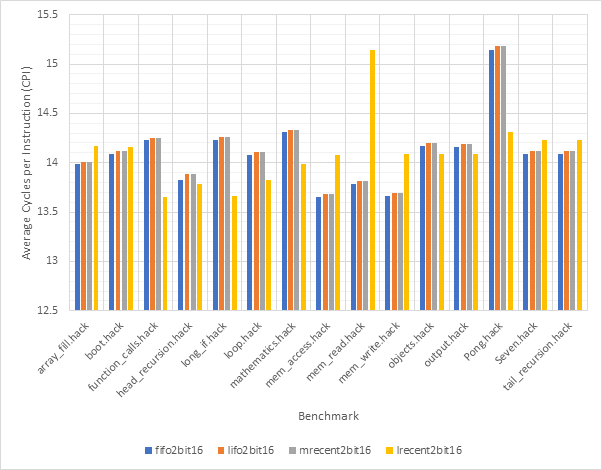

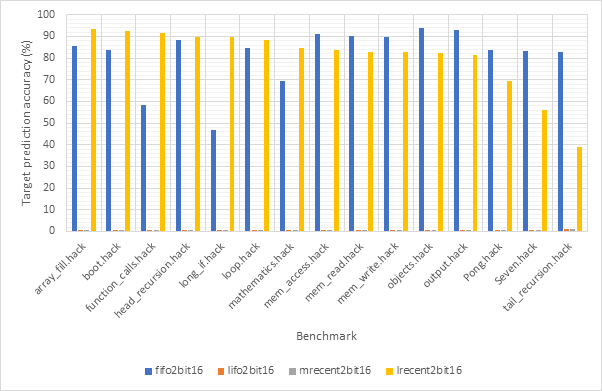

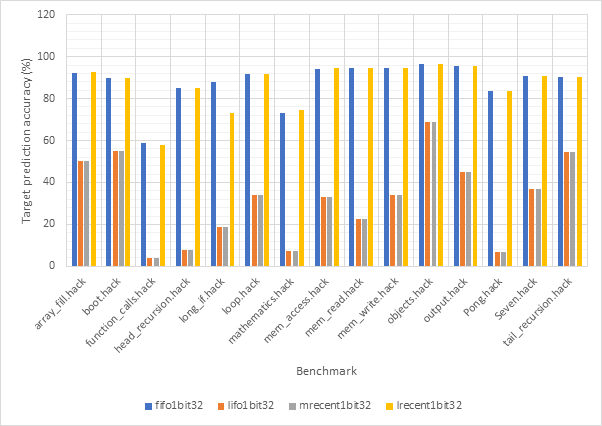

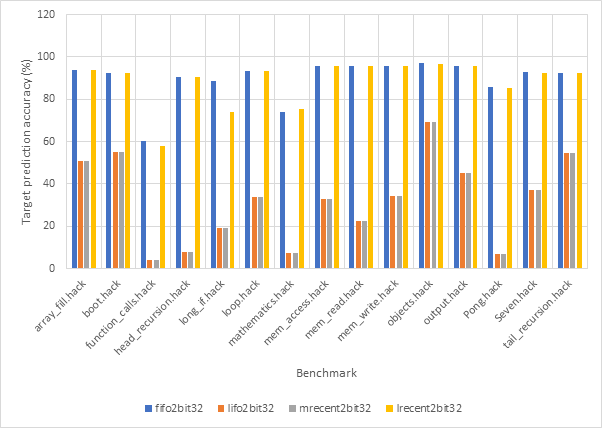

Figure 32 and Figure 33 compare the CPI performance and target prediction accuracy respectively of each of the 16-line 1-bit target predictors across each benchmark individually. The outcome predictor is set to gshare256, the highest accuracy outcome predictor encountered in this project. This is to reduce the impact of outcome mispredictions on the CPI figures. Figure 34 and Figure 35 show the same information for 16-line 2-bit target predictors, whilst Figure 36 and Figure 37 show this for 32-line 1-bit target predictors. Finally, Figure 38 and Figure 39 show this for 32-line 2-bit target predictors.

Consistent with previous observations, the performance of 1-bit and 2-bit predictors is very similar, potentially making the additional complexity of 2-bit predictors unwise. Also consistent with previous observations, it is clear that the fifo and lrecent eviction policies dramatically outclass the other 2 policies. When comparing 16-line and 32-line predictors, the 32-line predictors have much better accuracy scores, particularly when looking at the lifo and mrecent eviction policies.

Figure 40 and Figure 41 compare the average CPI and target prediction accuracy respectively of all 16-line target predictors (both 1-bit and 2-bit) across all benchmarks using the gshare256 outcome predictor. Figure 42 and Figure 43 show the same information for 32-line target predictors. Once again, the comparatively poor performance of the lifo and mrecent strategies is highlighted, although performance does improve for the 32-line version. The fifo and lrecent policies demonstrate similar performance again and since the lrecent strategy is more complex, it is likely not worth the additional expense. The same argument can be made for 1-bit predictors over 2-bit models: the performance gain may not be large enough to be worth the extra expense.

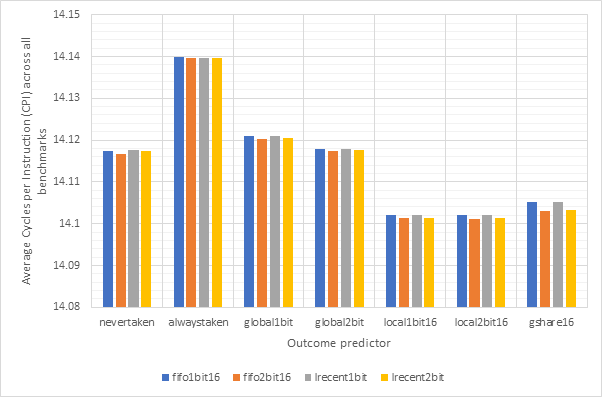

Branch Prediction

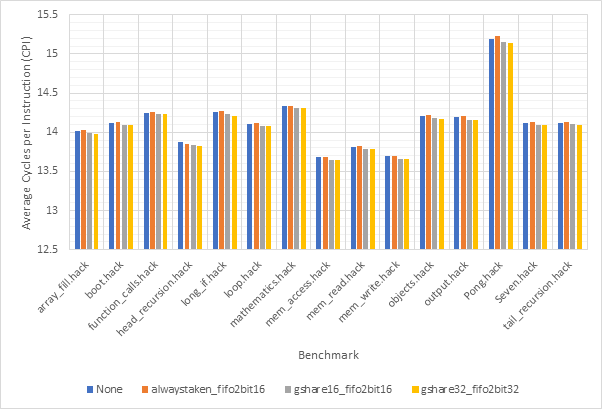

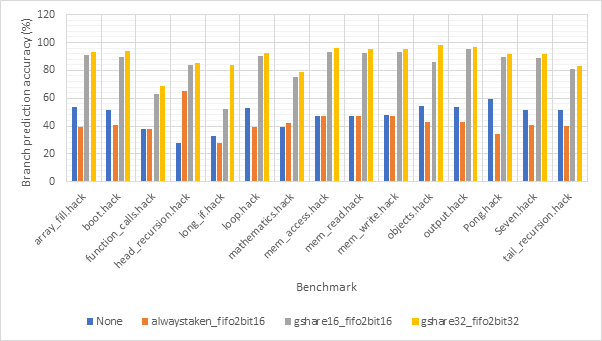

With both the target and outcome components of branch prediction now explored in detail, it’s time to take a look at branch prediction in general. Figure 44 and Figure 45 show the average CPI and branch prediction accuracy respectively for each combination of 16-line target and outcome predictors. Figure 46 and Figure 47 show the same information for 32-line target and outcome predictors. These graphs do not include the lifo or mrecent target prediction eviction policies as the poor performance of these policies excludes them from further consideration. Here we can see that within a specified size, the selection of target predictor does not make much of a difference to the performance. However, the 32-line predictors do offer significantly improved accuracy scores. The selection of outcome predictor has a larger influence on results, and local2bit and gshare show themselves to be the best outcome predictors once more.

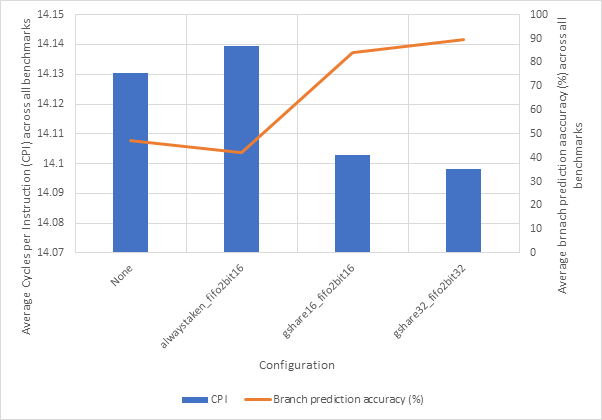

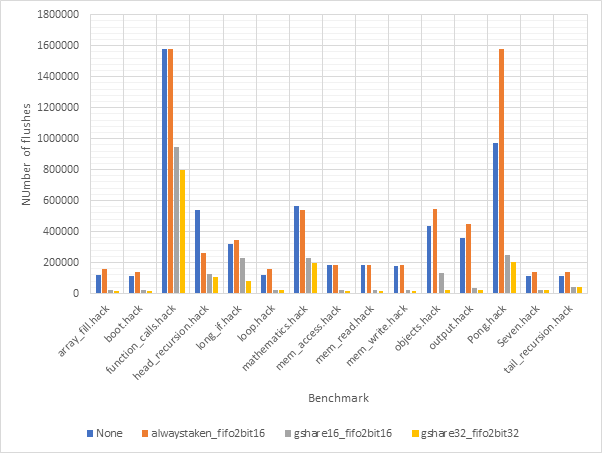

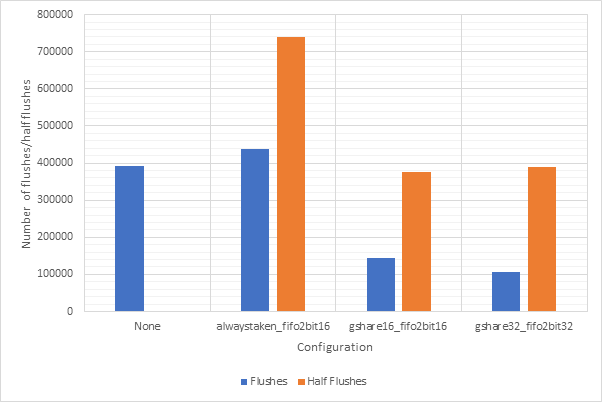

Figure 48 and Figure 49 show the average CPI and branch prediction accuracy respectively of 4 configurations for each benchmark individually. The configurations are no predictor, always taken with fifo2bit16, gshare16 with fifo2bit16 and gshare32 with fifo2bit32. Here, the two gshare-based predictors show themselves to be the best option, with the 32-line variant offering a slight performance edge over the 16-line variant. Previous observations suggested that gshare32 did not offer much of a performance advantage over gshare16 and so these gains are likely due to the improvements offered by fifo2bit32 over fifo2bit16. Therefore, a hybrid approach of 16-line outcome predictors and 32-line target predictors may be the way to go.

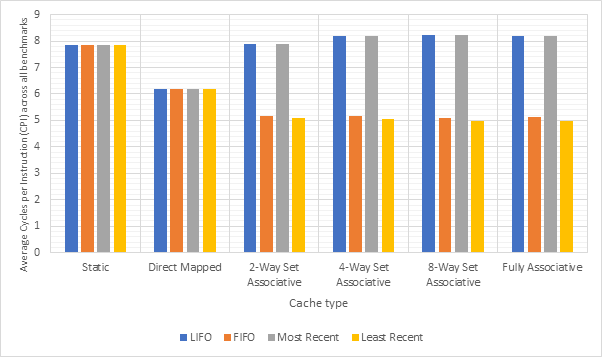

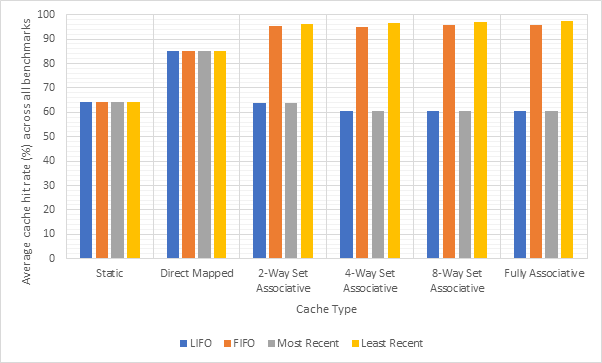

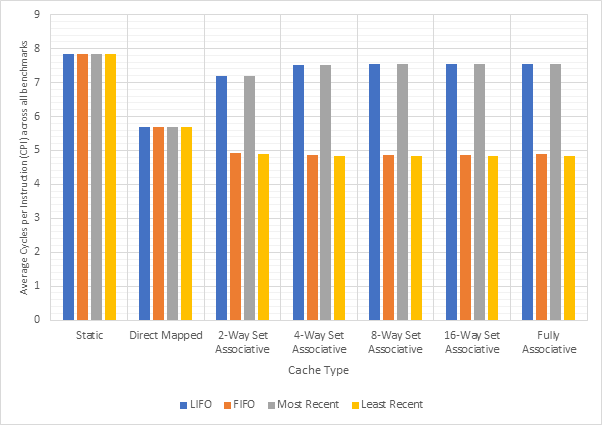

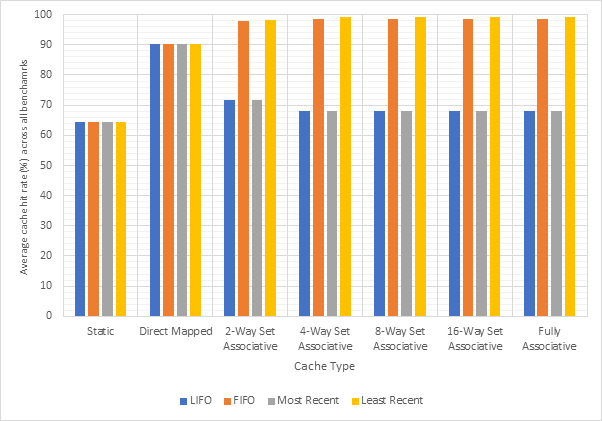

Figure 50 shows the relationship between branch prediction accuracy and average CPI performance for the 4 branch prediction configurations discussed above. This graph clearly shows the high negative correlation between branch prediction accuracy and CPI.
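One way to quantify such a relationship is the Pearson correlation coefficient, where a value close to -1 indicates a strong negative linear correlation. The sketch below is a generic implementation with a hypothetical function name. The values in the usage lines are made-up placeholders, not measurements from this project.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariance / (spread_x * spread_y)


# Placeholder values for illustration only: accuracy rises as CPI falls.
placeholder_accuracy = [1.0, 2.0, 3.0]
placeholder_cpi = [6.0, 4.0, 2.0]
r = pearson(placeholder_accuracy, placeholder_cpi)
```

Applying the same function to the accuracy and CPI columns of the results CSV would give a single number summarising the trend visible in the graph.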