A Brief History of Computing

Inspired by a recent trip to the Computer History Museum in Mountain View, California, here is a brief history of computing:

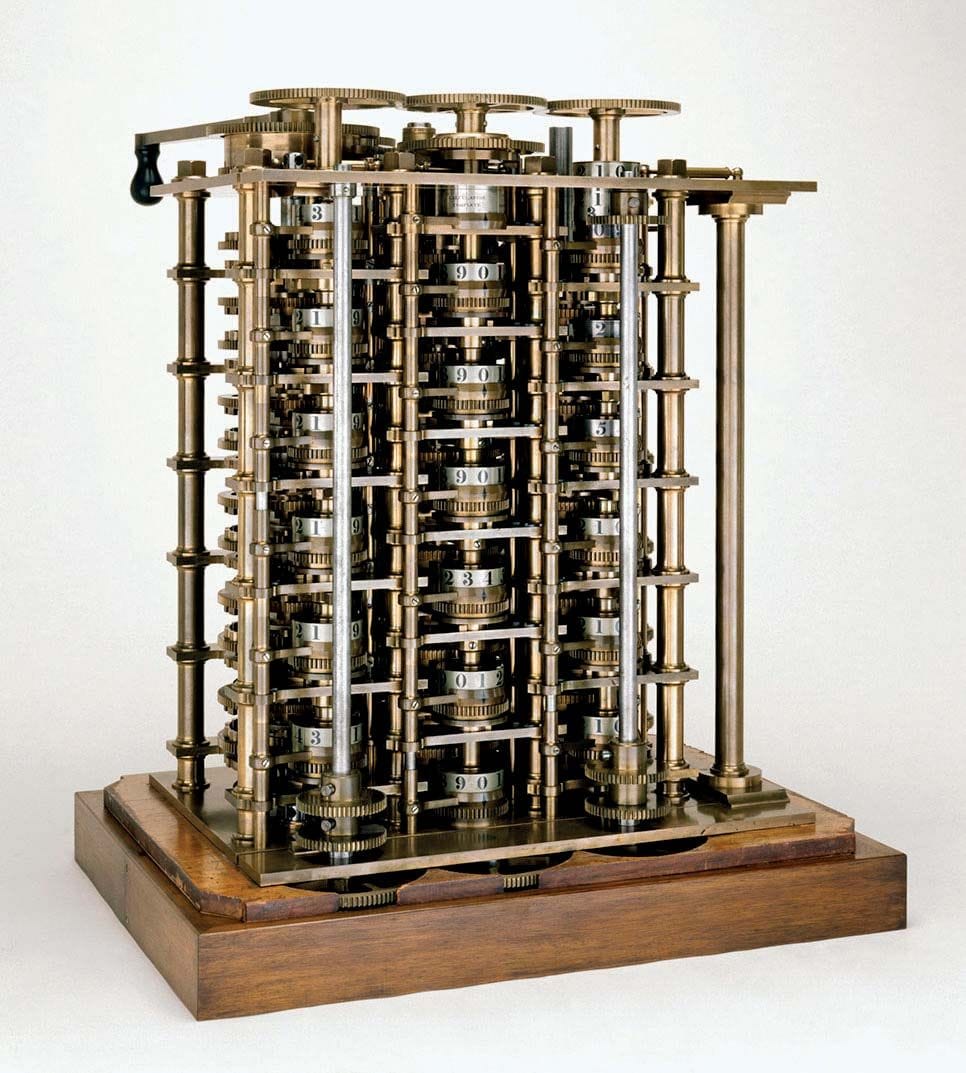

By 1822, Charles Babbage had built his first Difference Engine. Arguably the first mechanical computer, it could operate on 8-digit numbers. It was hand-cranked and worked by turning gears.

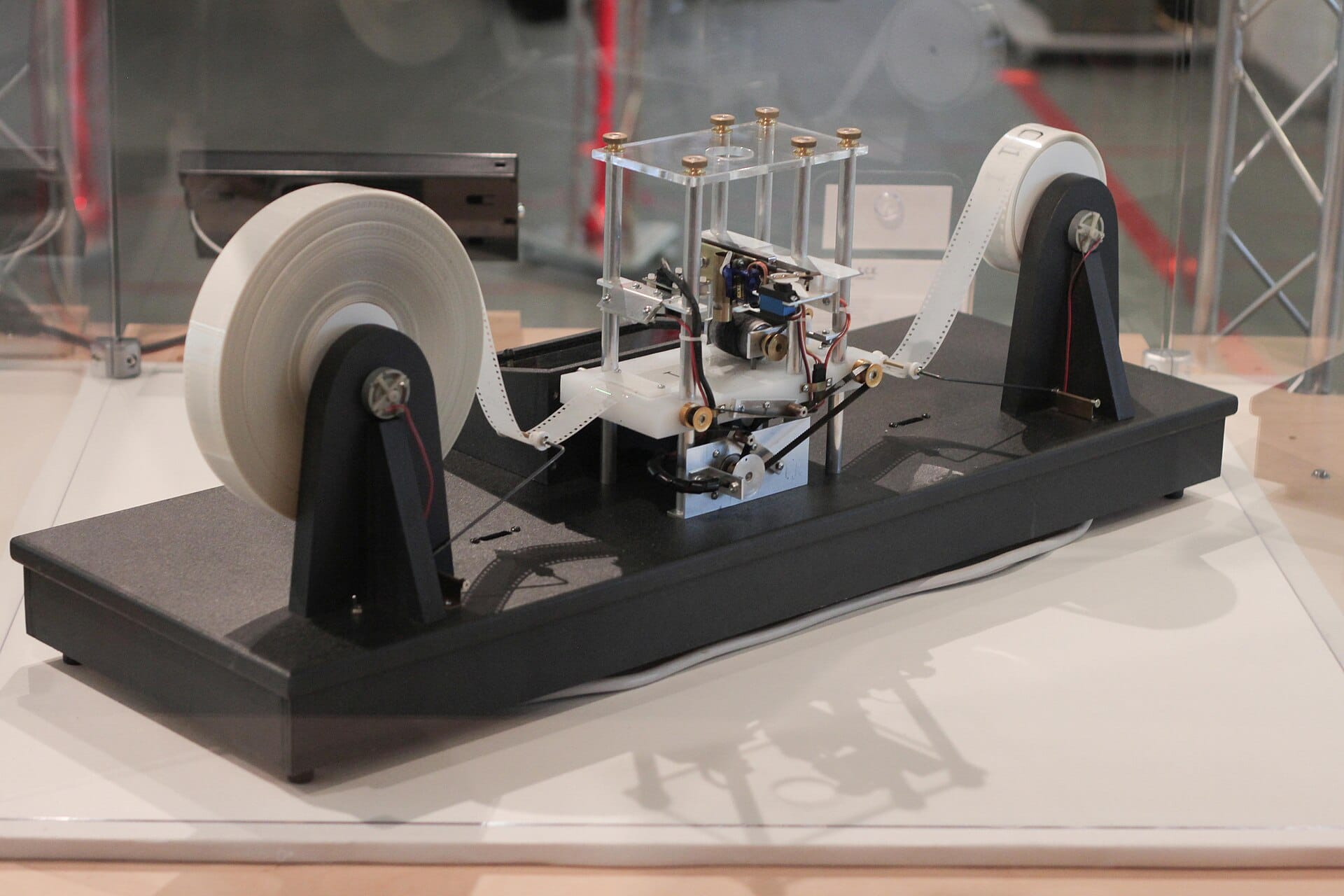

In 1936, Alan Turing introduced his “Universal Machine”. This thought experiment involves a machine that manipulates symbols on a tape according to a set of rules and is capable of carrying out any algorithm. We now call it a “Turing Machine”, and it forms the theoretical basis of modern computing.
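To make the idea concrete, here is a minimal sketch of such a machine in Python. The function name `run_turing_machine`, the rule-table format, and the binary-increment example are my own illustrative choices, not Turing's notation: each rule maps a (state, symbol) pair to the symbol to write, the direction to move the head, and the next state.

```python
def run_turing_machine(rules, tape, state="start", blank="_", max_steps=1000):
    """Run a one-tape Turing machine until it enters the 'halt' state.

    rules maps (state, symbol) -> (symbol_to_write, "L" or "R", next_state).
    The tape is stored as a dict so it can grow in either direction.
    """
    cells = dict(enumerate(tape))
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        symbol = cells.get(head, blank)        # read the symbol under the head
        write, move, state = rules[(state, symbol)]
        cells[head] = write                    # write the new symbol
        head += 1 if move == "R" else -1       # move the head one cell
        steps += 1
    if not cells:
        return ""
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(lo, hi + 1)).strip(blank)


# Hypothetical example rule set: add 1 to a binary number.
INCREMENT_RULES = {
    # Scan right until the end of the number.
    ("start", "0"): ("0", "R", "start"),
    ("start", "1"): ("1", "R", "start"),
    ("start", "_"): ("_", "L", "carry"),
    # Walk back left, turning 1s into 0s until the carry is absorbed.
    ("carry", "1"): ("0", "L", "carry"),
    ("carry", "0"): ("1", "L", "halt"),
    ("carry", "_"): ("1", "L", "halt"),
}

print(run_turing_machine(INCREMENT_RULES, "1011"))  # prints 1100
```

Swapping in a different rule table gives a different program on the same "hardware", which is the heart of Turing's idea.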

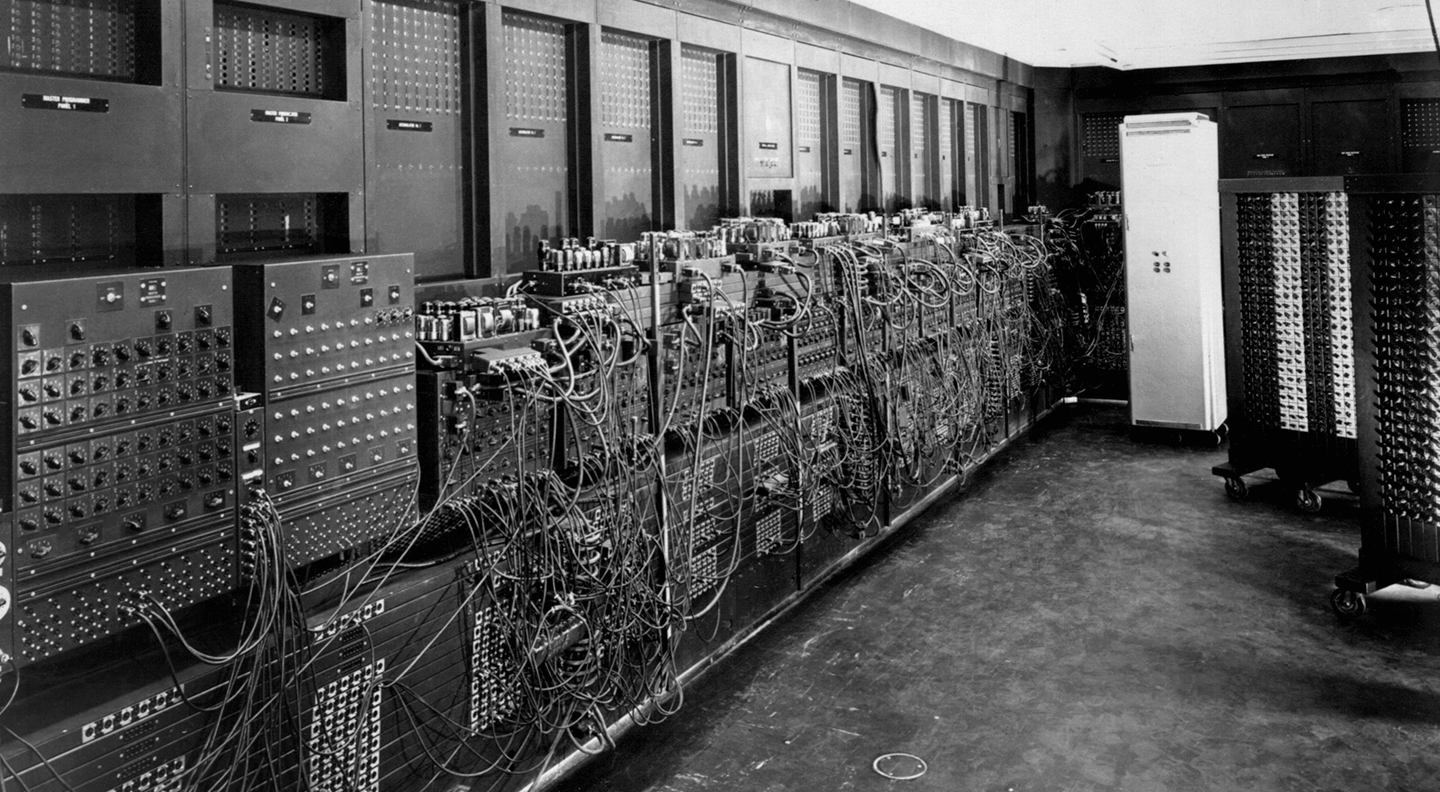

In 1945, the first programmable, general-purpose electronic computer was built at the University of Pennsylvania. ENIAC took up a whole room but was much faster than older designs, as it did not rely on gears or cams. ENIAC was also Turing-complete, meaning it could be re-programmed to run any algorithm.

In 1949, the Electronic Delay Storage Automatic Calculator (EDSAC) was developed at the University of Cambridge. EDSAC was the first practical stored-program computer, meaning programs could be loaded onto the machine without the physical re-configuration that earlier machines required.

The invention of the integrated circuit (computer chip) in 1958 paved the way for the first minicomputer, DEC's PDP-1, in 1960. Smaller and more affordable than previous machines, the PDP-1 made computing economically viable, and later PDP models were where UNIX and C were born.

Whilst home computers were already available to hobbyists and enthusiasts, computing became widely available with the release of the IBM PC in 1981. Competitors were able to clone IBM's design, creating a de facto open standard. Modern computers are still very similar to the original PC.

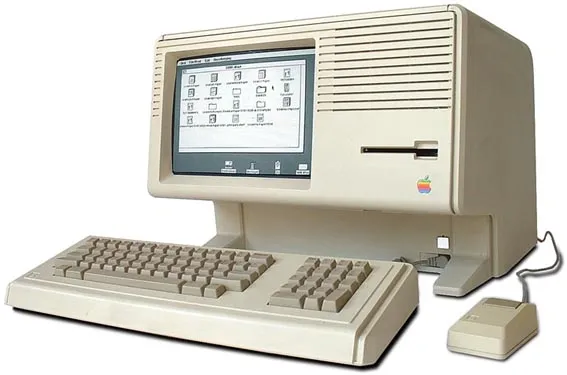

In 1983, Apple released the first personal computer featuring a Graphical User Interface (GUI), the LISA. The LISA was too expensive to be a success, but Apple followed up with the hugely successful Macintosh a year later. The next year, Microsoft responded with Windows 1.0.

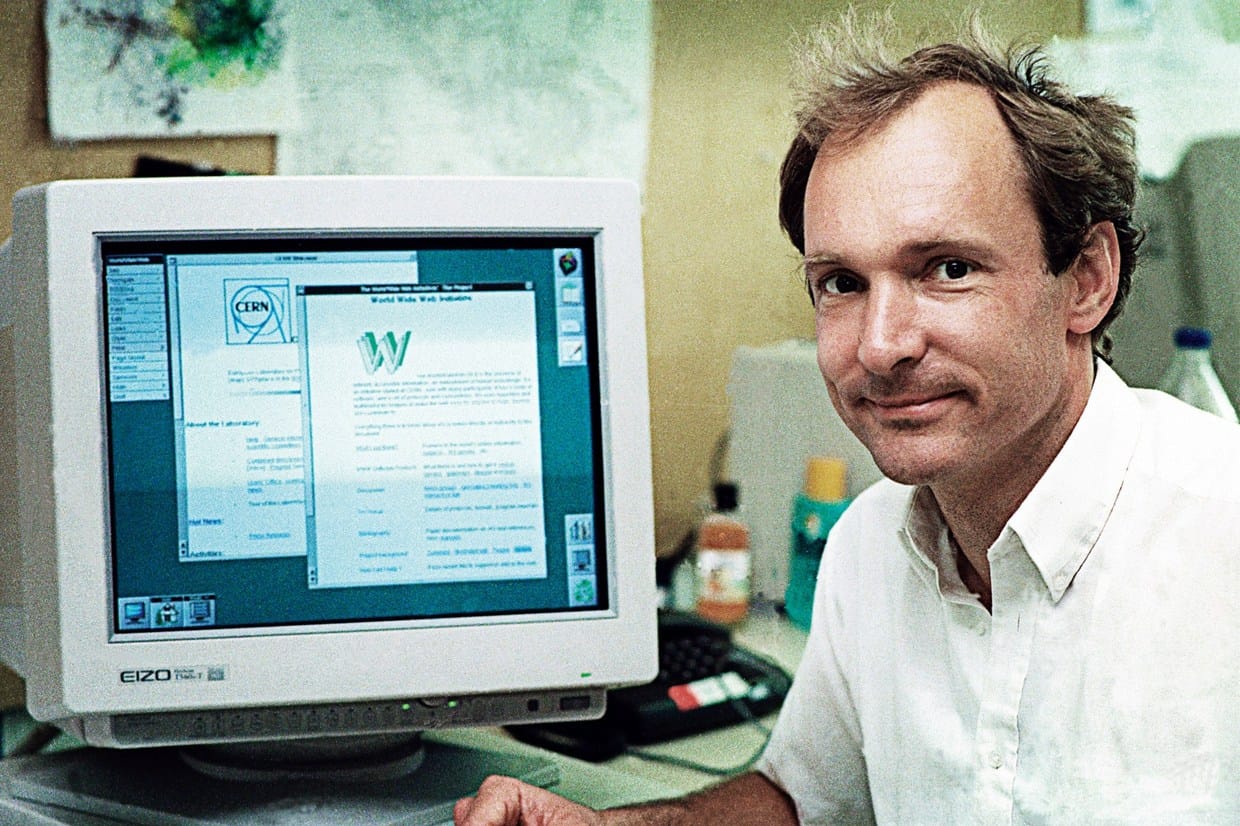

The precursor to the internet, ARPANET, was originally established in 1969. The introduction of the TCP/IP stack in 1982 laid the foundations for the World Wide Web. In 1990, Tim Berners-Lee developed HTML and the first web browser, ushering in the internet as we know it today.

In 2007, Steve Jobs introduced the iPhone to the world. Whilst not the first smartphone, the iPhone finally brought pocket-sized computing to the masses. The modern era of always-on, always-connected computing can be traced back to this pivotal moment.

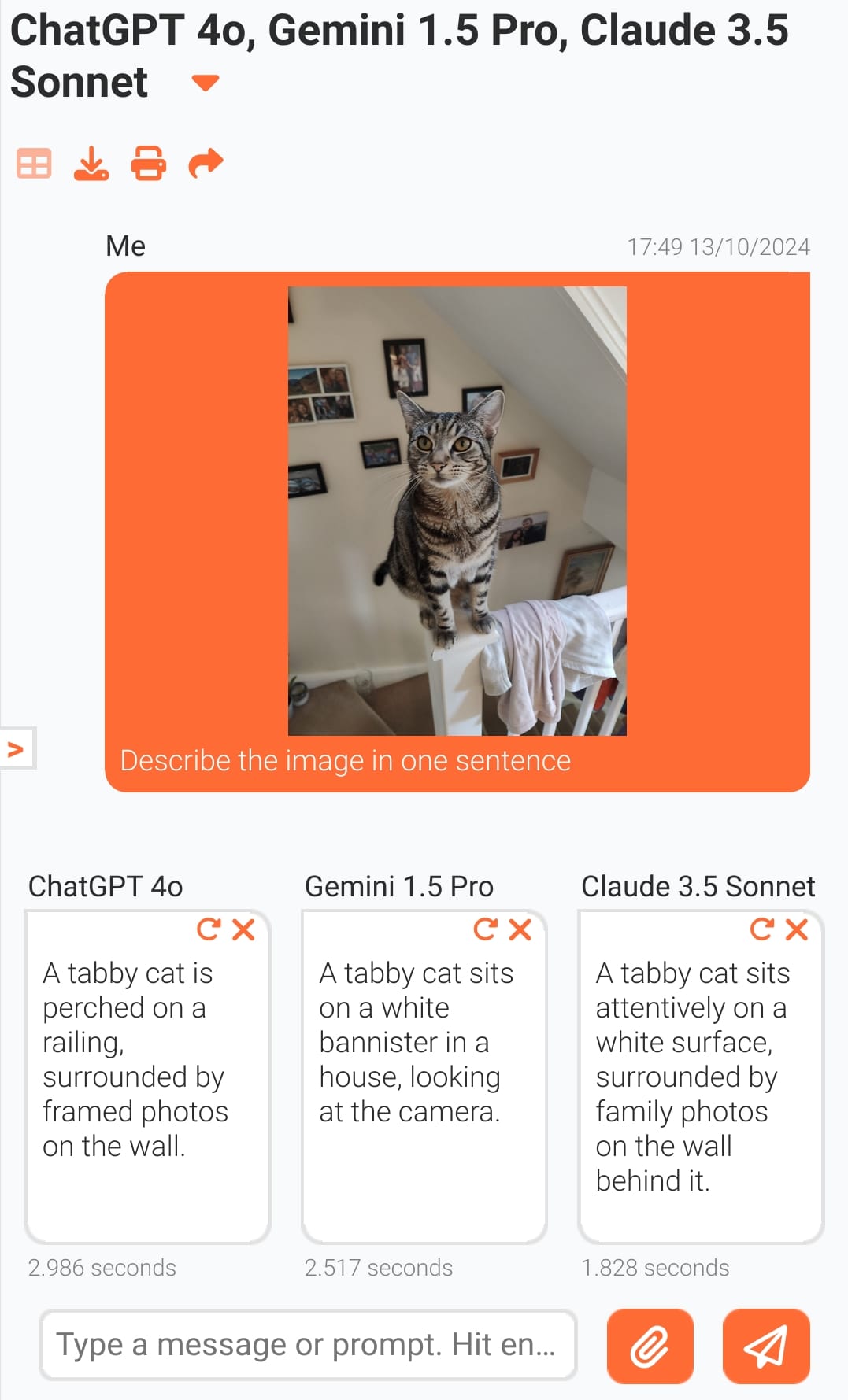

Today, we stand on the precipice of a new revolution in computing: Artificial Intelligence. AI is not new; it has been around since the 1950s, but recent advances in processing power, together with the vast quantity of data available on the internet, have allowed it to flourish.

The transformer architecture behind today's large language models (LLMs) was introduced by Google researchers in 2017. OpenAI brought ChatGPT to the world in 2022. In the two years since, we have seen an explosion in AI models and performance. Take a look at what's possible today by exploring and comparing the leading AI models with my AI platform AnyModel.