Generating Cartoon Faces with GAN

In a final year group project, myself and a number of friends formed a small research group experimenting with different methods of using artificial intelligence to generate images. We decided to generate cartoon (anime) faces as we were able to find a suitable dataset online (https://www.kaggle.com/splcher/animefacedataset). Each member of our group took a different approach, I decided to adopt a GAN-based approach.

GAN stands for Generative Adversarial Network and is a technique in which two neural networks compete against each other in a contest. In this case, one network (the generator) would generate images whilst a second (the discriminator) would guess whether the image was real (from the dataset) or fake (generated by the generator). The two networks are trained in tandem and so improve at a similar rate. Initially the images look nothing like the dataset, but the discriminator also hasn't yet learnt how to tell them apart. As the discriminator improves at telling the images apart, the generator must produce more realistic images in order to trick it.

There are a number of possible neural architectures for both the discriminator and generator. In my research I focused on experimenting with dense and convolution layers in various configurations. I was eventually able to produce surprisingly realistic images with both architectures.

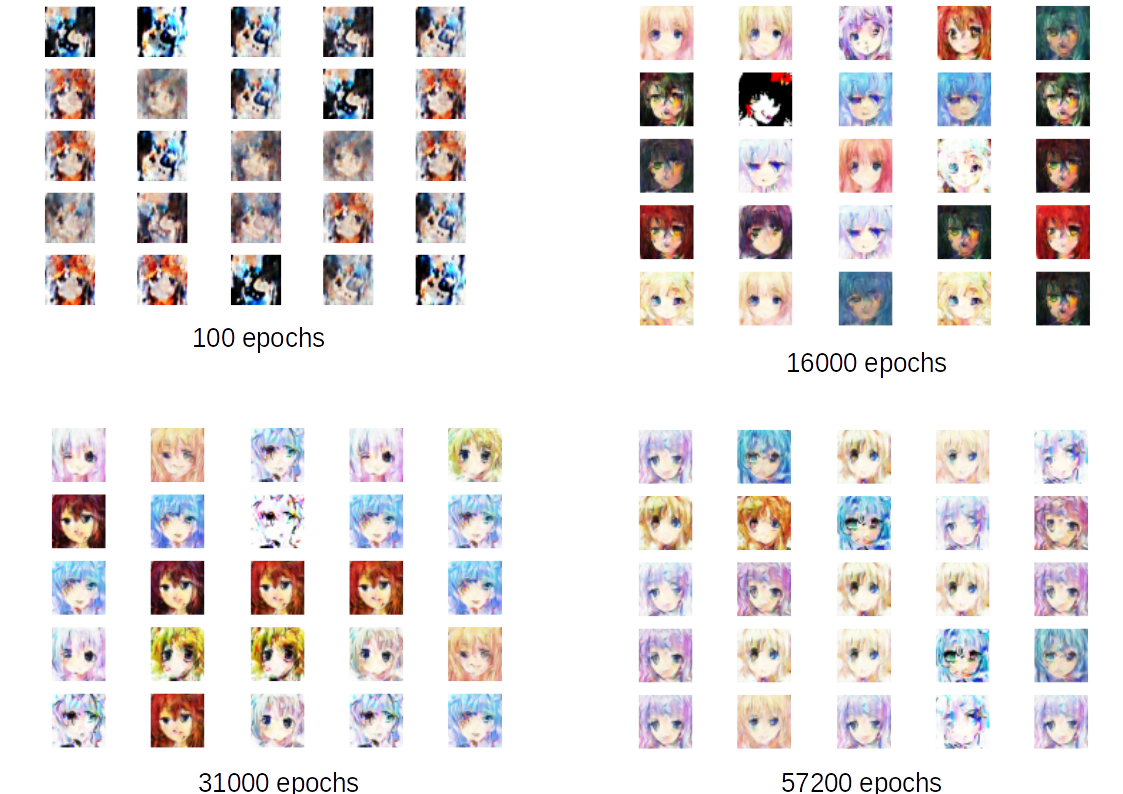

Dense Architectures

Dense architectures trained much more quickly, but required substantially more training iterations (epochs) to generate high quality images. Dense architectures generally produced more pixelated images then Convolutional.

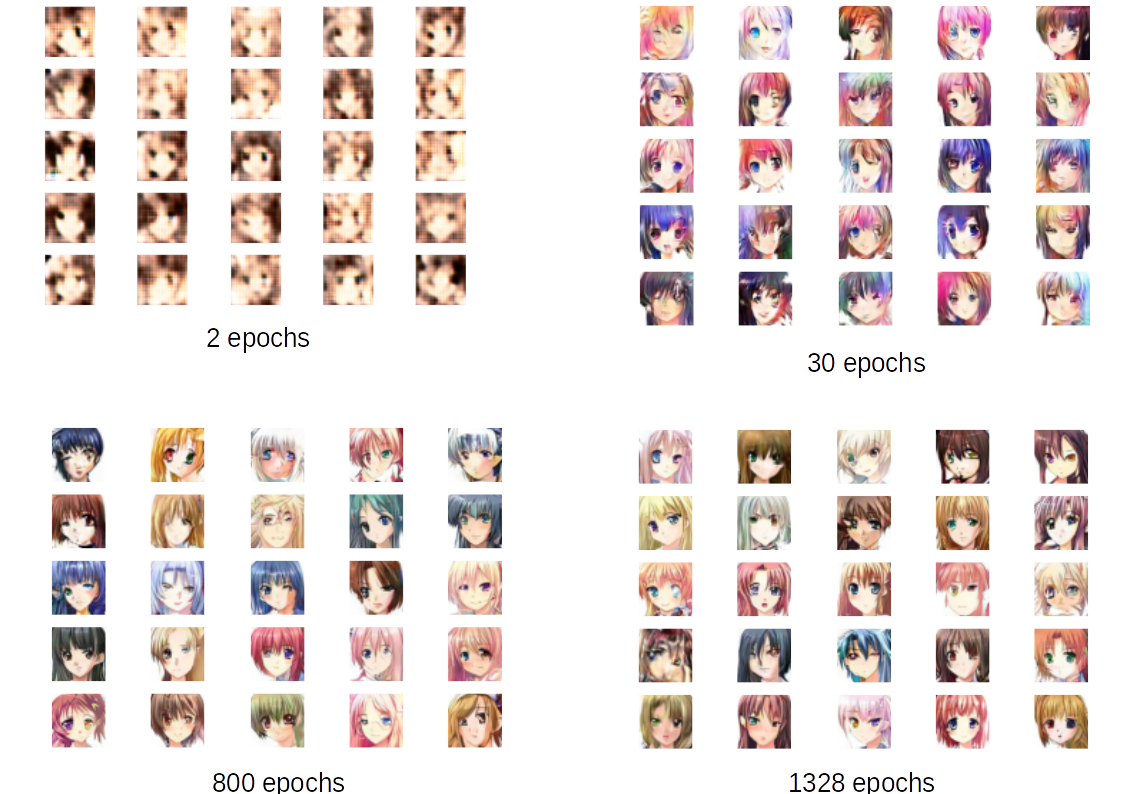

Convolutional

Convolutional architectures trained much more slowly then Dense, however they were able to produce high quality images in far fewer epochs. The convolutional networks produce far better images then the dense architectures, however they also required significantly more computing power to train. It took several days of continuous computation to produce good results, and my Nvidia RTX 2070 was maxed out the whole time with my computer unable to do anything else productive. This contrasted to the Dense architectures which did not seem to strain the computer to the same extent.

As you can see from the images above, the networks were eventually able to produce very realistic images. However if you look closely you will notice a few defects, particularly in and around the eyes.

Mode Collapse

If you look closely at some of the sample images, you can see that many of the faces often look very similar. This is a major problem with GANs and is known as mode collapse. Mode collapse occurs once the generator discovers a weakness in the discriminator and exploits it disproporionately and is similar to overfitting in other areas of AI. Once the generator has discovered an image that seems plausible to the discriminator it continues to produce many similar images as it knows this is "safe". This is usually not what you want, as typically a variety of images is desirable. Mode collapse seemed more common in the Dense architectures. Unfortunately, once mode collapse has occured, there is not much that can be done, and typically training has to be abandoned and restarted from the last known checkpoint that did not suffer from overfitting.

Notebook

You can download my jupyter-notebook here: https://www.jbm.fyi/static/gan.ipynb

This notebook explains in detail what it is doing, so it should be a good starting point for anyone trying to do something similar.